Distributed Timer Execution in a HA Cluster

Distributed Timer and Scheduler Execution in a ThingWorx High Availability (HA) Cluster

Written by Desheng Xu and edited by Mike Jasperson

Overview

Starting with the 9.0 release, ThingWorx supports an “active-active” high availability (or HA) configuration, with multiple nodes providing redundancy in the event of hardware failures as well as horizontal scalability for workloads that can be distributed across the cluster.

In this architecture, one of the ThingWorx nodes is elected as the “singleton” (or lead) node of the cluster. This node is responsible for managing the execution of all events triggered by timers or schedulers – they are not distributed across the cluster.

This design has proved challenging for some implementations, because a ThingWorx application that relies on complex timers and schedulers can generate an imbalanced workload across the cluster.

However, your ThingWorx applications can overcome this limitation, and still use timers and schedulers to trigger workloads that will distribute across the cluster. This article will demonstrate both how to reproduce this imbalanced workload scenario, and the approach you can take to overcome it.

Demonstration Setup

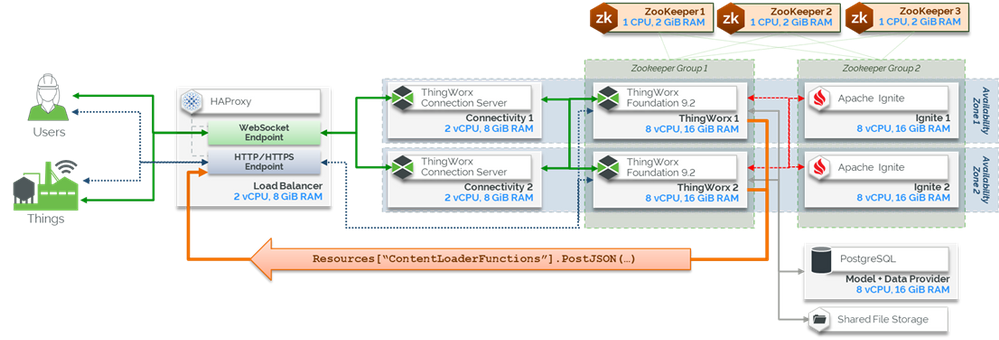

For purposes of this demonstration, a two-node ThingWorx cluster was used, similar to the deployment diagram below:

Demonstrating Event Workload on the Singleton Node

Imagine this simple scenario: You have a list of vendors, and you need to process some logic for one of them at random every few seconds.

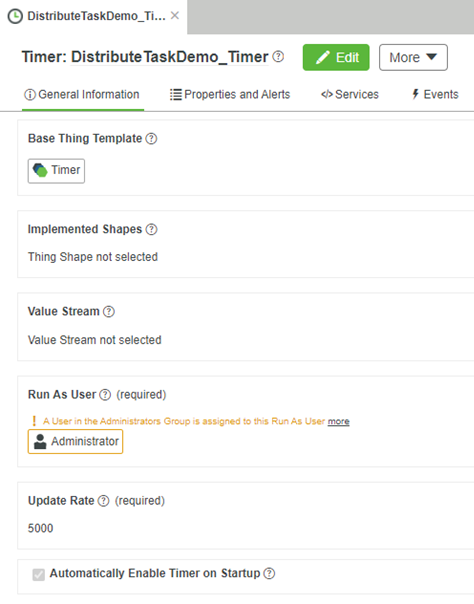

First, we will create a timer in ThingWorx to trigger an event – in this example, every 5 seconds.

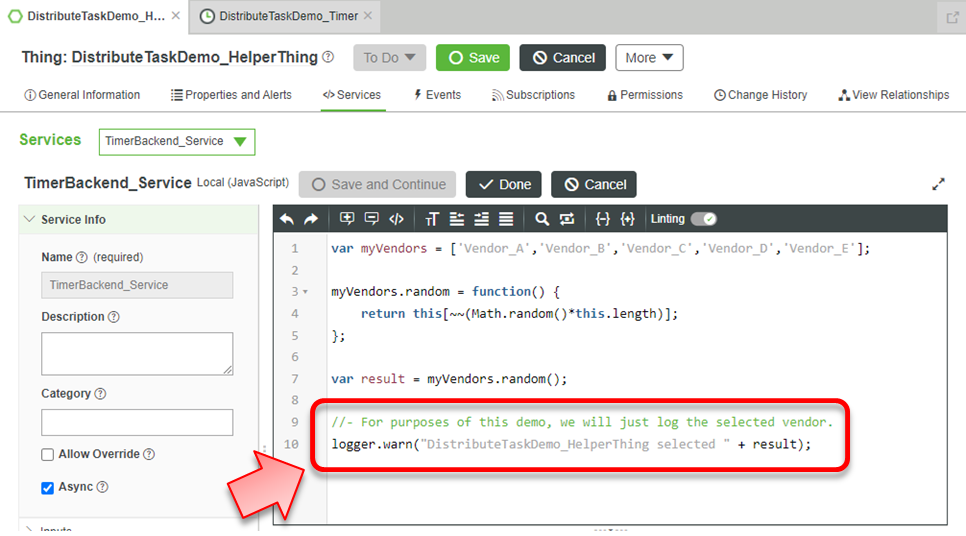

Next, we will create a helper utility with a service that randomly selects one of the vendors and processes some logic for it. In this case, it simply logs the selected vendor in the ThingWorx ScriptLog.
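
As a rough illustration of what such a helper service might contain, here is a minimal sketch. The vendor names and the function names `pickRandomVendor` and `timerBackendService` are hypothetical and do not come from the demo; `logger` is the object ThingWorx exposes to service scripts, and the sketch falls back to `console` when run elsewhere.

```javascript
// Hypothetical vendor list; the real list used in the demo is not shown in the article.
var vendors = ["VendorA", "VendorB", "VendorC"];

// Pick one entry uniformly at random.
// `rng` is optional and injectable so the selection can be tested deterministically.
function pickRandomVendor(list, rng) {
    if (!Array.isArray(list) || list.length === 0) {
        throw new Error("vendor list must be a non-empty array");
    }
    var random = rng || Math.random;
    var index = Math.floor(random() * list.length);
    return list[index];
}

// Inside a ThingWorx service the platform provides `logger`;
// outside of ThingWorx this sketch uses the console instead.
var log = typeof logger !== "undefined" ? logger : console;

// Stand-in for the body of the helper service: select a vendor and log it.
function timerBackendService() {
    var vendor = pickRandomVendor(vendors);
    log.info("Selected vendor: " + vendor);
    return vendor;
}
```

In an actual service the "process some logic" step would replace the single log statement.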

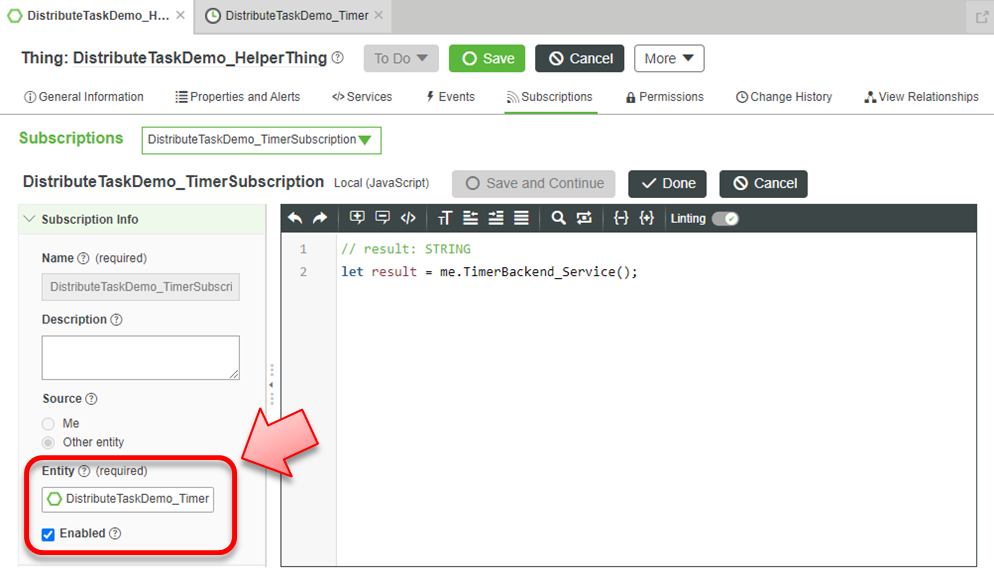

Finally, we will subscribe to the timer event, and call the helper utility:

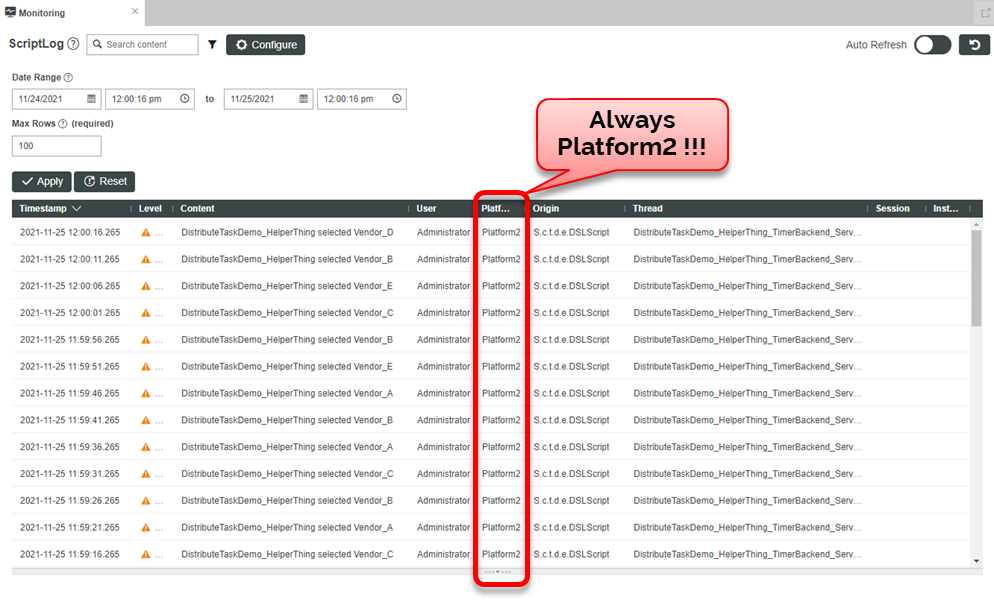

Now with that code in place, let's check where these services are being executed in the ScriptLog.

Look at the PlatformID column in the log. Notice that the Timer and the helper utility always run on the same node, in this case Platform2, which is the current singleton node in the cluster.

As the complexity of your helper utility increases, you can imagine how workload will become unbalanced, with the singleton node handling the bulk of this timer-driven workload in addition to the other workloads being spread across the cluster.

This workload can be distributed across multiple cluster nodes, but a little more effort is needed to make it happen.

Timers that Distribute Tasks Across Multiple ThingWorx HA Cluster Nodes

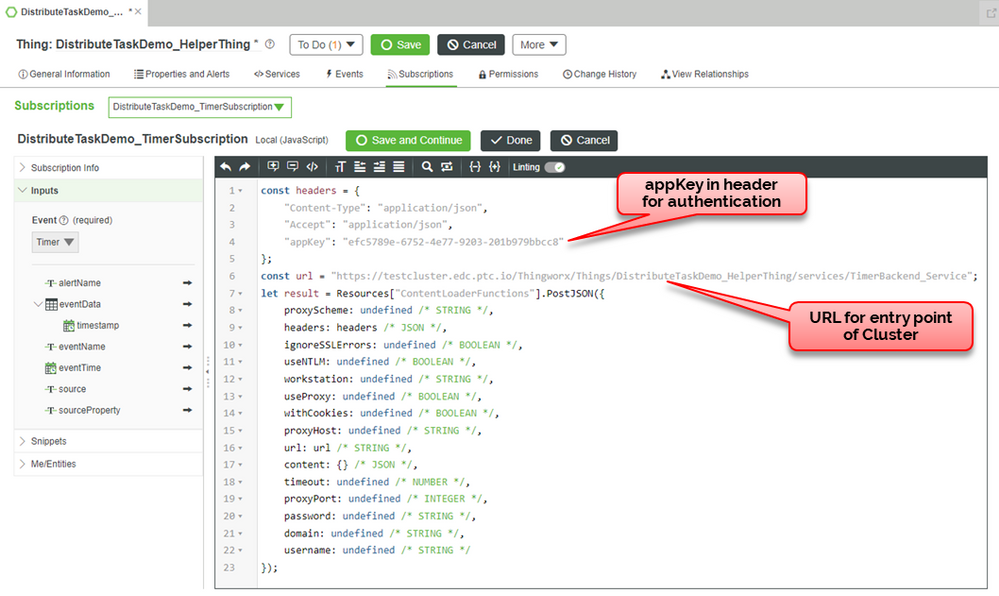

This time let’s update our subscription code – using the PostJSON service from the ContentLoader entity to send the service requests to the cluster entry point instead of running them locally.

    const headers = {
        "Content-Type": "application/json",
        "Accept": "application/json",
        "appKey": "INSERT-YOUR-APPKEY-HERE"
    };

    const url = "https://testcluster.edc.ptc.io/Thingworx/Things/DistributeTaskDemo_HelperThing/services/TimerBackend_Service";

    let result = Resources["ContentLoaderFunctions"].PostJSON({
        proxyScheme: undefined /* STRING */,
        headers: headers /* JSON */,
        ignoreSSLErrors: undefined /* BOOLEAN */,
        useNTLM: undefined /* BOOLEAN */,
        workstation: undefined /* STRING */,
        useProxy: undefined /* BOOLEAN */,
        withCookies: undefined /* BOOLEAN */,
        proxyHost: undefined /* STRING */,
        url: url /* STRING */,
        content: {} /* JSON */,
        timeout: undefined /* NUMBER */,
        proxyPort: undefined /* INTEGER */,
        password: undefined /* STRING */,
        domain: undefined /* STRING */,
        username: undefined /* STRING */
    });

Note that the URL used in this example, https://testcluster.edc.ptc.io/Thingworx, is the entry point of the ThingWorx cluster used for this demonstration. Replace it with your own cluster's entry point if you want to reproduce this in your environment.

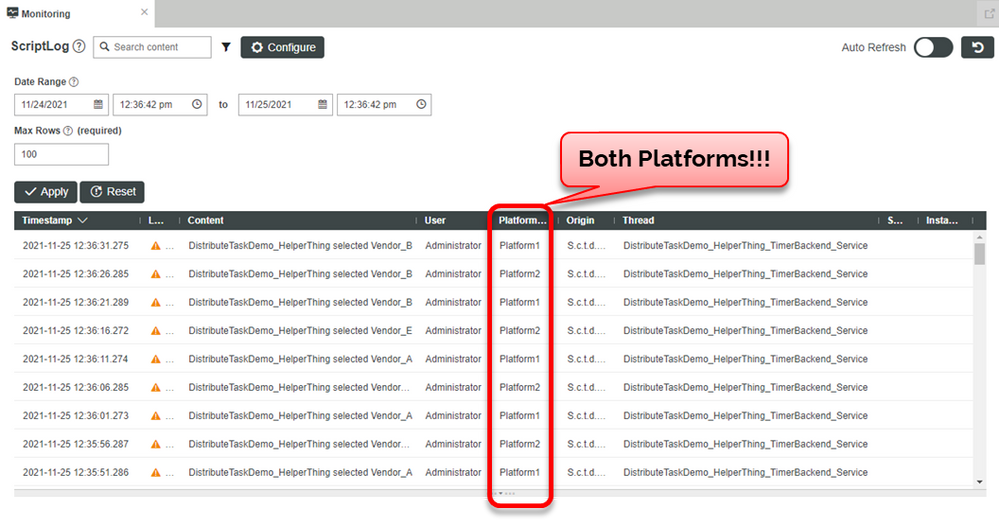

Now, let's check the result again.

Notice that the helper utility TimerBackend_Service is now running on both cluster nodes, Platform1 and Platform2.
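
One way to sanity-check the distribution is to tally log rows per PlatformID. The sketch below assumes the ScriptLog rows have been exported into an array of objects with a `platformId` field; that field name, the function name `countByPlatform`, and the sample rows are illustrative assumptions, not actual log output from the demo.

```javascript
// Illustrative rows only; they are not taken from the demo's ScriptLog.
var sampleRows = [
    { platformId: "Platform1" },
    { platformId: "Platform2" },
    { platformId: "Platform1" },
    { platformId: "Platform2" }
];

// Count how many rows were written by each platform node.
function countByPlatform(rows) {
    var counts = {};
    rows.forEach(function (row) {
        counts[row.platformId] = (counts[row.platformId] || 0) + 1;
    });
    return counts;
}

var counts = countByPlatform(sampleRows);
```

If all timer-driven work were still running on the singleton node, a tally like this would show a single PlatformID.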

Is this Magic? No! What is Happening Here?

The timer or scheduler itself is still executed on the singleton node, but instead of triggering the helper utility locally, the PostJSON service call from the subscription is now routed back to the cluster entry point, that is, the load balancer. As a result, the request is routed (usually round-robin) to one of the available cluster nodes that sit behind the load balancer and report as healthy.

Usually, the load balancer is configured with cookie-based affinity: it routes a request to the node that matches the cookie value carried by that request. Because each PostJSON call from the subscription is an independent REST request, any cookie returned with one response is not attached to the next request. As a result, cookie-based affinity does not interfere with round-robin routing in this case.
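
The routing behavior described above can be illustrated with a toy model. This is only a sketch of the idea, not how any particular load balancer is implemented: the cookie name `AFFINITY` and all function names are made up for the example, and the node names mirror the two-node demo cluster.

```javascript
// Toy load balancer: sticky when a request carries a known affinity cookie,
// round-robin otherwise.
function createLoadBalancer(nodes) {
    var next = 0;
    return {
        route: function (request) {
            var cookie = request.cookies && request.cookies.AFFINITY;
            if (cookie && nodes.indexOf(cookie) !== -1) {
                // Affinity cookie present: keep the client on the same node.
                return { node: cookie, setCookie: cookie };
            }
            // No cookie: hand the request to the next node in turn.
            var node = nodes[next % nodes.length];
            next += 1;
            return { node: node, setCookie: node };
        }
    };
}

// A client that never sends cookies back, like the REST call from the subscription.
function sendWithoutCookies(lb, count) {
    var hits = [];
    for (var i = 0; i < count; i++) {
        hits.push(lb.route({ cookies: {} }).node);
    }
    return hits;
}

// A client that replays the cookie it received, like a browser session.
function sendWithCookies(lb, count) {
    var hits = [];
    var cookies = {};
    for (var i = 0; i < count; i++) {
        var response = lb.route({ cookies: cookies });
        cookies = { AFFINITY: response.setCookie };
        hits.push(response.node);
    }
    return hits;
}

var nodes = ["Platform1", "Platform2"];
var stateless = sendWithoutCookies(createLoadBalancer(nodes), 4);
// stateless is ["Platform1", "Platform2", "Platform1", "Platform2"]
var sticky = sendWithCookies(createLoadBalancer(nodes), 4);
// sticky is ["Platform1", "Platform1", "Platform1", "Platform1"]
```

The cookie-less client alternates between nodes, while the cookie-replaying client stays pinned to the first node it was given.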

Considerations When Using this Approach

Authentication: As illustrated in the demo, make sure to use an Application Key with an appropriate user assigned in the header. You could alternatively use username/password or a token to authenticate the request, but this could be less ideal from a security perspective.

App Deployment: The hostname in the URL must match the hostname of the cluster entry point. Because the URL is now part of your code, you will need to modify the hostname/port/protocol in the URL whenever you deploy this code from one ThingWorx instance to another.

- Consider creating a variable in the helper utility which holds the hostname/port/protocol value, making it easier to modify during deployment.
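
A minimal sketch of that suggestion follows. The variable name `CLUSTER_ENTRY_POINT` and the function `buildServiceUrl` are hypothetical; the entry point value and the Thing and service names are the ones used in this article's demo.

```javascript
// Single place to change when moving the code between environments.
var CLUSTER_ENTRY_POINT = "https://testcluster.edc.ptc.io/Thingworx";

// Build the REST URL for a service on a Thing from the configured entry point.
function buildServiceUrl(entryPoint, thingName, serviceName) {
    var base = entryPoint.replace(/\/+$/, ""); // drop any trailing slashes
    return base +
        "/Things/" + encodeURIComponent(thingName) +
        "/services/" + encodeURIComponent(serviceName);
}

var url = buildServiceUrl(
    CLUSTER_ENTRY_POINT,
    "DistributeTaskDemo_HelperThing",
    "TimerBackend_Service"
);
```

With this in place, only the entry point value needs to change at deployment time, and the resulting `url` can be passed to PostJSON as before.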

Firewall Rules: If your load balancer has firewall rules that limit traffic to specific known IP addresses, you will need to determine which IP addresses are used when a service is invoked from each of the ThingWorx cluster nodes, and then configure the load balancer to allow traffic from each of these public IP addresses.

- Alternatively, you could configure an internal IP address endpoint for the load balancer and use the local /etc/hosts name resolution of each ThingWorx node to point to the internal load balancer IP, or register this internal IP in an internal DNS as the cluster entry point.