Thingworx Analytics Introduction 2/3: Non Time Series TTF Prediction

This is the 2nd article in the series Thingworx Analytics Introduction.

The 1st article introduces Analytics functions and modules.

The 3rd article introduces Time Series TTF prediction.

This article covers TTF (Time to Failure) prediction, which is quite useful in IoT applications.

Environment: Thingworx Platform 8.5, Analytics Server 8.5, Analytics Extension 8.5.

Step 1, configure Analytics settings.

Click the Analytics icon >> Analytics Manager >> Analysis Providers, and create a new Analysis Provider with Connector = TW.AnalysisServices.AnalyticsServer.AnalyticsServerConnector.

Click Analytics Builder >> Setting, then select and set the Analytics Server.

Step 2, create Analysis Data.

We need to prepare a data file in CSV format and a data type (metadata) file in JSON format.

The 1st line of the CSV file is its header, and the column names in it must match the fieldName definitions in the JSON file.

The JSON file can follow this structure:

---------------------------------------------------

[
  {
    "fieldName": "s2",
    "values": null,
    "range": null,
    "dataType": "DOUBLE",
    "opType": "CONTINUOUS",
    "timeSamplingInterval": null,
    "isStatic": false
  },
  {
    "fieldName": "s3",
    "values": null,
    "range": null,
    "dataType": "DOUBLE",
    "opType": "CONTINUOUS",
    "timeSamplingInterval": null,
    "isStatic": false
  }
]

---------------------------------------------------
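Writing the JSON file by hand gets tedious when the CSV has many columns. As a minimal sketch, the metadata can be generated from the CSV header so the two files cannot drift apart. The helper names build_metadata and read_header are hypothetical, and the sketch assumes every column is a continuous DOUBLE field, as in the sample above; other column types would need different dataType and opType values.

```python
import csv
import io
import json


def read_header(csv_text):
    """Return the first CSV line as a list of column names."""
    return next(csv.reader(io.StringIO(csv_text)))


def build_metadata(header):
    """Return one metadata entry per CSV column, mirroring the sample layout."""
    entries = []
    for name in header:
        entries.append({
            "fieldName": name,
            "values": None,
            "range": None,
            # Assumption: every column is a continuous numeric reading.
            "dataType": "DOUBLE",
            "opType": "CONTINUOUS",
            "timeSamplingInterval": None,
            "isStatic": False,
        })
    return entries


if __name__ == "__main__":
    # Made-up sample values, for illustration only.
    sample_csv = "s2,s3\n641.82,1589.70\n"
    print(json.dumps(build_metadata(read_header(sample_csv)), indent=2))
```

The output can be saved as the JSON file and then reviewed in the upload dialog, where the types of individual fields can still be corrected.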

The CSV file includes the following information:

- goalField, for result checking.

- Key parameter fields.

- A data filter field, e.g. we can use a field such as record_purpose to separate training data from scoring data: training data is for model training, and scoring data is for validation or test.

- If the dataset is time series data, we need to create 2 additional fields to identify AssetID (sequence of failure) and Cycle (cycle number inside AssetID).
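The goal and filter fields usually have to be derived before upload. The following is only a sketch under stated assumptions: the raw data is run-to-failure data with an asset identifier and a cycle counter, the last recorded cycle of each asset is treated as its failure point, and the column name TTF and the labels "training" and "scoring" are hypothetical choices, not names required by Analytics.

```python
from collections import defaultdict


def add_ttf_and_purpose(rows, scoring_assets):
    """Return copies of the rows with a TTF goal value and a record_purpose label.

    rows: list of dicts with at least "AssetID" and "Cycle" keys.
    scoring_assets: set of AssetID values reserved for scoring.
    """
    # Assumption: the last observed cycle of an asset is its failure point.
    last_cycle = defaultdict(int)
    for row in rows:
        last_cycle[row["AssetID"]] = max(last_cycle[row["AssetID"]], row["Cycle"])

    labelled = []
    for row in rows:
        out = dict(row)  # leave the input rows untouched
        out["TTF"] = last_cycle[row["AssetID"]] - row["Cycle"]
        is_scoring = row["AssetID"] in scoring_assets
        out["record_purpose"] = "scoring" if is_scoring else "training"
        labelled.append(out)
    return labelled
```

Splitting by asset rather than by row keeps all cycles of one failure sequence on the same side of the filter.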

Here I used a public NASA dataset for training. Download URL:

https://c3.nasa.gov/dashlink/resources/139/

When the data is ready, click Analytics Builder >> Data >> New...

Select the CSV file and the JSON file, and check the option “Review uploaded metadata”.

For most parameter fields, the data type should be Continuous.

For the goal field, the data type should be Continuous or Boolean; in this example we use Continuous.

Click “Create Dataset”.

When it’s done, the newly created dataset will be shown in the Datasets list.

Select the dataset, and click Dataset >> View >> Filters >> New to create a filter.

Step 3, create Machine Learning model.

Click Analytics Builder >> Models >> New.

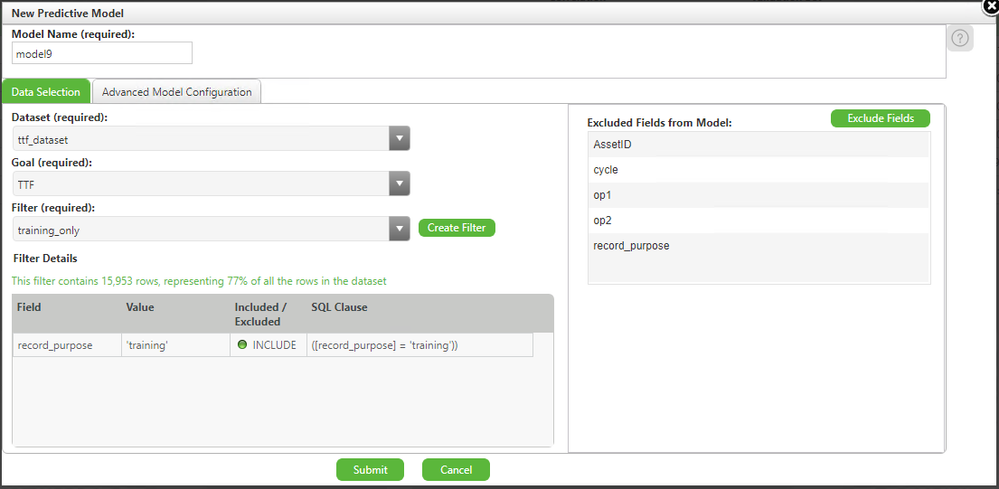

Select the dataset, the goal field, and the filter, then select the fields to exclude:

Click Advanced Model Configuration:

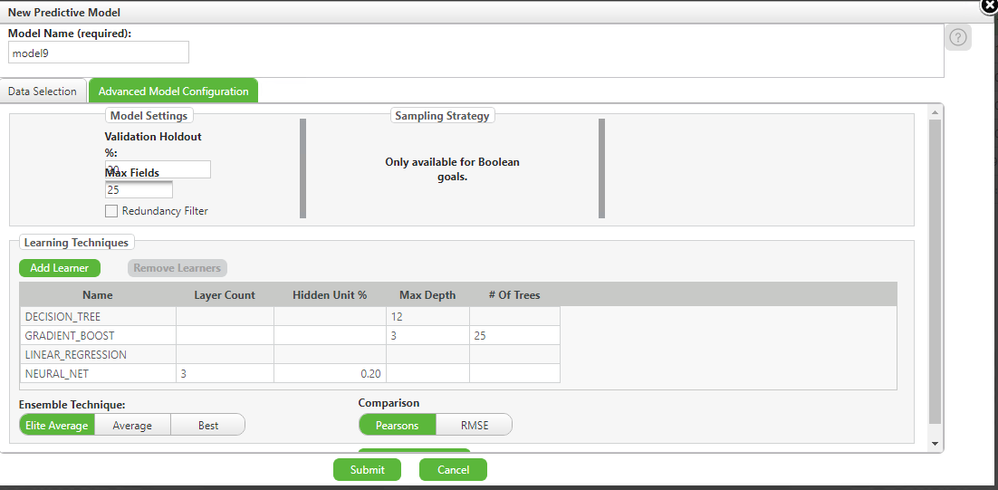

In Machine Learning, we normally split data into a training set, a validation set, and a test set, typically in a ratio of 60%, 20%, 20%.

In this step, we can use the Filter to exclude test data, and use Validation Holdout % to define the percentage of data held out for validation; the default setting of 20% is fine.
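To see what these percentages mean in row counts, here is a small worked sketch. It assumes the Validation Holdout % is applied to the rows that remain after the filter has removed the test data; that is an assumption about the tool's behavior, not something confirmed here. The function name split_counts is hypothetical.

```python
def split_counts(total_rows, test_fraction=0.20, validation_holdout=0.20):
    """Return row counts per set when the test rows are filtered out first."""
    test = round(total_rows * test_fraction)
    remaining = total_rows - test
    # Assumption: the holdout percentage applies to the filtered rows only.
    validation = round(remaining * validation_holdout)
    training = remaining - validation
    return {"training": training, "validation": validation, "test": test}


if __name__ == "__main__":
    print(split_counts(1000))                           # default 20% holdout
    print(split_counts(1000, validation_holdout=0.25))  # holdout tuned for 60/20/20
```

Under that assumption, a 20% test filter plus the default 20% holdout gives roughly 64% / 16% / 20% of the full dataset, while a holdout of 25% would reproduce the 60% / 20% / 20% split.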

Learning Techniques lists the available Machine Learning algorithms; we can manually add algorithms and modify their parameters.

For each new model, I’d suggest training it with different algorithms and testing them to find the most accurate one.

The same algorithm can also be trained with different parameters.

Ensemble Technique is the method used to combine multiple algorithms; we can try and test different settings.
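One simple way to decide which model is "most accurate" for a Continuous goal is to score each model's predictions on the same held-out rows with RMSE (root mean squared error). The sketch below is a generic offline comparison, not the statistic Analytics Builder itself reports; the model names and numbers in the usage example are made up.

```python
import math


def rmse(actual, predicted):
    """Root mean squared error between two equally long sequences."""
    if not actual or len(actual) != len(predicted):
        raise ValueError("actual and predicted must be non-empty and equally long")
    squared = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    return math.sqrt(squared / len(actual))


def best_model(actual, predictions_by_model):
    """Return (name of the model with the lowest RMSE, all scores)."""
    scores = {name: rmse(actual, preds) for name, preds in predictions_by_model.items()}
    return min(scores, key=scores.get), scores


if __name__ == "__main__":
    actual_ttf = [10, 20, 30]  # made-up values
    candidates = {
        "model_a": [12, 18, 33],
        "model_b": [10, 25, 30],
    }
    print(best_model(actual_ttf, candidates))
```

Lower RMSE is better, and because errors are squared, a model with one large miss can score worse than a model with several small ones.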

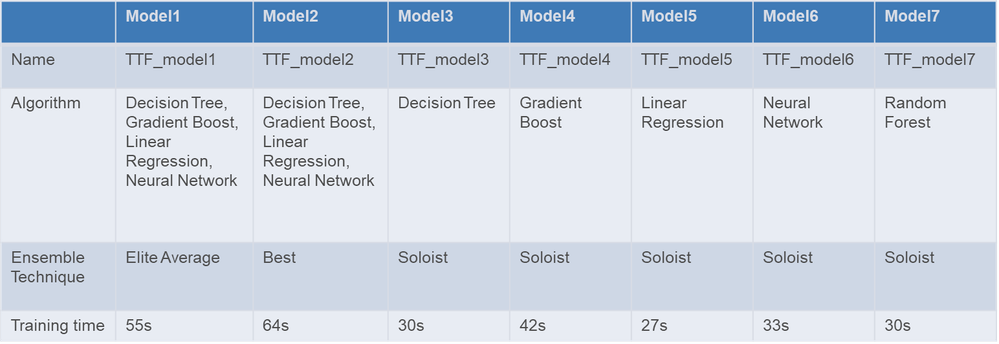

My training methods:

Click Submit to create model.

A small dataset requires little training time, while time series data requires much longer.

For any dataset, the larger the data size, the longer the training time.

When it’s done, we can find the new model in Analytics Builder >> Models.

Select the model and click View to see the model information.

Select the model and click Publish to publish it to Analytics Manager. It then appears in Analytics Manager >> Analysis Models, and a Test Page will pop up.

Step 4, test model.

The test page pops up when the model is published, and we can also access it this way: click Analytics Manager >> Analysis Models, select the model, and click View >> Test:

For causalTechnique, we normally set FULL_RANGE.

For goalField, input the name of the goal field.

Then input the values of all features/sensors, and click Add Row. For time series data, we need to add multiple rows.

Select the 1st row, click Set Parent Row, then click Submit Job.

The system will calculate the result based on the model and the input values.

The calculation might take a few seconds.

Under Results Data Shape below, select AnalyticsServerConnector.xxx and click Refresh Job, then you can see the result value.

For more detailed information, check Analysis Jobs.

Step 5, set automatic calculation.

After the model is created, we might monitor it for some time to compare the predictions with actual values.

Taking the NASA data as an example, we could use some of its data for comparison. You may refer to the steps below.

First, build a Data Table and load the CSV data into it.

Then build a Thing, and create properties that map to the model features.

Then create a Timer to read data from the Data Table and write the values into the Thing periodically, and use this data for testing.

Click Analytics Manager >> Analysis Models, and enable the newly created model.

Click Analysis Events >> New, set Source Type = Thing, set Source to the newly created Thing, set Event = DataChange, and set Property to the trigger property.

Save to create the new Event under Analysis Events, then click Map Data >> Inputs Mapping, set Source Type = Thing, and bind the model features to the Thing properties.

Tip: model feature names start with _, and causalTechnique and goalField can use static values. So if we define properties with these names in the Thing, we can use Map All in this step, which maps all features automatically.
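As a small sketch of that naming tip, the list of Thing property names needed for Map All can be derived from the dataset's field names. This assumes each model feature name is simply the field name with a leading underscore, which should be checked against the names shown on the Inputs Mapping page; the function name thing_property_names is hypothetical.

```python
def thing_property_names(feature_fields):
    """Return the property names a Thing would need for Map All to match."""
    # causalTechnique and goalField are inputs too; they can hold static values.
    names = ["causalTechnique", "goalField"]
    # Assumption: a model feature is named "_" + the dataset field name.
    names.extend("_" + field for field in feature_fields)
    return names


if __name__ == "__main__":
    print(thing_property_names(["s2", "s3"]))
```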

Then click Results Mapping, and bind the test result to a Thing property. Please note that the system-generated result will be updated, but will NOT be logged into the Value Stream, so we need to create another property and use a Service to sync the data and eventually log it to the Value Stream.

When the Event is set up, the system will monitor and trigger automatically, and output the value by calling the internal Analytics API.

For both manual tests and Event calculations, we can see the details in Analysis Jobs.

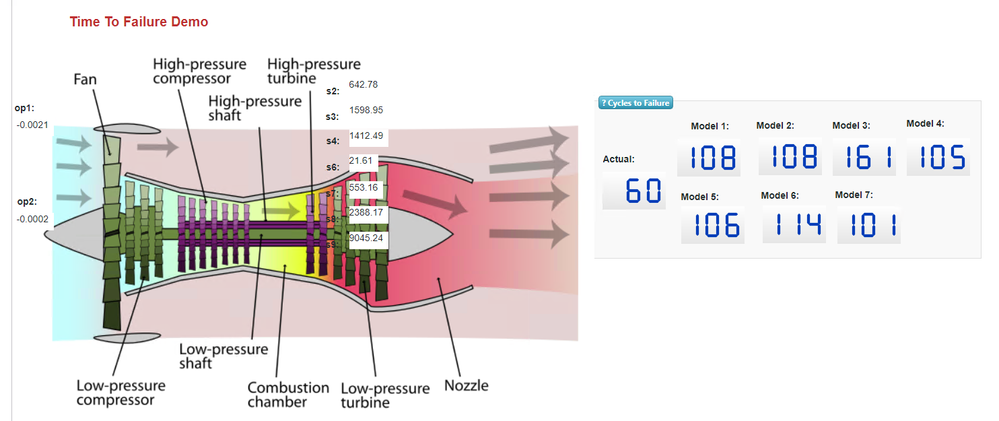

My TTF Demo Mashup for reference:

Comparison of different models:

Some notes:

- If you didn’t install Analytics Platform, then Analytics will run jobs in Synchronous mode. That means if many jobs are submitted at the same time, only 1 job will be running at any moment, and the other jobs will be pending (state=waiting). To avoid waiting jobs, we can manually create multiple trigger properties, and use PAUSE in JavaScript to delay them on purpose.

- If the raw data has many features, we can first build a smaller model with 2 features and use it to train and test, in order to find issues in modeling and to optimize the logic in the Data Table/Thing/Timer, then extend to all features.

- Be cautious when using a Timer, because wrong operations might write a mass of data into the Value Stream, or generate lots of Waiting Jobs. Too many Waiting Jobs will block analysis and prediction. We can use the Resource TW.AnalysisServices.JobManagementServicesAPI.DeleteJobs to force-delete jobs, and we can check the total number of Jobs by running the Data Table Service TW.AnalysisServices.AnalysisDataTable.GetDataTableEntryCounts.
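To get a feel for how quickly waiting jobs pile up in Synchronous mode, the effect can be simulated offline. This is a simplified model under stated assumptions (a single worker, a fixed duration per job, jobs served in arrival order), not a description of the actual scheduler; the function name peak_waiting_jobs is hypothetical.

```python
def peak_waiting_jobs(arrival_times, job_duration):
    """Simulate one worker and return the largest number of jobs waiting at once."""
    starts = []
    worker_free_at = 0
    peak = 0
    for arrival in sorted(arrival_times):
        # A job starts when it arrives or when the worker is free, whichever is later.
        start = max(arrival, worker_free_at)
        worker_free_at = start + job_duration
        starts.append(start)
        # Jobs that have arrived but whose start lies in the future are waiting.
        waiting = sum(1 for s in starts if s > arrival)
        peak = max(peak, waiting)
    return peak


if __name__ == "__main__":
    # Five triggers fired together versus spaced by the job duration (made-up timings).
    print(peak_waiting_jobs([0, 0, 0, 0, 0], job_duration=2))
    print(peak_waiting_jobs([0, 2, 4, 6, 8], job_duration=2))
```

In this model, spacing the triggers by at least one job duration keeps the waiting count at zero, which is the intent behind delaying the trigger properties on purpose.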
