

Example: Downsampling timeseries data for mashups using LTTB

In this post, I show how you can downsample time-series data on the server side using the LTTB algorithm.

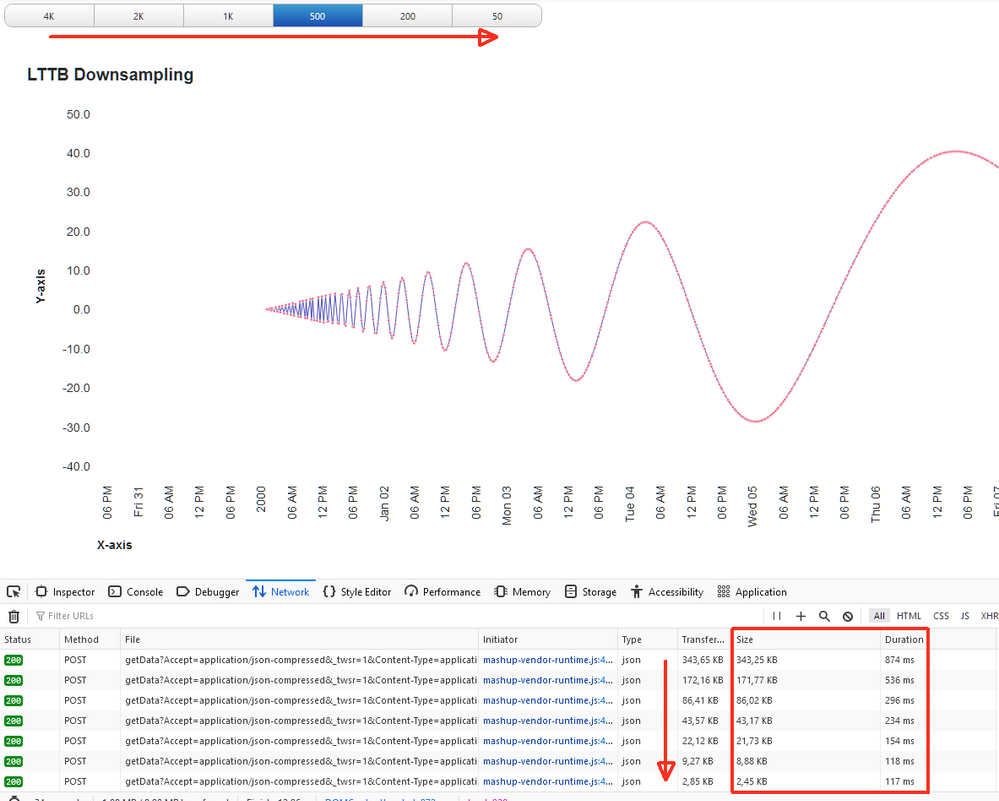

The export comes with a service to set up sample data and a mashup that shows the data with weak to strong downsampling.

Motivation:

- Users displaying time-series data on mashups and dashboards (usually via a service using a QueryPropertyHistory flavor in the background) might request large amounts of data, either by selecting large date ranges or because the data is recorded at high resolution.

- The newer chart widgets in ThingWorx handle a higher number of data points much better. Some also provide their own downsampling so that only the "necessary" points are drawn (e.g. there is no need to paint beyond the screen's resolution). See the discussion here.

- However, as this is done in the widgets, the data reduction happens on the client side, so data is sent over the network only to be discarded.

- It would be beneficial to reduce the number of points delivered to the client beforehand. This would also improve the behavior of older widgets which don’t have support for downsampling.

- Many methods for downsampling are available. One option is partitioning the data and averaging each partition, as described here. A disadvantage is that this creates and displays points that are not in the original data. The approach here uses Largest-Triangle-Three-Buckets (LTTB) for two reasons: the resulting data points exist in the original data set, and the algorithm preserves the shape of the original curve very well, i.e. outliers are displayed rather than averaged out. It also seems to be computationally inexpensive on the server.
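For contrast, the partition-averaging approach mentioned above fits in a few lines. This is a minimal Python sketch, not code from the export: the helper `bucket_average` is hypothetical, and it assumes points are `(timestamp, value)` tuples sorted by time. It makes the stated disadvantage visible: every output point is synthetic, and a single spike gets diluted by its neighbours.

```python
from typing import List, Tuple

Point = Tuple[float, float]  # (timestamp, value), sorted by timestamp


def bucket_average(points: List[Point], n_buckets: int) -> List[Point]:
    """Partition points into n_buckets contiguous chunks and replace each
    chunk by its mean timestamp and mean value (hypothetical helper)."""
    if n_buckets <= 0:
        raise ValueError("n_buckets must be positive")
    n = len(points)
    if n_buckets >= n:
        return list(points)  # nothing to reduce
    out: List[Point] = []
    for b in range(n_buckets):
        # Integer bounds keep every chunk non-empty and use every point once.
        chunk = points[b * n // n_buckets:(b + 1) * n // n_buckets]
        t = sum(p[0] for p in chunk) / len(chunk)
        v = sum(p[1] for p in chunk) / len(chunk)
        out.append((t, v))
    return out


points = [(float(i), 100.0 if i == 5 else 0.0) for i in range(10)]
print(bucket_average(points, 2))
```

With ten flat points and one spike of 100 at t=5, two buckets yield `(2.0, 0.0)` and `(7.0, 20.0)`: neither point exists in the input, and the spike shrinks to 20. LTTB avoids both effects by always picking an original point from each bucket.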

Setting it up:

- Import Entities from LTTB_Entities.xml

- Navigate to the thing LTTB.TestThing in project LTTB and run the service downsampleSetup to set up some sample data

- Open mashup LTTB.Sampling_MU:

- Initially, there are 8000 rows sent back. The chart widget decides how many of them are displayed. You can see the rowcount in the debug info.

- Using the button bar, you determine how many points the result will be downsampled to before it is sent to the client. Notice how the curve gets rougher, but the shape is preserved.

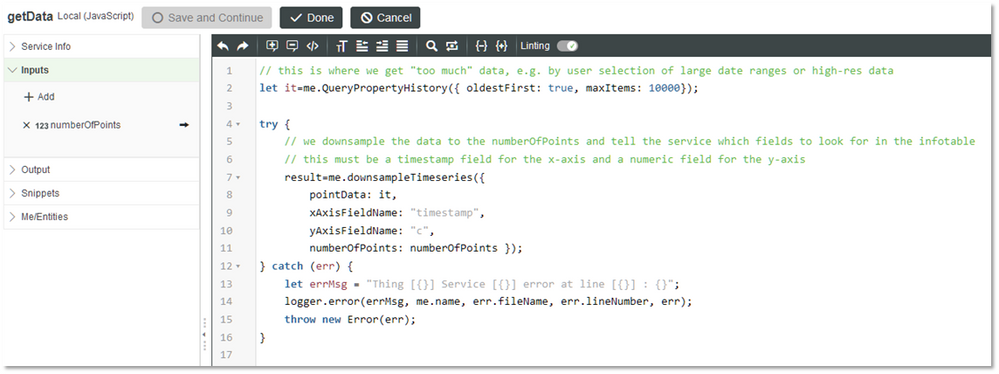

- How it works: the potentially large result of QueryPropertyHistory is downsampled by running it through LTTB, and the resulting Infotable is sent to the widget (see the service LTTB.TestThing.getData). The LTTB implementation itself is in the service downsampleTimeseries.
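To make that flow concrete, here is a minimal Python sketch of LTTB (following Sveinn Steinarsson's published algorithm) applied to rows shaped like an Infotable. It is not the downsampleTimeseries service from the export: the names `lttb_indices` and `downsample_rows`, the dict-per-row shape, and the column names `timestamp` and `value` are all assumptions for illustration. The first and last points are always kept; every inner bucket contributes the one original point that forms the largest triangle with the previously kept point and the average of the next bucket.

```python
import math
from typing import Dict, List, Sequence


def lttb_indices(xs: Sequence[float], ys: Sequence[float], threshold: int) -> List[int]:
    """Return the indices LTTB keeps; xs must be sorted ascending."""
    n = len(xs)
    if len(ys) != n:
        raise ValueError("xs and ys must have the same length")
    if threshold >= n:
        return list(range(n))  # nothing to reduce
    if threshold < 3:
        raise ValueError("threshold must be at least 3")

    every = (n - 2) / (threshold - 2)  # bucket width for the inner points
    kept = [0]  # the first point is always kept
    a = 0  # index of the previously kept point
    for i in range(threshold - 2):
        # The average of the *next* bucket is the triangle's third vertex.
        nxt_start = int(math.floor((i + 1) * every)) + 1
        nxt_end = min(int(math.floor((i + 2) * every)) + 1, n)
        avg_x = sum(xs[nxt_start:nxt_end]) / (nxt_end - nxt_start)
        avg_y = sum(ys[nxt_start:nxt_end]) / (nxt_end - nxt_start)

        # In the current bucket, keep the point with the largest triangle area.
        cur_start = int(math.floor(i * every)) + 1
        cur_end = int(math.floor((i + 1) * every)) + 1
        best, best_area = cur_start, -1.0
        for j in range(cur_start, cur_end):
            area = abs((xs[a] - avg_x) * (ys[j] - ys[a])
                       - (xs[a] - xs[j]) * (avg_y - ys[a])) / 2
            if area > best_area:
                best, best_area = j, area
        kept.append(best)
        a = best
    kept.append(n - 1)  # the last point is always kept
    return kept


def downsample_rows(rows: List[Dict], threshold: int,
                    x_key: str = "timestamp", y_key: str = "value") -> List[Dict]:
    """Downsample Infotable-like rows (hypothetical dict shape) with LTTB."""
    xs = [float(r[x_key]) for r in rows]
    ys = [float(r[y_key]) for r in rows]
    return [rows[i] for i in lttb_indices(xs, ys, threshold)]
```

Because the function returns a subset of the input rows, the widget receives only points that were actually recorded, and a lone outlier such as a spike survives the reduction instead of being averaged away.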

Debug mode lets you see how much data is sent over the network, and how that amount decreases in proportion to the downsampling.


The export and the widget were made with TWX 9, but only the widget really requires TWX 9. I guess the code would need some more error checking for robustness, but it's a good starting point.


Kudos for converting this code to work in TWX! The issue, though, is that you are still returning a set of 10000 rows and THEN downsampling. Some TWX instances will exhibit performance issues with Value Stream (VS) queries that return this much data. I've run similar code in Azure as a function that returns a downsampled dataset from Azure SQL to TWX, and performance was superb.

Another strategy, which we're implementing right now with Cosmos as the primary time-series data store, is to use the Cosmos to Synapse link and downsample the data in daily jobs. The downsampled results are stored back in Cosmos in specific containers for 30-day, 90-day, 6-month and 1-year datasets. This means that when a user wants to see a one-year plot of their data, it's already downsampled and ready to go. The only issue with this approach is controlling data granularity if a user wants to zoom in on the plot anywhere past 30 days, because we have a 30-day TTL on Cosmos data. Anything older goes to Data Lake Storage, and Synapse pulls data from there as well.
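The reply does not include code, but the read side of such a tiered setup can be sketched: given the time range a user wants to plot, query the smallest precomputed dataset that covers it. This is a hypothetical Python sketch; the container names, the function `pick_container`, and the 182-day approximation of six months are assumptions, not details of the setup described above.

```python
from datetime import timedelta
from typing import List, Tuple

# Hypothetical container names, one per precomputed view width from the reply.
# Six months is approximated as 182 days (assumption).
TIERS: List[Tuple[timedelta, str]] = [
    (timedelta(days=30), "downsampled_30d"),
    (timedelta(days=90), "downsampled_90d"),
    (timedelta(days=182), "downsampled_6m"),
    (timedelta(days=365), "downsampled_1y"),
]


def pick_container(requested: timedelta) -> str:
    """Return the smallest precomputed tier whose window covers the range."""
    for window, name in TIERS:
        if requested <= window:
            return name
    # Assumption: ranges longer than a year reuse the coarsest tier.
    return TIERS[-1][1]
```

The trade-off mentioned in the reply shows up here directly: once a range maps to a coarse tier, zooming in cannot recover detail unless a finer source (such as the raw data within its TTL) is queried instead.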


Thanks for sharing this. Indeed, this is a "workaround" for the fact that the QueryPropertyHistory services do not offer downsampling capabilities, so you have to do it afterwards; at least the data doesn't travel the network for nothing. What you did is the most direct way: making use of the capabilities available directly in the storage system. Precomputing is another solution, but it uses more storage, which is fair if you know the data will be used. In the end, the use case determines where you want to be in the triangle of speed, storage and memory. But it's always nice to have options 🙂


Thanks for sharing, Rocko.

It's an interesting and somewhat non-trivial topic and it's always great to read about someone else's view and approach on dealing with such things.

Explaining the reasons for, and necessity of, downsampling data (at least for output; storage is a different, erm... story) matters: apparently, most of my customers didn't think about it until someone highlighted the topic.

Even if the performance and technical issues (like outputting 15,000 points on a 4K display) did not apply, the problem of end users comprehending such amounts of data still stands, so something must be done about it.

On my last project, the customer was monitoring its manufacturing equipment and wanted a graph giving an overview of the status and behaviour of a particular unit, so that a single glance would show whether there were any issues (exceeded thresholds, changed patterns).

We did a PoC with candlestick charts showing the 25th/75th percentiles, max/min and median, precalculated for hourly, daily, weekly and monthly periods, but haven't managed to bring it to the production environment yet.
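The PoC code is not shown, but the precalculation step can be sketched: group samples into fixed periods and compute the five candlestick statistics for each. This hypothetical Python sketch uses the standard library's `statistics.quantiles` with the `inclusive` method, which is an assumption (other percentile definitions give slightly different values). Buckets are fixed-length and aligned to the Unix epoch, so hourly and daily periods work as-is, weekly buckets would start on Thursdays (1 Jan 1970 was a Thursday), and monthly periods would need calendar-aware grouping instead.

```python
import statistics
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

Candle = Tuple[float, float, float, float, float]  # (min, q1, median, q3, max)


def candles(points: Iterable[Tuple[float, float]],
            period_s: int = 3600) -> Dict[int, Candle]:
    """Group (unix_seconds, value) samples into epoch-aligned periods and
    compute min, 25th percentile, median, 75th percentile and max for each."""
    buckets: Dict[int, List[float]] = defaultdict(list)
    for ts, v in points:
        buckets[int(ts // period_s) * period_s].append(v)
    out: Dict[int, Candle] = {}
    for start in sorted(buckets):
        vals = sorted(buckets[start])
        if len(vals) == 1:
            q1 = med = q3 = vals[0]  # a single sample defines every statistic
        else:
            q1, med, q3 = statistics.quantiles(vals, n=4, method="inclusive")
        out[start] = (vals[0], q1, med, q3, vals[-1])
    return out
```

For daily candles, pass `period_s=86400`; each key is the period's start time in Unix seconds.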

There is a nice video from the makers of TimescaleDB giving an overview of some approaches to downsampling time-series data for graphing:

https://www.youtube.com/watch?v=sOOuKsipUWA


Hi @Rocko, nice post. I am unable to download the attachment LTTB_Entities.xml. Could you please help with it?


@slangley Could you take a look at this? I can't download it myself, nor can I add it again to the post or a reply.


Attachment was fixed.


Hi all, I agree that the downsampling, and possibly interpolation, should be done server-side in the database.

I have created a ThingShape for running Flux queries against InfluxDB. Adding this ThingShape to a Thing gives you a service that works much like QueryNamedProperties, but with the option to specify how many rows you want returned from InfluxDB, or to specify a specific interval. InfluxDB will then downsample and calculate means before sending the data to ThingWorx.

To use this, you will need a valid token for your InfluxDB database, so it will not work in PTC SaaS for ThingWorx.
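The ThingShape's code is not shown, but the core idea of "ask for N rows and let InfluxDB compute the means" can be sketched as building a Flux query with `aggregateWindow`. This hypothetical Python helper `flux_downsample_query` derives the window width from the requested row count; the bucket, measurement and field names are placeholders, and the query would still have to be sent to InfluxDB's query API with the token mentioned above. Note that `aggregateWindow` stamps each mean with the window's stop time by default.

```python
from datetime import datetime, timezone


def _rfc3339(dt: datetime) -> str:
    # Flux accepts unquoted RFC3339 timestamps; pass timezone-aware datetimes
    # (naive ones would be interpreted as local time by astimezone).
    return dt.astimezone(timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ")


def flux_downsample_query(bucket: str, measurement: str, field: str,
                          start: datetime, stop: datetime, rows: int) -> str:
    """Build a Flux query asking InfluxDB for about `rows` windowed means."""
    if rows <= 0 or stop <= start:
        raise ValueError("need rows > 0 and stop > start")
    span_s = (stop - start).total_seconds()
    every_s = max(1, int(span_s // rows))  # window width in whole seconds
    return (
        f'from(bucket: "{bucket}")\n'
        f'  |> range(start: {_rfc3339(start)}, stop: {_rfc3339(stop)})\n'
        f'  |> filter(fn: (r) => r._measurement == "{measurement}" and r._field == "{field}")\n'
        f'  |> aggregateWindow(every: {every_s}s, fn: mean, createEmpty: false)'
    )


q = flux_downsample_query("sensors", "machine", "temperature",
                          datetime(2024, 1, 1, tzinfo=timezone.utc),
                          datetime(2024, 1, 2, tzinfo=timezone.utc), 1440)
print(q)
```

Because the window width is rounded down to whole seconds, the result can contain slightly more rows than requested; specifying an interval directly, as the ThingShape also allows, corresponds to passing a fixed `every` duration.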


Great post Rocko! Thank you for this - I will certainly implement this as a part of a demo/knowledge sharing session that I'm working on right now.

Also, great comments from everyone. I think there is no single right answer here. Normally we want to get only the specific data required in the first place, but here we clearly see a variety of differing needs in different parts of the solution landscape.

FYI - I made a short video showing the concept that @JL_9663190 mentioned (but with InfluxQL instead of Flux).