On loop execution speed

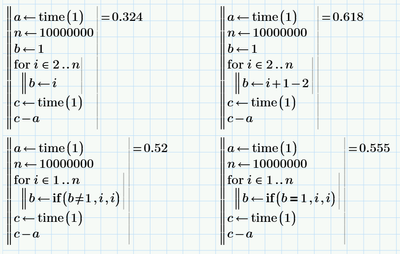

In another thread the execution speed of loops was mentioned as a side issue. That warranted some testing.

It turns out that using an if-construct inside the loop to handle the first index, instead of assigning that value before the loop, makes the loop take almost twice as long ... if the loop just assigns a single value to a parameter. If the loop body is even moderately complex, the effect of the if-construct soon disappears into the noise.

Another question raised was the relative speed of the two branches of the if-construct. In this simple test the difference is minimal. The random variation is much larger than the difference, but on average the result below seems to be about right.

Another interesting thing, which doesn't show below, is the effect of multithreading. These examples are likely about as badly suited to multithreading as possible, and the results reflected that: with multithreading on, the overhead roughly quadrupled each execution time compared with the times shown, which were measured with it off.
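For readers without the worksheet, here is a rough Python sketch of the two loop shapes compared in this post. It is only an illustration of the structure, not a translation of the Mathcad programs in the image; the function names and the values 1 and 2 are made up, and Python timings will not match Prime's.

```python
import timeit


def fill_with_if(n):
    """Handle the first index with an if-test inside the loop."""
    v = [0] * n
    for i in range(n):
        if i == 0:          # tested on every pass, true only once
            v[i] = 1
        else:
            v[i] = 2
    return v


def fill_first_outside(n):
    """Assign the first element before the loop, then loop over the rest.

    Requires n >= 1.
    """
    v = [0] * n
    v[0] = 1
    for i in range(1, n):
        v[i] = 2
    return v


if __name__ == "__main__":
    n = 200_000
    for fn in (fill_with_if, fill_first_outside):
        # Take the minimum of several repeats to reduce the influence
        # of background load on the measurement.
        t = min(timeit.repeat(lambda: fn(n), number=1, repeat=5))
        print(f"{fn.__name__}: {t:.4f} s")
```

Both functions return the same vector; only the place where the first index is handled differs, which is the one thing the test tries to isolate.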

Labels: Programming

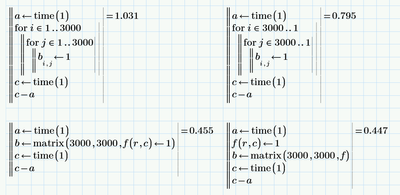

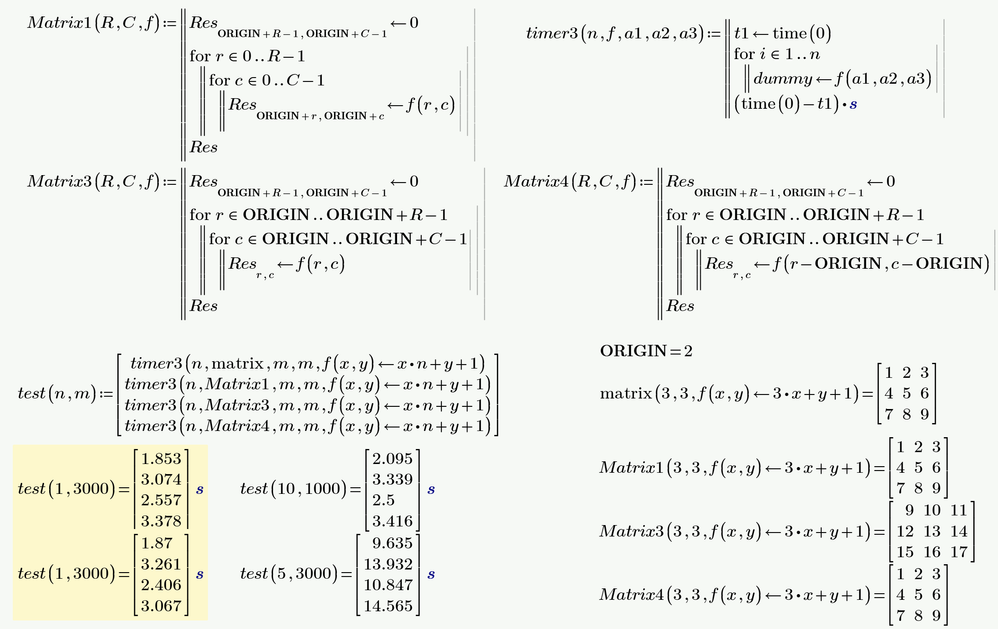

I couldn't resist: similar samples of matrix generation. Defining the matrix in a dual loop starting from the top left does take longer than starting from the bottom right, but the difference is relatively small. I expected more. On the other hand, using the built-in matrix function instead of a dual loop was faster, as I expected, but it still took about half as long as the dual loop. The loops fared rather well, in my opinion. If the matrix values required any real calculation, the difference would likely be insignificant.

In this case multithreading worked a bit differently. The first two clocked relatively consistently at a bit over 2 seconds each; the second may still have been slightly faster. The last two were interesting, though. The time for the first of them varied from about 0.6 s to somewhat over 1 s. The second was much faster: in some cases it took roughly the same time as without multithreading, and the maximum was maybe 0.7 s. I didn't expect that kind of difference. Another thing I didn't expect is that, on average, every third run of the latter two produced an error when using multithreading. There are obviously some bugs in the code.

To be fair you should also let the loop-programs call a function f rather than assigning a constant 1.

I also guess that the function should in some way use its arguments to avoid the effects of some sort of code optimization.

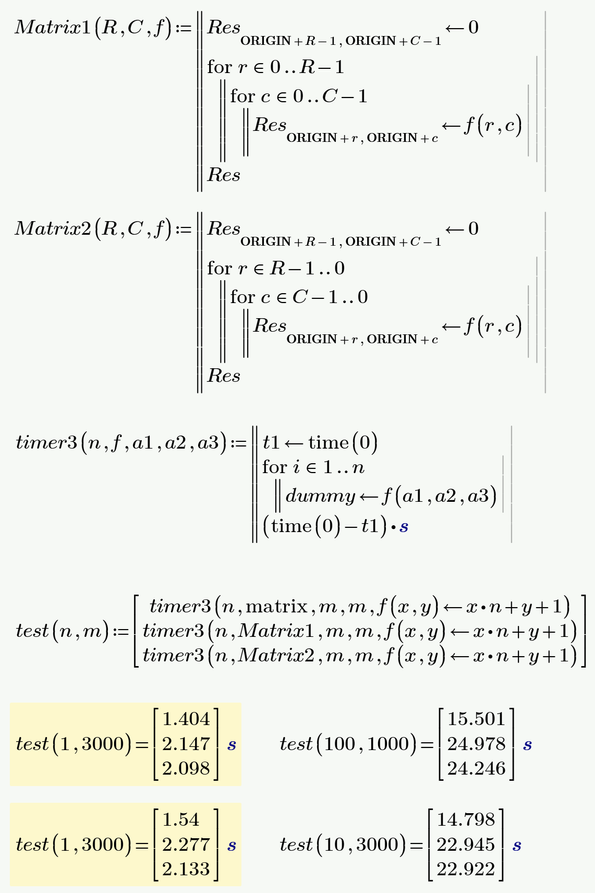

I made tests by defining user-written Matrix functions which mimic what the built-in matrix function does.

I guess if we create the whole matrix before the loops by assigning any value to the last element, there should be no significant difference whether we loop up or down.

My tests showed that

1) your machine is faster than mine 😉

2) timing is quite unreliable or there is a large random variation

3) the built-in matrix function is not as much faster as I thought!

It's surprising to see that, even though the matrix is pre-created, the Matrix2 function seems to be (very slightly) faster.

All timing was done with multithreading turned off as I won't trust any timing done with the time() function with multithreading on.

Nonetheless I tried with multithreading turned on and when I tried to recalculate the whole sheet, all four regions failed because of "overflow or infinite recursion". Letting them evaluate one after the other worked and the results were similar to the ones without multithreading:

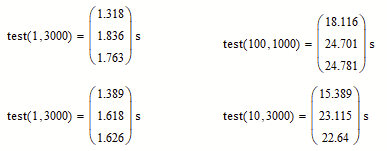

BTW, I also tried the very same in real Mathcad (V.15). Here are the results:

Prime 9 and Mathcad 15 files attached

Interesting how minor things affect calculation time significantly.

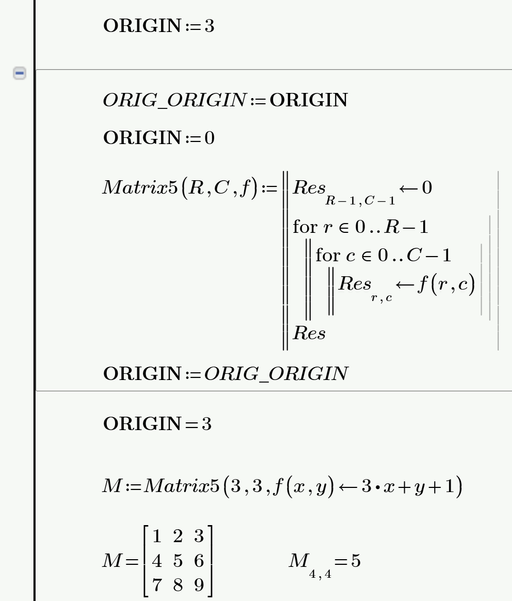

Adding ORIGIN (as in Matrix1) or subtracting it (as in Matrix4) costs a lot of calculation time.

So Matrix3 is much closer in calculation time to the other two. Unfortunately, Matrix3 does not exactly duplicate what the built-in matrix() function does, as can be seen in the example with ORIGIN set to 2.
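A minimal Python sketch of why dropping the ORIGIN arithmetic changes the result. It assumes that the built-in matrix(M, N, f) always evaluates f at zero-based indices regardless of ORIGIN. The two functions are hypothetical stand-ins, not the Matrix1 to Matrix4 programs from the worksheet (which are only visible in the image), and a dict keyed by (row, column) imitates an array whose first index is ORIGIN.

```python
def matrix_with_offset(rows, cols, f, origin):
    """Evaluate f at zero-based (i, j) and store at (i + origin, j + origin).

    This mimics the assumed behaviour of the built-in function and costs
    two extra additions per element.
    """
    m = {}
    for i in range(rows):
        for j in range(cols):
            m[(i + origin, j + origin)] = f(i, j)
    return m


def matrix_no_offset(rows, cols, f, origin):
    """Loop directly over the stored indices and pass them to f unchanged.

    No ORIGIN arithmetic inside the loop, but f sees shifted arguments
    whenever origin is not 0.
    """
    m = {}
    for i in range(origin, origin + rows):
        for j in range(origin, origin + cols):
            m[(i, j)] = f(i, j)
    return m


def f(i, j):
    """Example element function that depends on both indices."""
    return 10 * i + j
```

With `origin=0` the two functions return identical matrices. With `origin=2` the first element is `f(0, 0)` in the offset version but `f(2, 2)` in the version without the offset, which is presumably the kind of mismatch the ORIGIN = 2 example shows.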

I'm not sure it is such a surprise. The simple addition and subtraction in my first examples had quite an effect on the timing. So it could be just showing the time taken by that calculation and no additional increase.

That also makes me wonder what the natural ORIGIN is and whether it affects the results - that is, is there a penalty for the invisible origin adjustment?

In any case, I found that using lookup functions to choose between some (as in three or four) strings and replacing them with numbers was about 4...5 times slower than doing it with nested if-functions ... and that in turn was about 4 times slower than having a number there in the first place. Some editing to do...

> That also makes me wonder what the natural ORIGIN is and whether it affects the results - that is, is there a penalty for the invisible origin adjustment?

The default value for ORIGIN sure is zero and the built-in functions are written just with that value in mind, I guess.

If you'd like to write a faster function that avoids adding or subtracting ORIGIN, you can mimic this by saving the current value of ORIGIN in a variable, setting ORIGIN to zero, defining your function, and then setting ORIGIN back to its former value.

@Werner_E wrote:

> To be fair you should also let the loop-programs call a function f rather than assigning a constant 1.
>
> I also guess that the function should in some way use its arguments to avoid the effects of some sort of code optimization.

I didn't think of that. I just tried to eliminate everything else besides what I wanted to "measure".

> I guess if we create the whole matrix before the loops by assigning any value to the last element, there should be no significant difference whether we loop up or down.
>
> My tests showed that
>
> 1) your machine is faster than mine 😉
>
> 2) timing is quite unreliable or there is a large random variation
>
> 3) the built-in matrix function is not as much faster as I thought!

That's why I did it both ways - one of the ways had to re-size the matrix multiple times. A long vector would have done it even more times.
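To make the resizing argument concrete, here is a toy Python model that counts how often a matrix has to grow when it is filled from the top left versus the bottom right. It assumes that every assignment outside the current bounds triggers one reallocation; whether Prime actually works this way internally is a guess, and the `GrowingMatrix` class is purely hypothetical.

```python
class GrowingMatrix:
    """Toy matrix that grows on assignment and counts its reallocations."""

    def __init__(self):
        self.rows = 0
        self.cols = 0
        self.data = []
        self.resizes = 0

    def set(self, i, j, value):
        new_rows = max(self.rows, i + 1)
        new_cols = max(self.cols, j + 1)
        if (new_rows, new_cols) != (self.rows, self.cols):
            # Allocate a larger zero-filled matrix and copy the old cells.
            grown = [[0] * new_cols for _ in range(new_rows)]
            for r in range(self.rows):
                grown[r][:self.cols] = self.data[r]
            self.data = grown
            self.rows, self.cols = new_rows, new_cols
            self.resizes += 1
        self.data[i][j] = value


def fill_top_left(n):
    """Fill an n x n matrix starting at (0, 0); the matrix grows as it goes."""
    m = GrowingMatrix()
    for i in range(n):
        for j in range(n):
            m.set(i, j, 1)
    return m


def fill_bottom_right(n):
    """Fill starting at (n-1, n-1); the first assignment sizes the matrix."""
    m = GrowingMatrix()
    for i in range(n - 1, -1, -1):
        for j in range(n - 1, -1, -1):
            m.set(i, j, 1)
    return m
```

In this model the top-left fill grows the matrix 2n - 1 times, while the bottom-right fill grows it once. A long vector filled from index 0 would grow on every single assignment, which matches the remark above.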

I found the same about timing. It obviously depends on the other processes running on the machine and, I suspect, on what the window manager in Prime happens to be doing. That's why an average of 10 or so runs would be much better. The relatively slow speed of the built-in matrix function was indeed a surprise.
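The idea of averaging several runs can be sketched in Python as follows. This is only an illustration of the measuring approach, not something that can be pasted into a worksheet; `time_runs` and `summarize` are made-up helper names.

```python
import statistics
import time


def time_runs(fn, runs=10):
    """Return the wall-clock duration in seconds of each of `runs` calls."""
    durations = []
    for _ in range(runs):
        start = time.perf_counter()
        fn()
        durations.append(time.perf_counter() - start)
    return durations


def summarize(durations):
    """Report mean, spread and minimum so single outliers stand out."""
    return {
        "mean": statistics.mean(durations),
        "stdev": statistics.stdev(durations) if len(durations) > 1 else 0.0,
        "min": min(durations),
    }


if __name__ == "__main__":
    result = summarize(time_runs(lambda: sum(range(100_000)), runs=10))
    print(result)
```

Reporting the spread next to the mean shows directly whether a difference between two variants is larger than the run-to-run variation.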

> It's surprising to see that, even though the matrix is pre-created, the Matrix2 function seems to be (very slightly) faster.

That is surprising indeed ... even though the difference is minimal as you wrote.

> All timing was done with multithreading turned off as I won't trust any timing done with the time() function with multithreading on.
>
> Nonetheless I tried with multithreading turned on and when I tried to recalculate the whole sheet, all four regions failed because of "overflow or infinite recursion". Letting them evaluate one after the other worked and the results were similar to the ones without multithreading:
>
> BTW, I also tried the very same in real Mathcad (V.15). Here are the results:
>
> Prime 9 and Mathcad 15 files attached

Makes you really trust the multithreading, doesn't it!

There's not that much difference between the Mathcad 15 and Prime 9 results. That makes me wonder what causes the huge difference between the two programs otherwise. It could be partly the window manager, but it seems to me that the worst culprit is how Prime tries to recalculate the entire document every time you make a change. When I'm editing in the middle of a document, that can generate a long backlog of updates for the engine, and the backlog keeps growing when the same thing or something else is changed again. The situation is even worse if the edit creates an error, as those seem to take much longer to handle. Mathcad 15 didn't try to calculate beyond the bottom of the visible page, so there was never that much to recalculate, and even if errors occurred they didn't take that long either, as there were just a few of them.

Modern software development relies heavily on already existing frameworks and toolboxes. This allows more convenient, faster (and cheaper) development at the cost of efficiency and calculation speed.

I also suspect that PTC gives the development crew significantly fewer resources than Mathsoft gave theirs.

Development progressing at a snail's pace, slow response to user input, a lot of features still missing compared to Mathcad, the catastrophic implementation of the third-party Chart Component, and then the unacceptable implementation of 3D plots all seem to be indicators of that. I don't think the development crew is just made up of incompetent, unwilling, useless people. They certainly do their best within the limits given to them, but Prime is just an insignificant, unimportant little fish in the PTC universe...