Enable compression in Tomcat to speed up network performance in ThingWorx

Large responses can cause slow response times. Some queries produce very large response files, e.g. calling a ThingWorx service that returns a very large result set as a JSON file.

These massive files have to be transferred over the network and require additional bandwidth - for each and every call. The more data is transferred, the more time is spent on the network and the bigger the impact on performance can be. Imagine transferring tens or hundreds of MB per service call - over and over again.

To reduce the bandwidth used, compression can be activated. Instead of transferring MBs per service call, the server only has to transfer a couple of KB per call (best-case scenario). This needs to be configured at the Tomcat level. Some information is available in the official Tomcat documentation at https://tomcat.apache.org/tomcat-8.5-doc/config/http.html

Search for the "compression" attribute.
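To get a feel for how well a text-based response can shrink, here is a minimal Python sketch. The result set is made up (the field names and row count are assumptions, not taken from a real ThingWorx service), and it uses Python's own gzip module rather than Tomcat, so the numbers are only indicative.

```python
import gzip
import json

# Hypothetical result set: 5,000 rows with repeating keys, similar in shape
# to what a service returning a table as JSON might produce.
rows = [
    {"name": f"Sensor{i % 50}", "temperature": 20 + (i % 15), "humidity": 40 + (i % 30)}
    for i in range(5000)
]
payload = json.dumps({"rows": rows}).encode("utf-8")

# gzip is the same encoding a server typically applies to HTTP responses.
compressed = gzip.compress(payload)

ratio = len(payload) / len(compressed)
print(f"uncompressed: {len(payload):>8} bytes")
print(f"gzip:         {len(compressed):>8} bytes")
print(f"ratio:        {ratio:.1f}x")

# Decompressing restores the exact original bytes.
assert gzip.decompress(compressed) == payload
```

Repetitive JSON like this compresses very well because the same keys and similar values occur in every row. Real service results will behave differently depending on how varied the data is.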

Gzip compression

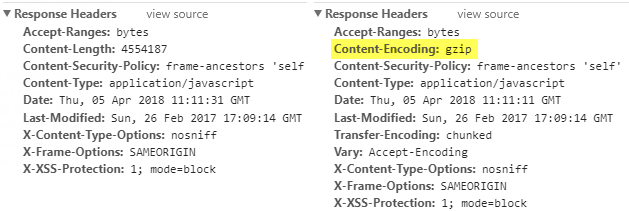

Tomcat usually compresses content with gzip. To verify whether a certain response is in fact compressed, the browser's Developer Tools or Fiddler can be used. The Response Headers usually mention the compression type if the content is compressed:

Left: no compression

Right: compression on Tomcat level
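The same check can be scripted instead of done in the browser. The following is a rough sketch using only the Python standard library. The function names are my own, and the URL and any authentication headers you pass in depend on your installation.

```python
import urllib.request


def describe_encoding(headers):
    """Return the Content-Encoding of a response, or "none" if it is absent."""
    # Header names are case-insensitive, so normalise before the lookup.
    normalised = {key.lower(): value for key, value in headers.items()}
    encoding = normalised.get("content-encoding", "").strip().lower()
    return encoding if encoding else "none"


def fetch_encoding(url, extra_headers=None):
    """Request `url` with gzip allowed and report how the body was encoded."""
    request = urllib.request.Request(url, headers=extra_headers or {})
    # Without this header the server has no reason to compress the response.
    request.add_header("Accept-Encoding", "gzip")
    with urllib.request.urlopen(request) as response:
        return describe_encoding(dict(response.headers.items()))
```

Calling fetch_encoding with the URL of a resource on your own server (plus whatever authentication headers it needs, passed via extra_headers) should return gzip once compression is active on that Connector, and none otherwise. Keep in mind that responses below the minimum size are not compressed, so test with a reasonably large response.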

Not so straightforward - network vs. compression time trade-off

There is, however, a pitfall with compression on the Tomcat side.

Each response takes additional time and resources (like CPU) to compress on the server and to decompress on the client. Especially for small files this might be unnecessary overhead, as compressing might take longer than just transferring a couple of uncompressed KB.

In the end it's a trade-off between network speed and the speed of compressing and decompressing response files on server and client. With the compressionMinSize attribute a threshold size can be set to find the best balance between compression and bandwidth.

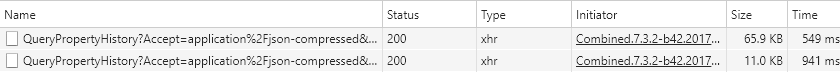

This trade-off can be clearly seen (for small content) here:

While the Size of the content shrinks, the Time increases.

For larger content files, however, the Time will slightly increase as well due to the compression overhead, whereas the Size can potentially drop by a massive factor - especially for text-based files.

The test above was performed on a local virtual machine, which takes most of the network-related causes of performance issues out of the picture - therefore the overhead in Time is only a couple of milliseconds for the compression / decompression.

The default for compressionMinSize is 2048 bytes.
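Why a minimum size makes sense can be illustrated with a few lines of Python. This is a simplified stand-in for the threshold idea, not Tomcat's actual implementation. The helper name and the sample payloads are made up for the example.

```python
import gzip

DEFAULT_MIN_SIZE = 2048  # bytes, the default mentioned above


def gzip_if_worthwhile(body, min_size=DEFAULT_MIN_SIZE):
    """Compress `body` only when it is at least `min_size` bytes long.

    Simplified model of a size threshold; a real server also looks at
    things like the MIME type and the client's Accept-Encoding header.
    """
    if len(body) < min_size:
        return body, False
    return gzip.compress(body), True


# Hypothetical payloads: a tiny status response and a large, repetitive one.
small = b'{"result": "ok"}'
large = b'{"temperature": 21.5, "humidity": 48}' * 2000

for label, body in (("small", small), ("large", large)):
    sent, was_compressed = gzip_if_worthwhile(body)
    forced = gzip.compress(body)  # what gzip would produce regardless of size
    print(f"{label}: original={len(body)} sent={len(sent)} "
          f"compressed={was_compressed} forced_gzip={len(forced)}")
```

For the tiny payload, forcing gzip actually makes the response bigger, because the gzip header and trailer alone add 18 bytes. The large payload shrinks dramatically. The threshold keeps the server from spending CPU time on responses where there is nothing to gain.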

High potential performance improvement

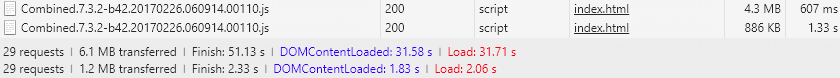

Looking at Combined.js, the content size can be reduced significantly, from 4.3 MB to only 886 KB.

For my simple Mashup showing a chart with Temperature and Humidity this also decreases the total load time from 32 to 2 seconds and the content size from 6.1 MB to 1.2 MB!

This decreases load time and size by factors of about 16 and 5 respectively - the total time until the page finished rendering decreased by a factor of almost 22 (for this particular use case)!

Configuration

To configure compression, open Tomcat's server.xml file.

In the <Connector> definitions add the following:

compression="on" compressibleMimeType="text/html,text/xml,text/plain,text/css,text/javascript,application/javascript,application/json"

This will use the default compressionMinSize of 2048 bytes.

In addition to the default MIME types I've also added application/json to compress ThingWorx service call results.

This needs to be configured for all Connectors that users access - e.g. for both the HTTP and HTTPS connectors. For testing purposes I have an HTTPS connector with compression while HTTP is running without it.
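Since it is easy to miss a Connector, a small script can list which ones have compression switched on. The sketch below parses a trimmed-down, hypothetical server.xml that mirrors my test setup (HTTP without, HTTPS with compression). The ports and the other attributes are placeholders, and a real file contains many more elements.

```python
import xml.etree.ElementTree as ET

# Hypothetical, trimmed-down server.xml; ports and other attributes are
# placeholders and will differ in a real installation.
SAMPLE_SERVER_XML = """
<Server>
  <Service name="Catalina">
    <Connector port="8080" protocol="HTTP/1.1" redirectPort="8443" />
    <Connector port="8443" protocol="HTTP/1.1" SSLEnabled="true"
               compression="on"
               compressibleMimeType="text/html,text/xml,text/plain,text/css,text/javascript,application/javascript,application/json" />
  </Service>
</Server>
"""


def compression_report(server_xml):
    """Map each Connector port to whether compression is switched on."""
    root = ET.fromstring(server_xml)
    report = {}
    for connector in root.iter("Connector"):
        port = connector.get("port", "?")
        # A Connector without the attribute is treated as "off".
        setting = connector.get("compression", "off").lower()
        report[port] = setting != "off"
    return report


for port, enabled in compression_report(SAMPLE_SERVER_XML).items():
    print(f"Connector on port {port}: compression {'on' if enabled else 'OFF'}")
```

To check a real installation, read the contents of your own server.xml into a string and pass it to compression_report instead of the sample.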

Conclusion

If possible, enable compression to speed up content download for the client.

However, there are some scenarios where compression is actually not a good idea - e.g. when using a WAN accelerator or other network components that usually bring their own content compression. Enabling it in Tomcat as well not only adds unnecessary overhead but also means the content is compressed twice, which might lead to errors on the client side when decompressing the content.

Compression helps most with large responses, where it can noticeably reduce the impact on performance.

As compressing and decompressing adds some overhead, the minimum size limit (compressionMinSize) can be experimented with to find the optimal compromise between network time and compression time.

Great post.

I thought the correct attribute in server.xml is "compressibleMimeType" but "compressableMimeType", the one you've used in your post, seems to work as well...

Thanks...

yeah - the documentation says compressibleMimeType but compressableMimeType is something you will see quite often in tutorials on the web. I've tested with both and both are working - seems like Tomcat has implemented it in a way that's tolerant for typos 🙂

Agreed! This should be added to the list of best practices to consider - if such a list existed.
