Smoothing Large Data Sets

Purpose

In this post, learn how to smooth large data sets down to a size that Mashups can render and process more easily. Note that the Time Series Chart widget is limited to loading 8,000 points (hard-coded). Rendering more points than this is almost never necessary or beneficial, given that the human eye can only discern so many points and the average monitor can only render so many pixels. Reducing large data sources through smoothing is a recommended best practice for ThingWorx, and for data analysis in general.

To show how this is done, there are sample entities provided which can be downloaded and imported into ThingWorx. These demonstrate the capacity of ThingWorx to reduce tens of thousands of data points based on a "smooth factor" live on Mashups, without much added load time required. The tutorial below steps through setting these entities up, including the code used to generate the dummy data.
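As a rough illustration of how the 8,000-point chart limit relates to the smooth factor, the sketch below estimates the smallest factor that keeps a query result under that limit. It is plain JavaScript rather than a ThingWorx service, and the function name `minSmoothFactor` and its `maxPoints` parameter are hypothetical; they are not part of the sample entities.

```javascript
// Hypothetical helper (not part of the sample entities): smallest smooth
// factor that keeps the number of averaged points at or below maxPoints,
// for example 8,000 for the Time Series Chart widget.
function minSmoothFactor(rowCount, maxPoints) {
    if (maxPoints <= 0) {
        throw new Error("maxPoints must be positive");
    }
    // Each output point averages up to `factor` input rows, so the output
    // holds ceil(rowCount / factor) points.
    return Math.max(1, Math.ceil(rowCount / maxPoints));
}
```

For example, 50,000 logged rows would need a factor of at least 7 (50,000 / 8,000 = 6.25, rounded up), which leaves 7,143 averaged points.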

Smoothing the Data on Mashups

- Create a Value Stream for storing the historical data.

- Create a Data Shape for use in the queries. The fields should be:

- TestProperty - NUMBER

- timestamp - DATETIME

- Create a Thing (TestChartCapacityThing) for simulating property updates and therefore Value Stream updates. There is one property:

- TestProperty - NUMBER - not persistent - logged

- The custom query service on this Thing (QueryNamedPropertyHistory) will have the logic for smoothing the data. Essentially, many points are averaged into one point, reducing the overall size, before the data is returned to the Mashup. Unfortunately, there is no built-in (OOTB) service that does this. The code is below (input parameters: to - DATETIME; from - DATETIME; SmoothFactor - INTEGER):

// This is just for passing the property name into the query
var infotable = Resources["InfoTableFunctions"].CreateInfoTable({infotableName: "NamedProperties"});
infotable.AddField({name: "name", baseType: "STRING"});
infotable.AddRow({name: "TestProperty"});

var queryResults = me.QueryNamedPropertyHistory({
    maxItems: 9999999,
    endDate: to,
    propertyNames: infotable,
    startDate: from
});

// This will be filled in below, based on the smoothing calculation
var result = Resources["InfoTableFunctions"].CreateInfoTable({infotableName: "SmoothedQueryResults"});
result.AddField({name: "TestProperty", baseType: "NUMBER"});
result.AddField({name: "timestamp", baseType: "DATETIME"});

// If there is no smooth factor, then just return everything
if (SmoothFactor === 0 || SmoothFactor === undefined || SmoothFactor === "") {
    result = queryResults;
} else {
    // Increment by smooth factor
    for (var i = 0; i < queryResults.rows.length; i += SmoothFactor) {
        var sum = 0;
        var count = 0;

        // Increment by one to average all points in this interval
        for (var j = i; j < (i + SmoothFactor); j++) {
            if (j < queryResults.rows.length) {
                // Add each property value in the interval to the running sum
                sum += queryResults.getRow(j).TestProperty;
                count++;
            }
        }

        // Use count because the last interval may not equal smooth factor
        var average = sum / count;
        result.AddRow({TestProperty: average, timestamp: queryResults.getRow(i).timestamp});
    }
}

- Create a Timer for updating the property values on the Thing. The Timer should subscribe to itself, containing this code (ensure it is enabled as well):

var now = new Date();
if (now.getMilliseconds() % 3 === 0) {
    // Randomly reset the number to simulate outliers
    Things["TestChartCapacityThing"].TestProperty = Math.random() * 100;
} else if (Things["TestChartCapacityThing"].TestProperty > 100) {
    Things["TestChartCapacityThing"].TestProperty -= Math.random() * 10;
} else {
    Things["TestChartCapacityThing"].TestProperty += Math.random() * 10;
}

- Don't forget to set the runAsUser in the Timer configuration. To generate many property values, set the updateRate to a small value, like 10 milliseconds. Disable the Timer after many thousands of property values are logged in the Value Stream.

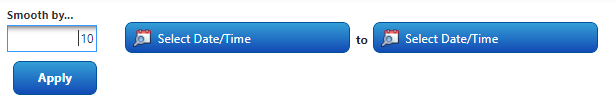

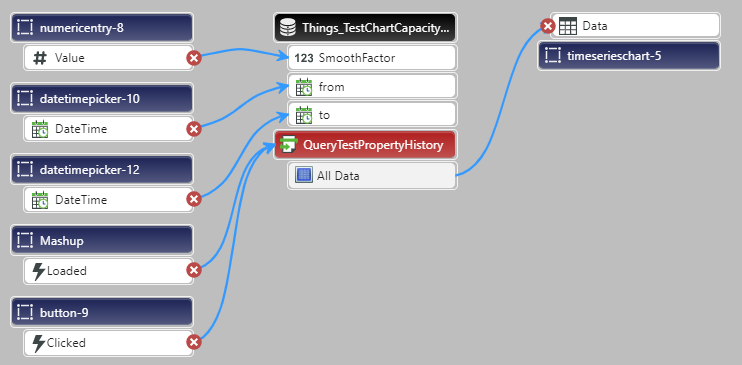

- Create a Mashup for displaying the property data and the capacity of the query to smooth the data. The Mashup should run the service created in step 4 on load. The service input comes from widgets on the Mashup:

Bindings:

- Place a Time Series Chart widget at the bottom of the Mashup layout. Bind the data from the query to the chart.

- View the Mashup. Note the difference in the data...

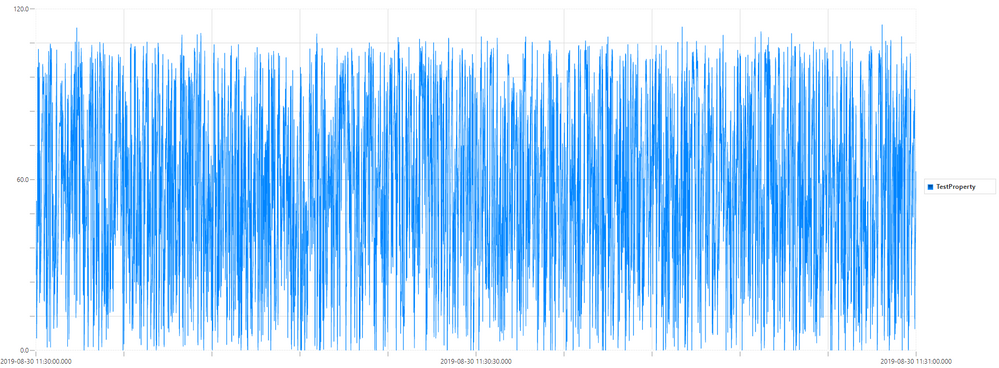

All points in one minute:

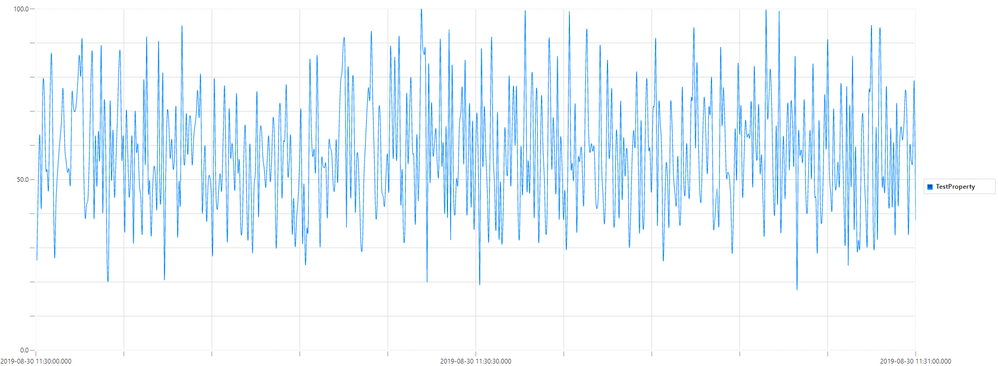

And a smooth factor of 10 in one minute:

Note that the outliers still appear, and the peaks are much easier to see. With fewer points, trends become easier to spot and the data is easier to understand. To monitor the specific nature of the outliers, use alerts and other types of displays. Because the query service already averages each interval (whose size is given by the smooth factor), alternative forms of data reduction could use a different statistic for each interval, such as the median, min, or max, as needed for the specific use case. Displaying several of these options together gives an even more detailed view.
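To make the alternative reductions concrete, here is a minimal sketch in plain JavaScript (not a ThingWorx service) that reduces each interval to one point using a selectable statistic. The names `reducers` and `reduceIntervals` are hypothetical, and `rows` is assumed to be a plain array of `{TestProperty, timestamp}` objects rather than an InfoTable. Unlike the service code, it also treats a missing or non-positive smooth factor as "no smoothing".

```javascript
// Hypothetical statistics, one per reduction type.
var reducers = {
    mean: function (values) {
        var sum = 0;
        for (var i = 0; i < values.length; i++) {
            sum += values[i];
        }
        return sum / values.length;
    },
    min: function (values) { return Math.min.apply(null, values); },
    max: function (values) { return Math.max.apply(null, values); },
    median: function (values) {
        // Sort a copy numerically, then take the middle value (or the
        // average of the two middle values for an even count).
        var sorted = values.slice().sort(function (a, b) { return a - b; });
        var mid = Math.floor(sorted.length / 2);
        return sorted.length % 2 ? sorted[mid] : (sorted[mid - 1] + sorted[mid]) / 2;
    }
};

// Reduce every interval of `smoothFactor` rows to a single row.
// Assumption: rows is an array of {TestProperty: number, timestamp: any}.
function reduceIntervals(rows, smoothFactor, statistic) {
    var reduce = reducers[statistic];
    if (!reduce) {
        throw new Error("Unknown statistic: " + statistic);
    }
    // Missing or non-positive factor: return a copy of everything.
    if (!smoothFactor || smoothFactor < 1) {
        return rows.slice();
    }
    var step = Math.floor(smoothFactor);
    var result = [];
    for (var i = 0; i < rows.length; i += step) {
        var values = [];
        // The last interval may hold fewer than `step` rows.
        for (var j = i; j < Math.min(i + step, rows.length); j++) {
            values.push(rows[j].TestProperty);
        }
        // As in the service, each interval keeps its first timestamp.
        result.push({ TestProperty: reduce(values), timestamp: rows[i].timestamp });
    }
    return result;
}
```

Calling `reduceIntervals(rows, 10, "max")` alongside `reduceIntervals(rows, 10, "mean")` would give one series that preserves each interval's peak and one that shows the trend, which could then be plotted together.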

Remember, though, that the more data there is to process, the slower the Mashup will load. As usual, ensure all Mashups are load tested and that the number of end users per Mashup is considered during application design.

Have any improvements been made to rendering more data points on the charts in the latest version of ThingWorx, i.e. 9.x? That said, I agree with the point about smoothing the data points.

Yes, see this post:

But note that even though the charts can now support more points and downsampling, those points would still be transferred over the network to the client. It therefore still makes sense to reduce the number of points before they are sent out.