- Community

- ThingWorx

- ThingWorx Developers

- Why TWX Platform could not receive/queue all value...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

Why TWX Platform could not receive/queue all value streams updated by edges?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Notify Moderator

Why TWX Platform could not receive/queue all value streams updated by edges?

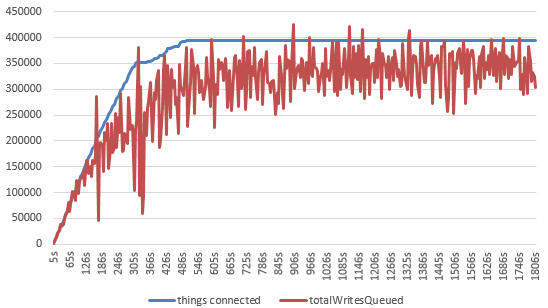

We made one edge update a temperature property increased by 1 every 5 secs. If 300k ~ 400k edges update logged temperature properties(value streams) every 5 secs concurrently, the ThingWorx Platform(TWX) could only receive/queue 85% ~ 90% of properties. When the edges' connections are established stably, We observed the 'totalWritesQueued' value of Value Stream Substem every 5 secs and computed its minus, then divided this minus by the amount of edges.

My question is, why the TWX couldn't handle 100% of properties when more than 300k edges update properties concurrently?

+ TWX System Resource:

- CPU E5-2650 v3 @ 2.30GHz with 10 cores * HT * 2-way

- CPU usage: less than 50%

- Total Memory: 128GB

- JVM Memory: 96GB

+ TWX Subsystem Configuration:

- Default except:

> Value Stream Subsystem:

' maximumBlockSize: 50,000

' maximumQueueSize: 10, 000, 000

' maximumWaitTime: 1000

' numberOfProcessingThreads: 80

+ Logs:

- Tomcat:

com.thingworx.exceptions.CouldNotConnectException: 172.21.128.30-temp13270 is not connected

java.util.concurrent.TimeoutException: Timed out APIRequestMessage [requestId: 1398634, endpointId: -1, sessionId: -1, method: POST, entityName:

172.21.128.30-temp11300, characteristic: Services, target: NotifyPropertyUpdate]

- TWX:

[L: ERROR] [O: c.t.p.p.BatchStreamEntryProcessor] [I: ] [U: SuperUser] [S: ] [T: pool-8-thread-10] Error executing batch

[L: WARN] [O: c.t.s.s.w.WSCommunicationsSubsystem] [I: ] [U: ] [S: ] [T: WsCommunication-151] Could not dispatch async service request [NotifyPropertyUpdate] :

Timed out APIRequestMessage [requestId: 1405707, endpointId: -1, sessionId: -1, method: POST, entityName: 172.18.142.102-temp540, characteristic: Services,

target:NotifyPropertyUpdate]

[L: WARN] [O: c.t.c.s.e.DispatchingServerEndpoint] [I: ] [U: ] [S: ] [T: http-nio-8080-exec-68] NULL MessageSynchronizationContext! Request either timed-out waiting

for this response, or it was received by mistake: ResponseMessage [requestId: 1401202, endpointId: 175200, sessionId: 1406274, code: STATUS_SUCCESS,

multipart: false, packet #: 0, total packets: 0]

+ QueryPropertyHistory for One Thing: NOT CONTINUOUS

temperature | timestamp |

98 | 2017-01-04 03:49:27.962 |

93 | 2017-01-04 03:49:01.006 |

91 | 2017-01-04 03:48:49.861 |

87 | 2017-01-04 03:48:26.911 |

86 | 2017-01-04 03:48:21.909 |

85 | 2017-01-04 03:48:16.907 |

79 | 2017-01-04 03:47:28.103 |

77 | 2017-01-04 03:47:17.009 |

74 | 2017-01-04 03:47:00.646 |

72 | 2017-01-04 03:46:46.981 |

70 | 2017-01-04 03:46:34.941 |

69 | 2017-01-04 03:46:29.372 |

66 | 2017-01-04 03:46:11.139 |

65 | 2017-01-04 03:46:05.810 |

63 | 2017-01-04 03:45:54.443 |

62 | 2017-01-04 03:45:48.696 |

61 | 2017-01-04 03:45:42.753 |

60 | 2017-01-04 03:45:37.495 |

58 | 2017-01-04 03:45:26.171 |

53 | 2017-01-04 03:44:57.037 |

50 | 2017-01-04 03:44:37.082 |

48 | 2017-01-04 03:44:26.089 |

46 | 2017-01-04 03:44:13.205 |

44 | 2017-01-04 03:44:03.188 |

42 | 2017-01-04 03:43:52.557 |

38 | 2017-01-04 03:43:29.961 |

33 | 2017-01-04 03:42:55.120 |

31 | 2017-01-04 03:42:44.548 |

27 | 2017-01-04 03:42:21.796 |

26 | 2017-01-04 03:42:12.657 |

25 | 2017-01-04 03:42:07.615 |

24 | 2017-01-04 03:42:02.288 |

21 | 2017-01-04 03:41:42.045 |

19 | 2017-01-04 03:41:30.359 |

18 | 2017-01-04 03:41:24.820 |

16 | 2017-01-04 03:41:13.063 |

15 | 2017-01-04 03:41:04.834 |

14 | 2017-01-04 03:40:59.453 |

13 | 2017-01-04 03:40:54.440 |

12 | 2017-01-04 03:40:49.361 |

11 | 2017-01-04 03:40:40.500 |

10 | 2017-01-04 03:40:28.502 |

9 | 2017-01-04 03:40:23.246 |

8 | 2017-01-04 03:40:15.742 |

7 | 2017-01-04 03:40:10.398 |

6 | 2017-01-04 03:40:04.640 |

5 | 2017-01-04 03:39:49.397 |

4 | 2017-01-04 03:39:38.949 |

3 | 2017-01-04 03:39:33.756 |

2 | 2017-01-04 03:39:28.749 |

1 | 2017-01-04 03:39:17.345 |

- Labels:

-

Design

- Tags:

- properties

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Notify Moderator

Are you saying that you have 300,000 - 400,000 edge devices updating the Platform, with no Connection Server, and you are wondering why the Platform cannot handle this? The Platform is not designed to handle this many edge devices on its own. You need the Connection Server for this. Here is a KCS Article on the subject. Hope this helps!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Notify Moderator

Thanks so much for your reply!

To simplify the problem, I didn't mention the Connection Server. In fact, I made 300k ~ 400k edge devices update properties via 2 ~ 3 optimized high-performance Connection Servers. I've checked that the TWX platform can handle 200k value streams sent by remote devices every 5 secs via only one Connection Server.

I really appreciate your help:-)

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Notify Moderator

Ah, so the issue can't be the fault of too many connections. You're actually not the first person to post about this, and I am pretty sure there was no resolution on the other thread. You may want to consider opening a support case for this issue. How are your properties updated? Are you sure the updates are propagating to the Platform? Are these remote properties? What are their remote bind settings?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Notify Moderator

Thanks for your advise. I've opened a support case and I'm waiting for the feedback.

>> How are your properties updated?

the code below shows updating a temperature property every 5 secs for 12 times:

ConnectedThingClient client = new ConnectedThingClient(getClientConfigurator(), null);

client.start();

if (client.waitForConnection(10000)) {

TemperatureThing thing = new TemperatureThing(thingName, sersorDescription, client, simulated, 0, tempConfig, testData);

client.bindThing(thing);

thing.loadPropertySubscriptions();

long start = System.currentTimeMillis();

while ((System.currentTimeMillis() - start) < 60000) {

for (VirtualThing vt : client.getThings().values()) {

vt.processScanRequest(); // defination described below

}

Thread.sleep(5000);

}

}

/*

* public void processScanRequest() {

* temperature_vtq.setValue(new NumberPrimitive(lastTemp)); // the 'lastTemp' is increased by 1 each time

* temperature_vtq.setQuality(QualityStatus.GOOD);

* setPropertyVTQ("temperature", temperature_vtq,true);

* updateSubscribedProperties(10000);

* }

*/

>> Are you sure the updates are propagating to the Platform?

I'm not sure whether the Platform has received all of updates. I observed 'submitedTaskCount/completedTaskCount'(in WS Execution Processing Subsystem Metrics) and 'totalWritesQueued'(in Value Stream Processing Subsystem Metrics), but neither of them reach the amount of properties egde devices should update when 30k ~ 40k devices update concurrently.

>> Are these remote properties?

Yes.

>> What are their remote bind settings?

I'm sorry that I don't know what you mean:-( What info I should supply?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Notify Moderator

Hello Ming, I suppose Tori is looking for the Data Change Type , may be i am wrong, but I would be interested to know how is the property's Data Change Type is setup - would it be correct to assume you have set it to Always? Are these simulated edge devices or real hardware? I see some timeouts in the log could indicate to the connectivity issues as well from the edge device to ThingWorx.

Streams/Value Stream are logging asynchronously, since you are pushing data at such a high rate with so many connections, would it help to have a small pause while pushing the values? That could probably allow the platform just enough time to persist the value for the purpose of reflecting it on the chart. Else in my opinion it could happen that values do get pushed but since they aren't persisted they probably won't appear when you are selecting them for display.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Notify Moderator

The property's Data Change Type is not Always, but is Value with '0' as the Change Threshold by default. They are simulated edge devices. Yes, there are timeouts log at the edge devices side too:

ERROR - com.thingworx.communications.client.things.VirtualThing: Unable To Update Subscribed Properties For 172.21.128.92-temp20738 : Timed out APIRequestMessage [requestId: 454508, endpointId: 284402, sessionId: 204915, method: POST, entityName: 172.21.128.92-temp20738, characteristic: Services, target: UpdateSubscribedPropertyValues]

ERROR - com.thingworx.communications.client.endpoints.ClientCommunicationEndpoint: Exception refilling connections was: java.util.concurrent.TimeoutException: timeout waiting for websocket handshake to complete

ERROR - com.thingworx.communications.client.connection.netty.NettyClientConnectionFactory: Exception occurred while connecting to server java.util.concurrent.TimeoutException: timeout waiting for websocket handshake to complete

ERROR - com.thingworx.communications.client.endpoints.ClientCommunicationEndpoint: Refilling connections on [endpoint [627276, ws://172.21.128.46:9003/Thingworx/WS, timeout waiting for websocket handshake to complete], uri: {}] failed : {}

ERROR - com.thingworx.communications.common.endpoints.CommunicationEndpoint: [Endpoint id: [721349, 721349-0, EP_721349], connection id: {}, connection name: {}] FAILED to remove Connection - was not found

ERROR - com.thingworx.communications.client.endpoints.ClientCommunicationEndpoint: Refilling connections on [endpoint [721349, ws://172.21.128.47:9003/Thingworx/WS, Timed out AuthRequestMessage [requestId: 11311356, endpointId: -1, sessionId: -1, method: AUTHREQUEST]], uri: {}] failed : {}

ERROR - com.thingworx.communications.client.endpoints.ClientCommunicationEndpoint: Exception refilling connections was: java.util.concurrent.TimeoutException: Timed out AuthRequestMessage [requestId: 11311356, endpointId: -1, sessionId: -1, method: AUTHREQUEST]

And there are many replicated lines at the Connection Server side as below:

ERROR i.vertx.core.net.impl.ConnectionBase - java.io.IOException: Connection reset by peer

[vert.x-eventloop-thread-3] WARN c.t.c.a.AbstractClientEndpoint - Error encountered, closing WebSocket

java.io.IOException: Connection reset by peer

at sun.nio.ch.FileDispatcherImpl.read0(Native Method) ~[na:1.8.0_101]

at sun.nio.ch.SocketDispatcher.read(SocketDispatcher.java:39) ~[na:1.8.0_101]

at sun.nio.ch.IOUtil.readIntoNativeBuffer(IOUtil.java:223) ~[na:1.8.0_101]

at sun.nio.ch.IOUtil.read(IOUtil.java:192) ~[na:1.8.0_101]

at sun.nio.ch.SocketChannelImpl.read(SocketChannelImpl.java:380) ~[na:1.8.0_101]

at io.netty.buffer.PooledUnsafeDirectByteBuf.setBytes(PooledUnsafeDirectByteBuf.java:221) ~[netty-all-4.0.33.Final.jar:4.0.33.Final]

at io.netty.buffer.AbstractByteBuf.writeBytes(AbstractByteBuf.java:898) ~[netty-all-4.0.33.Final.jar:4.0.33.Final]

at io.netty.channel.socket.nio.NioSocketChannel.doReadBytes(NioSocketChannel.java:242) ~[netty-all-4.0.33.Final.jar:4.0.33.Final]

at io.netty.channel.nio.AbstractNioByteChannel$NioByteUnsafe.read(AbstractNioByteChannel.java:119) ~[netty-all-4.0.33.Final.jar:4.0.33.Final]

at io.netty.channel.nio.NioEventLoop.processSelectedKey(NioEventLoop.java:511) ~[netty-all-4.0.33.Final.jar:4.0.33.Final]

at io.netty.channel.nio.NioEventLoop.processSelectedKeysOptimized(NioEventLoop.java:468) ~[netty-all-4.0.33.Final.jar:4.0.33.Final]

at io.netty.channel.nio.NioEventLoop.processSelectedKeys(NioEventLoop.java:382) ~[netty-all-4.0.33.Final.jar:4.0.33.Final]

at io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:354) ~[netty-all-4.0.33.Final.jar:4.0.33.Final]

at io.netty.util.concurrent.SingleThreadEventExecutor$2.run(SingleThreadEventExecutor.java:112) ~[netty-all-4.0.33.Final.jar:4.0.33.Final]

at java.lang.Thread.run(Thread.java:745) ~[na:1.8.0_101]

I'm afraid that we have to consider seriously whether we can pause while pushing in this situation....

Can the metrics 'submitedTaskCount' or 'totalWritesQueued' show how many values do get pushed?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Notify Moderator

My question is how are connecting 300K-400K devices we are trying to connect 20,000 devices through connection server and connection are dropping and even only 8000 devices are connected and others are disconnected or unbound.

We are using HAProxy and PGPool. I hope you are not using this.

Please let us know.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Notify Moderator

Hello mren,

I am in similar situation where we are pooling more than 2000 assets data on to the thingworx platform.

I am getting similar error in the .net edge with the error code 1114 .

We are using postgres as the default persistent provider and what should be my configuration for persistent provider to meet my requirement, Like thread pool etc. also if there is any other configuration to be considered, please let me know.

Thanks.