
Pro/Program Best Practices

I'm getting into a fairly complex assembly using a combination of Pro/Program and Relations. This is the first time I've gone this deep into a model using assembly-level Pro/PROGRAM, so I'd like to know if anybody has any tips or best practice advice for using both P/P and Relations together.

For instance (and my main question), is it best to write relations in the top assembly that drive features in lower-level subassemblies or parts, or is it better in the long run to pass parameters down to lower levels through the use of EXECUTE statements? I can see merit to using either way, but I'm hoping to avoid running into unforeseen problems in using one way over the other.

FYI, I'm using top-level parameters to do two things mostly: 1) Drive lower level dimensions and 2) drive lower-level conditional statements (if/then/else) within Pro/PROGRAM.

Thanks in advance

Labels: Assembly Design

Accepted Solutions

We are using EXECUTE statements exclusively in our designs.

We put all our relations at the "top level" of an assembly or a part, not buried somewhere in a feature (or, even worse, inside a sketch!).

It takes some time to build a model driven by EXECUTE statements, but you can reuse (sub)assemblies and parts much, much easier. You just duplicate your entire assembly and change the INPUT parameters...and you're done!

If you have any specific questions, let me know!

As I understand it, under the hood there is really just one big table with all of the parameter (and dimension) values. It doesn't matter which feature or set of relations is changing the parameter values, they're really all changing the same thing in the same place.

Now there can be a significant difference in behavior (and sometimes performance) depending on where your relations are placed. If you have multiple features that depend on a parameter (or dimension value), changing this value after some of the features have already regenerated will cause them to regenerate a second time. If at all possible, you want everything to only regenerate once.
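To make the regeneration-order point concrete, here is a toy Python model. It is not how Creo's regeneration engine actually works: the `Feature` class and `regen_counts` function are hypothetical, and the model simply assumes that a relation changing a parameter after a dependent feature has already regenerated costs that feature one extra regeneration.

```python
from dataclasses import dataclass, field


@dataclass
class Feature:
    """Hypothetical stand-in for a model feature, listed in regeneration order."""
    name: str
    reads: set[str] = field(default_factory=set)   # parameters the feature depends on
    writes: set[str] = field(default_factory=set)  # parameters its relations assign


def regen_counts(features: list[Feature]) -> dict[str, int]:
    """Toy count of regenerations per feature.

    Every feature regenerates once, in order. Each time a later feature's
    relations write a parameter that an earlier, already regenerated feature
    reads, the earlier feature is charged one extra regeneration."""
    counts = {f.name: 1 for f in features}
    for i, writer in enumerate(features):
        for earlier in features[:i]:
            if earlier.reads & writer.writes:
                counts[earlier.name] += 1
    return counts


# Relation placed late: EXTRUDE_1 has to regenerate twice.
late = [Feature("EXTRUDE_1", reads={"WIDTH"}), Feature("CALC", writes={"WIDTH"})]
print(regen_counts(late))   # {'EXTRUDE_1': 2, 'CALC': 1}

# Same relation placed first: everything regenerates once.
early = [Feature("CALC", writes={"WIDTH"}), Feature("EXTRUDE_1", reads={"WIDTH"})]
print(regen_counts(early))  # {'CALC': 1, 'EXTRUDE_1': 1}
```

The only difference between the two lists is where the relation that sets WIDTH sits relative to the feature that reads it.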

I am a huge fan of placing the relations that control a feature inside that specific feature. Relations inside a feature execute every time that feature is modified. This means that if you're changing a feature's dimension, you instantly see the impact of the relations every time a value changes. The behavior is not the same if the relations are located in some other feature or in the top-level model.

If there are lots of inter-connected relations/calculations that need to happen, I'll place those in one of the first features and then create parameters in that feature that subsequent features can read from to get their specific values.

Disclaimer: I've never used EXECUTE statements for anything.

Thanks for the reply, Tom. I'm with you in liking how lower-level relations keep things nice and neat (and tend to require less commenting in the top-level relations for them to make sense to another user); however, I've had problems from time to time finding exactly where the relation is that's driving a dimension. It's a give and take, for sure. For this model, I've decided to mostly go with top-level relations (aside from simple sketch-level stuff like dX = <parameter>/2, or what have you).

I see that you haven't used EXECUTE statements before, but just to clarify my question a bit for other users, my main question is about which way I should go in this situation:

Let's say I have my top-level assembly called BUILDING with a parameter called HAS_HANDLE in it. Now, there's a subassembly within BUILDING called DOOR, which may or may not include a part called HANDLE in it. I would like to use the HAS_HANDLE parameter to create an IF statement in the Pro/PROGRAM file for the DOOR assembly. I could do this one of two ways:

1) Add a duplicate parameter in DOOR, also called HAS_HANDLE.

Add a relation in BUILDING like "HAS_HANDLE:0 = HAS_HANDLE" to fill in the DOOR-level parameter.

Then, in the DOOR-level P/P, add essentially this:

```
IF HAS_HANDLE
ADD PART HANDLE
END ADD
END IF
```

2) Add to the BUILDING-level P/P:

```
EXECUTE ASSEMBLY DOOR
HAS_HANDLE = HAS_HANDLE
END EXECUTE
```

Add to the DOOR-level P/P:

```
INPUT
HAS_HANDLE YES_NO
END INPUT
...
IF HAS_HANDLE
ADD PART HANDLE
END ADD
END IF
```

(By the way, this isn't my actual example, so please just take my word for it that the hierarchy has to be arranged this way, despite it looking like HAS_HANDLE should maybe just be a parameter in the DOOR assembly)

Both do essentially the same thing, but I wonder why the EXECUTE command even exists if it's so much more cumbersome than relations. I figure there has to be some benefit to using it.
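A rough way to picture option 2 is a top-down pass in which each EXECUTE block copies values from the parent's parameters into the child's INPUT parameters. The Python below is a hypothetical toy model of that data flow (the `Model` class and `run_execute` function are illustrative, not a Creo API), and it simplifies EXECUTE assignments to plain parameter-name copies rather than full expressions.

```python
from dataclasses import dataclass, field


@dataclass
class Model:
    """Hypothetical stand-in for a part or assembly with a Pro/PROGRAM."""
    name: str
    inputs: dict[str, object] = field(default_factory=dict)  # INPUT parameters and defaults
    # EXECUTE blocks: child name -> {child INPUT parameter: parent parameter it copies}
    executes: dict[str, dict[str, str]] = field(default_factory=dict)
    children: dict[str, "Model"] = field(default_factory=dict)


def run_execute(model: Model, values: dict[str, object]) -> dict[str, dict[str, object]]:
    """Resolve parameter values top-down, one EXECUTE level at a time.

    Returns {model name: {parameter: value}} for every model that was reached."""
    resolved = {**model.inputs, **values}  # passed values override defaults
    result = {model.name: resolved}
    for child_name, mapping in model.executes.items():
        child = model.children[child_name]  # KeyError if the component is not there
        passed = {child_param: resolved[parent_param]
                  for child_param, parent_param in mapping.items()}
        result.update(run_execute(child, passed))
    return result


door = Model("DOOR", inputs={"HAS_HANDLE": False})
building = Model("BUILDING", inputs={"HAS_HANDLE": False},
                 executes={"DOOR": {"HAS_HANDLE": "HAS_HANDLE"}},
                 children={"DOOR": door})
print(run_execute(building, {"HAS_HANDLE": True})["DOOR"])  # {'HAS_HANDLE': True}
```

In this toy, a child INPUT that no EXECUTE assigns keeps its default, and a missing child component fails outright, which mirrors why every level of an EXECUTE chain has to exist and declare what it receives.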

If you have not worked with Layouts/Notebooks, check them out. Layouts (Pro/NOTEBOOK) are intended for defining parameters and relations within the notebook, which can then be declared to or undeclared from models. They are one of the top-down tools intended for managing relations and controlling other models. There is no requirement to include any geometry in a layout; it can consist of relations exclusively.

Involute Development, LLC

Consulting Engineers

Specialists in Creo Parametric

Layouts/Notebooks create dependent relationships with wherever they are declared, hampering reuse of designs in other designs. For this reason, we avoid them.

Pro/PROGRAM can be awesome for some very well structured design automations, but it has its limitations and frustrations. It is great for toggling feature logic within parts and assemblies - but can lock things up quite a bit if you start using execute statements to pass data from one level to the next (assembly top-down execution).

It really depends on what you are trying to accomplish. If you have family tables and are trying to switch configurations based on instance name - that is quite handy using variables within the Pro/PROGRAM. But often the logic / input of the changes can be a struggle.

Another HUGE problem is the "text editor" - it just is painful for editing Pro/PROGRAM - the bigger the more painful.

If you have not checked it out, look for Nitro-PROGRAM - a graphical Pro/PROGRAM editor. (Disclaimer: we make that product -- and use the heck out of it for our customers' automation projects; it saves a lot of time and headaches when debugging.)

To truly automate Creo we try to avoid all the Component/Session ID relations and pass the data directly to the model that needs it at the time using Excel. But that is a whole different subject - it really depends on what your goals are for the automation.

Hope that helps.

Dave

Tags: proprogram

"...but can lock things up quite a bit if you start using execute statements to pass data from one level to the next (assembly top-down execution)."

We have been building parametric models using EXECUTE statements for over a decade. We have never seen anything starting to "lock things up". In fact, we have increased the number of parameters which drive our assemblies to over a thousand, and still no problems.

Could you elaborate on your statement?

"Another HUGE problem is the "text editor" - it just is painful for editing Pro/PROGRAM - the bigger the more painful."

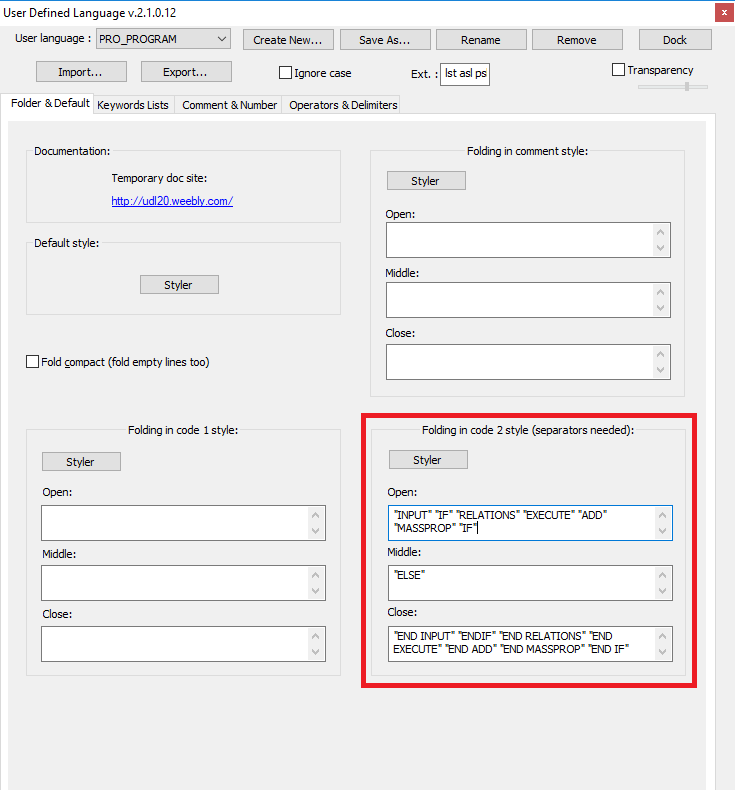

I have to agree, the standard text editor is just plain bad. That's why we recommend using Notepad++ with a User Defined Language (UDL). Check out this post: "Notepad-User-Defined-Language-files-for-Creo-Parametric-and-Pro"

We have looked at Nitro-PROGRAM before, but for now we are sticking to Notepad++.

I agree on Notepad being rough for text editing, and I actually started looking into incorporating Notepad++ into this process yesterday, since I'm already familiar with it from Python coding. It'd definitely be helpful to be able to collapse the ADD/END ADD sections so I don't have to scroll endlessly trying to find the feature I'm looking for. Nitro-PROGRAM looks like a cool little app to use for this if I ever get into a more complex project. I'd never heard of it, so that was very good marketing targeting, if I do say so myself.

This is what we use...

This is excellent, thank you. I think I'm going to mark your first reply as the solution, because I've decided to use this method for this particular project, but all replies were helpful and I may end up using the Notebook and other suggestions at some point.

To answer your question(s) - I think it is important to put things in context.

We also started using Pro/PROGRAM over a decade ago and decided pretty quickly to avoid Execute Statements for multiple reasons. Execute Statements are great if you have a very stable configuration with well-defined inputs and logic structure. But we often find that when you go 100% in that direction, things typically bloat with the number of parameters and the logic needed to pass things through the structure to populate changes as the complexity increases.

Fundamentally speaking, unless you have completely and thoroughly planned for it, Execute Statements "lock up" your design, limiting flexibility and reusability. Problems with them are also very hard to track down and debug when they occur (buried knowledge that is not visible or obvious within the model tree), and parameter execution problems from one level to the next often produce only a warning rather than a full error.

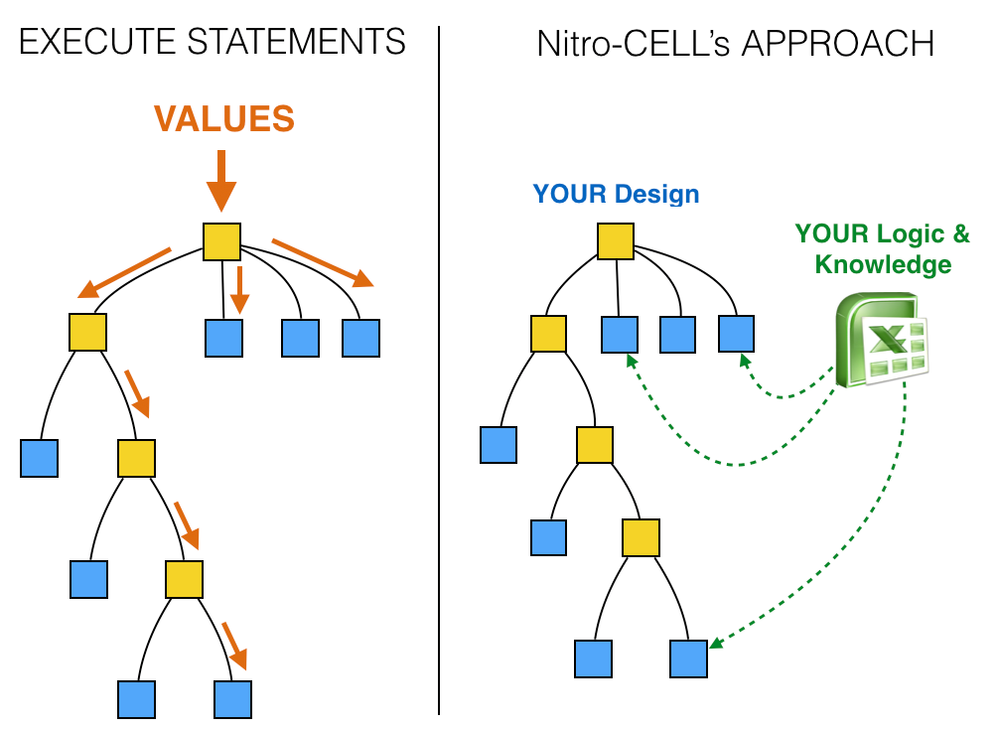

Here is a visual explanation of what I am talking about for what a structure with Execute Statements looks like (data flow from top to the target models) vs how we have been using Excel to avoid all that structure to manage the flow:

Execute Statements were one of the main reasons we wanted to target models directly for change from Excel (via Nitro-CELL -- disclaimer: we make that!) rather than build a cascading structure of logic to collect things very high up and pass them down to the lowest level that requires the modification(s). We felt that just hitting the models we needed to change was much easier to implement, understand and manage over the long term. Another key motivation was to avoid the obscure SESSION_ID/COMPONENT_ID assembly relations often found in conventional Top-Down Design approaches, which also kind of "lock things up", because it is hard to track down <param>:<component_id> relations efficiently when debugging (it is not very obvious or intuitive what is being changed or where it is in the structure).

We do a lot of dynamic design and configuration with Creo. Early on we found that the number of variables and the limitations of Pro/PROGRAM caused us to hit the wall quickly (e.g. it does some things well; other things create a bit of liability). Pro/PROGRAM is awesome for feature-level configuration of things (e.g. turn this on - that off - under certain logic conditions), and this applies to both parts and assemblies. But add EXECUTE statements, and all the requirements that go with them, to the stack, and things can get pretty complex very quickly.

To use Execute Statements correctly, you must always satisfy the expectation that something exists for them to execute/act upon... along with the required parameters to pass down... If the configuration of your design changes (e.g. completely different parts / sub-assemblies), you have to add logic to anticipate that... To keep things clean, it also requires that the parameters being passed down one or multiple levels exist in the components you are using (or replacing) to ensure things execute properly -- errors are not obvious when they occur, other than visual results/problems that don't make sense (e.g. syncing up bolt hole patterns across levels that are far away from each other is a good example of something that should be simple but is often complex when there is a great separation between the levels of execution).
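One way to catch these mismatches before regenerating is a small lint script that compares each EXECUTE block with the INPUT block of its target. This is a hypothetical sketch in Python, not a PTC tool: it only understands a simplified subset of Pro/PROGRAM syntax (one assignment per line, no comments, no conditional INPUT logic), assumes you have already exported each model's program text yourself, and the HANDLE_TYPE and WINDOW names in the example are made up.

```python
import re

FLAGS = re.IGNORECASE | re.MULTILINE | re.DOTALL
# EXECUTE ASSEMBLY|PART <name> ... END EXECUTE (simplified syntax only)
EXECUTE_RE = re.compile(
    r"^[ \t]*EXECUTE[ \t]+(?:ASSEMBLY|PART)[ \t]+(\S+)[ \t]*$(.*?)^[ \t]*END[ \t]+EXECUTE", FLAGS)
INPUT_RE = re.compile(r"^[ \t]*INPUT[ \t]*$(.*?)^[ \t]*END[ \t]+INPUT", FLAGS)


def declared_inputs(program: str) -> set[str]:
    """First word of every declaration line inside INPUT ... END INPUT."""
    names = set()
    for block in INPUT_RE.findall(program):
        for line in block.splitlines():
            words = line.split()
            if words and not words[0].startswith('"'):  # skip quoted prompt lines
                names.add(words[0].upper())
    return names


def check_executes(parent: str, children: dict[str, str]) -> list[str]:
    """Report missing EXECUTE targets and passed parameters never declared as INPUT."""
    problems = []
    for target, body in EXECUTE_RE.findall(parent):
        target = target.upper()
        if target not in children:
            problems.append(f"EXECUTE target {target} not found")
            continue
        inputs = declared_inputs(children[target])
        for line in body.splitlines():
            if "=" in line:
                name = line.split("=", 1)[0].strip().upper()
                if name not in inputs:
                    problems.append(f"{target}: {name} is passed but not declared in INPUT")
    return problems


parent = """EXECUTE ASSEMBLY DOOR
HAS_HANDLE = HAS_HANDLE
HANDLE_TYPE = HANDLE_TYPE
END EXECUTE
EXECUTE PART WINDOW
WIDTH = WIDTH
END EXECUTE
"""
door = """INPUT
HAS_HANDLE YES_NO
END INPUT
"""
for problem in check_executes(parent, {"DOOR": door}):
    print(problem)
```

Turning silent warnings into an explicit list is the point; a real checker would need to handle the full statement syntax.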

So yea - we view them as necessary in the right customer use case and commitment to that direction - but they should be used very rarely in our book. But this depends on the problems you are solving and the complexity. If you are dealing with a very narrow structure and configuration, they may work fine... but when you get to 1,000+ parameters?!? And... Yikes... How much repetition and redundancy is occurring to keep all that straight?! My guess is that a significant percentage of those are just being passed around without impacting designs along the way to their target models. Again, this is why targeting changes is easier to create, easier to manage directly and more flexible vs a trickle-down workflow through the assembly structure. (Again, we use Nitro-CELL for that - again disclaimer: we make that also!) And you could achieve similar results without Nitro-CELL using Excel.
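The "target the model directly" idea can be sketched as a flat change list: each spreadsheet row names the model, the parameter and the value, and rows are grouped per target model instead of being relayed through every assembly level. The Python below assumes a hypothetical CSV export with `model`, `parameter` and `value` columns; the model and parameter names are made up, and how the grouped changes are then pushed into Creo (Nitro-CELL, an API, or anything else) is outside this sketch.

```python
import csv
import io
from collections import defaultdict


def changes_by_model(csv_text: str) -> dict[str, dict[str, str]]:
    """Group model,parameter,value rows into one change set per target model.

    Every row goes straight to the model that needs it; no intermediate
    assembly has to declare or relay the parameter."""
    grouped: defaultdict[str, dict[str, str]] = defaultdict(dict)
    for row in csv.DictReader(io.StringIO(csv_text)):
        model = row["model"].strip().upper()
        grouped[model][row["parameter"].strip().upper()] = row["value"].strip()
    return dict(grouped)


sheet = """model,parameter,value
DOOR.ASM,HAS_HANDLE,YES
HINGE.PRT,BOLT_DIA,8
DOOR.ASM,WIDTH,900
"""
print(changes_by_model(sheet))
# {'DOOR.ASM': {'HAS_HANDLE': 'YES', 'WIDTH': '900'}, 'HINGE.PRT': {'BOLT_DIA': '8'}}
```

Note that HINGE.PRT gets its value without DOOR.ASM or any other assembly knowing about BOLT_DIA, which is the contrast with a trickle-down EXECUTE chain.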

Notepad++ with the custom format detection is a good idea, but still very limited in my opinion. YES it makes things easier to visually navigate (color coding and code block identification) than the standard text editor. If it is working for you, then use it for sure.

For us ... the pains of using a text editor to create/manage Pro/PROGRAM was so bad that we created Nitro-PROGRAM to get around the issues and reduce the headaches of the back-and-forth workflow to get things working in our models. It was not just about editing the text file more efficiently, it was more about understanding the data flow, structure and testing the logic faster.

Hope that all makes sense. There is no right or wrong answer on this. Our experience and opinion will be different from others'.

Dave

In my case as a manufacturer, we make custom products that generally follow a particular assembly structure. So my top model for this automation will include just about everything that could possibly be part of a unit (aside from the parts/subs that cannot usually be placed based strictly on rules or standards), and P/P reading a text file generated by Excel will include/exclude and modify those features to order. That's why, in this case, (I think) I've chosen to go the execute route. Not just that, but the end user of this automated model is new to Creo, and I'd like to keep the process of reading parameters into P/P as simple as possible, with as little manual assembly as possible, which is why I'm shooting for one text file load at top-level. I can certainly see how this process would become pretty cumbersome with a more complex model/company, and may lean more toward your method in those cases.
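For the "one text file generated by Excel, read at the top level" workflow, the file can be produced by a short script instead of by hand. This Python sketch assumes the file simply holds one `NAME = value` line per INPUT parameter, with YES_NO values written as YES/NO and strings in double quotes; that format is an assumption, so check it against the file your Creo version actually accepts before relying on it. The `format_input_file` name and the example parameters are hypothetical.

```python
def format_input_file(params: dict[str, object]) -> str:
    """Render parameters as NAME = value lines (ASSUMED file format, see lead-in)."""
    lines = []
    for name, value in params.items():
        if isinstance(value, bool):          # check bool before int: bool is an int subclass
            text = "YES" if value else "NO"
        elif isinstance(value, (int, float)):
            text = str(value)
        else:
            text = f'"{value}"'              # assumed: string values are quoted
        lines.append(f"{name.upper()} = {text}")
    return "\n".join(lines) + "\n"


# Hypothetical parameters, e.g. one order row exported from the spreadsheet.
order = {"has_handle": True, "width": 900, "finish": "RAL9010"}
print(format_input_file(order), end="")
```

Writing the result to disk (for example with `pathlib.Path(...).write_text(...)`) gives the single file the end user loads at the top level.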

Luckily I'm working by myself (and I don't have 1000+ parameters), so I can (::crosses fingers::) build this assembly up very carefully to produce a stable model, hopefully without any of the floating parameters you're referring to. I'll try to not get set in my ways and continually evaluate which direction I should go.

Thanks again for the help, all.

Thanks for your detailed explanation @DavidBigelow. The Nitro-CELL approach is exactly what a company I previously worked for used to do (with Autodesk Inventor), so I'm familiar with that approach. They later switched to a Skeleton Model approach because making use of sketches (with difficult angles) was way easier than calculating those in Excel.

As you say, there's no right or wrong way, just different approaches, and everybody needs to discover which approach works best.

We are a manufacturer, just like @cwilkes, and we also make custom products (custom height, width and options). So we know exactly what our models can/must do. We are using Family Tables, Interchange Assemblies, Component Interfaces, Copy/Publish Geometry, a lot of sheetmetal and a lot of extruded profiles.

Over the years, we have started to move the "logic" away from Pro/Program, and putting it more and more into Excel. If a user wants to move something, he can do it from Excel. If you make smart use of Interchange Assemblies, you can add new options without needing to edit Pro/Program. Just add the new component to the Interchange Assembly and drive that component by name (using a STRING parameter).
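Driving an interchange assembly by name boils down to a lookup: the STRING parameter has to name one of the interchange members, and adding an option only means adding a member. The Python below is a hypothetical toy model of that check (the member names and the `pick_member` function are illustrative; in Creo the swap itself happens inside the model, not in a script like this).

```python
def pick_member(members: set[str], requested: str) -> str:
    """Return the interchange member named by a STRING parameter value.

    Matching is case-insensitive; an unknown name raises ValueError listing
    the valid options instead of silently keeping the current component."""
    wanted = requested.strip().upper()
    lookup = {m.upper(): m for m in members}
    if wanted not in lookup:
        options = ", ".join(sorted(members))
        raise ValueError(f"{requested!r} is not an interchange member ({options})")
    return lookup[wanted]


# Adding a new option means adding a member; pick_member itself does not change.
HANDLES = {"HANDLE_LEVER", "HANDLE_KNOB"}
print(pick_member(HANDLES, "handle_knob"))  # HANDLE_KNOB
HANDLES.add("HANDLE_PULL")
print(pick_member(HANDLES, "HANDLE_PULL"))  # HANDLE_PULL
```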

It might sound normal to us, but to others, seeing a component being swapped out without any errors is some kind of magic 😉

Oh - yea skeletons are a great solution for top-down type of approaches... we use them as 3-D measuring sticks to sync data between models often. That approach can be a game changer for keeping things simple and consistent.

What you just mentioned is one of those edge cases that is often the best solution for keeping things in sync without a lot of "math".

I think your approach makes total sense... I wish there were more options for managing interchange assemblies (I rarely hear of people using those)... They are quite a challenge to manage when you get a LOT of parts with different configurations vs. family tables - but they have tremendous flexibility.

I agree - we used to put a lot of logic into the Pro/PROGRAM - but man it is hard for a customer to understand what is going on when things get done that way. The benefits feel more fragile the more that is in there - and difficult to identify problems when they show up. UDFs have the same headaches at times....

Thanks for the interaction on this - always learning something new.
