Dynamic Time Analysis - Output not as expected

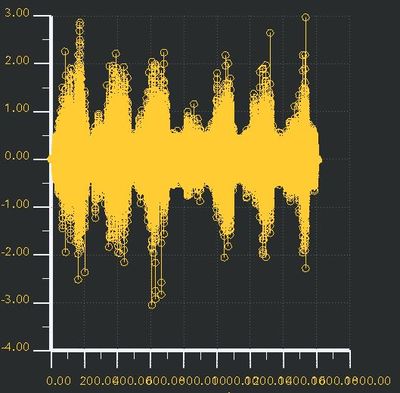

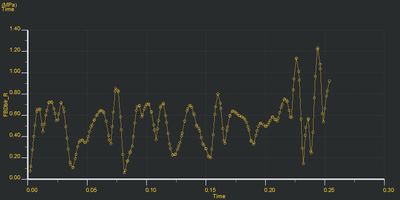

I have a question about Dynamic Time Analysis (DTA). My input is time-history data spanning about 25 minutes. The output does not match the input: I only see 0.25 seconds of data.

I am attaching snapshots to illustrate the problem. Please let me know whether such an analysis is possible in Creo, or whether an output setting needs to be changed somewhere.

Appreciate all the help I have been getting so far. This community has been extremely helpful for someone trying to find things beyond typical online documentation.

Labels: Simulate

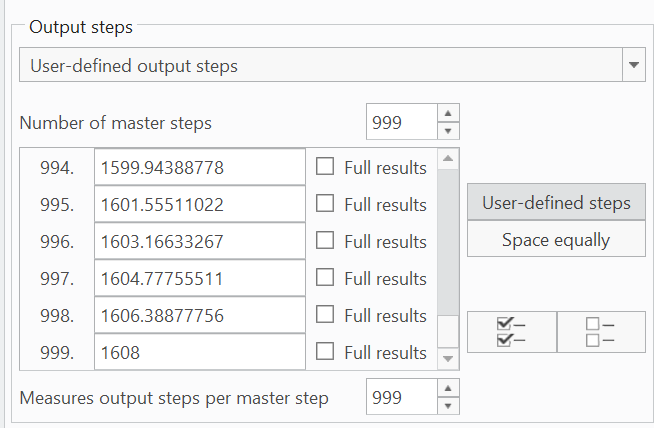

Wow, that is a huge dynamic time simulation. You might be overflowing some data types with this. Try user-defined output steps set to the maximums, as in this image. This will give you roughly the same resolution on the output as on your inputs (I see a sample rate of around 500 Hz).

Change to user-defined steps (the default is 2 steps), set the last step to 1608 seconds, change the number of steps to 999, and hit "space equally". Turn off all the full results check marks for now. Then change the outputs per master step to 999.
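As a rough check on those settings, the arithmetic can be sketched as below. This assumes the master steps are equally spaced and that "outputs per master step" are spread evenly within each master interval; the function name is made up for illustration and is not a Creo API.

```python
def output_sample_rate(duration_s, master_steps, outputs_per_master_step):
    """Approximate output rate in Hz.

    Assumption: equally spaced master steps, with the outputs spread
    evenly inside each master interval.
    """
    total_outputs = master_steps * outputs_per_master_step
    return total_outputs / duration_s


# 999 master steps x 999 outputs each, over the 1608 s input record
rate = output_sample_rate(1608.0, 999, 999)
print(f"{rate:.0f} Hz")  # prints "621 Hz"
```

Under those assumptions the maximum settings land in the same range as the roughly 500 Hz input rate.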

You may have to approach this differently. I suggest that dynamic random might be more appropriate: the time-domain data is pre-processed with a power spectral analysis, and the result is entered as the PSD input in Creo. Another possibility is to choose just the more aggressive portions of the time data, say the worst 10 seconds. The data appears to have been generated by simulation and does not have a constant time step, unlike most real-world acquisitions. Finding a PSD algorithm that accepts an arbitrary sample spacing might be difficult. On the other hand, there are likely methods to resample your input signal to a constant sample rate.
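A minimal sketch of those two ideas, done outside Creo in plain Python: linear interpolation onto a constant time step, then a search for the most severe window. Using the highest RMS as the definition of "worst" is an assumption made here, and both function names are hypothetical.

```python
from bisect import bisect_right


def resample_uniform(times, values, rate_hz):
    """Linearly interpolate an irregularly sampled signal onto a uniform grid.

    `times` must be strictly increasing.
    """
    dt = 1.0 / rate_hz
    n = int((times[-1] - times[0]) / dt + 1e-9) + 1
    out_t, out_v = [], []
    for i in range(n):
        t = times[0] + i * dt
        # index of the sample to the right of t, clamped to a valid bracket
        j = min(max(bisect_right(times, t), 1), len(times) - 1)
        t0, t1 = times[j - 1], times[j]
        w = (t - t0) / (t1 - t0)
        out_t.append(t)
        out_v.append(values[j - 1] + w * (values[j] - values[j - 1]))
    return out_t, out_v


def worst_window(values, rate_hz, window_s):
    """Return (start_index, rms) of the window with the highest RMS.

    Assumption: "worst" means highest RMS; peak value would be another choice.
    """
    n = int(round(window_s * rate_hz))
    sq = [v * v for v in values]
    running = sum(sq[:n])
    best_start, best_sum = 0, running
    for start in range(1, len(sq) - n + 1):
        running += sq[start + n - 1] - sq[start - 1]
        if running > best_sum:
            best_start, best_sum = start, running
    return best_start, (best_sum / n) ** 0.5


# Irregularly sampled ramp (value = 10 * t), resampled at 10 Hz
t_in = [0.0, 0.3, 0.5, 1.0, 1.2, 2.0]
v_in = [0.0, 3.0, 5.0, 10.0, 12.0, 20.0]
t_out, v_out = resample_uniform(t_in, v_in, 10.0)

# A quiet signal with a 5-sample burst, sampled at 1 Hz
burst = [0.0] * 10 + [1.0] * 5 + [0.0] * 10
start, rms = worst_window(burst, 1.0, 5.0)
```

Linear interpolation is the simplest choice; if the input contains content near half the new sample rate, a proper anti-aliasing resampler would be safer.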

How do you plan to sort through and summarize your 25 minutes of results?

Thanks for the feedback!

I am currently working with 40 seconds of data extracted from the original run :). I figured working with fewer data points would help.

So I have 41 master steps (one per second) and 500 points per master step (matching the sample rate).

What does turning off full results do? My understanding is that even with full results turned off, I will still get measures at each of these time steps (all 41 × 500 data points).

Also, I understand that Dynamic Frequency or Dynamic Random would be easier to run, but they lose phase information, which is important here. The loading is more deterministic than random, so I worry that dropping the real-time phase will affect the accuracy of the loading at different locations. I just wanted to compare time-domain runs with the frequency analysis.
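To illustrate the phase concern with a toy signal (not the actual loading): two signals built from the same pair of sinusoids, differing only in the phase of one component, have identical amplitude spectra but different time-domain peaks. The signals and names below are made up for this sketch.

```python
import cmath
import math

N = 64  # samples over one fundamental period


def amplitude_spectrum(x):
    """Magnitude of a naive DFT, scaled so a unit sinusoid gives 1.0 (bins m >= 1)."""
    n = len(x)
    return [
        abs(sum(x[k] * cmath.exp(-2j * math.pi * m * k / n) for k in range(n))) * 2 / n
        for m in range(n // 2)
    ]


theta = [2 * math.pi * k / N for k in range(N)]
# Same two components; only the phase of the third harmonic differs
in_phase = [math.sin(t) + math.sin(3 * t) for t in theta]
shifted = [math.sin(t) + math.sin(3 * t + math.pi / 2) for t in theta]

spec_a = amplitude_spectrum(in_phase)
spec_b = amplitude_spectrum(shifted)
same_spectrum = all(abs(a - b) < 1e-9 for a, b in zip(spec_a, spec_b))

peak_a = max(abs(v) for v in in_phase)
peak_b = max(abs(v) for v in shifted)
print(same_spectrum, round(peak_a, 2), round(peak_b, 2))
```

A PSD keeps only the amplitude content, so it cannot tell these two signals apart even though their peaks differ.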

Thanks again, I'll come back with more questions.

Great, 40 seconds is more doable! You are correct: measures will be reported for all 41*500 data points. Full results let you look at the full 3D stress/deflection fringe plots and animations, which means data for every element at the plotting grid resolution and therefore large data files. I would find where the measures point to high stress or deflection and re-run with full results turned on at those exact times only. Usually I have to add user-defined master times at the right times, and then I turn on full results for just those particular times.
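The "find where the measures peak" step could be sketched like this, assuming the measure history has been exported to plain lists of times and values. The function name, the sample data, and the minimum spacing between picks are all hypothetical.

```python
def peak_times(times, measure, count=3, min_separation_s=0.5):
    """Pick the times of the largest |measure| values, keeping picks apart."""
    # Indices ordered from largest to smallest absolute value
    order = sorted(range(len(measure)), key=lambda i: abs(measure[i]), reverse=True)
    picked = []
    for i in order:
        if all(abs(times[i] - times[j]) >= min_separation_s for j in picked):
            picked.append(i)
        if len(picked) == count:
            break
    return sorted(times[i] for i in picked)


# Made-up measure history sampled every 0.5 s
t = [i * 0.5 for i in range(10)]
stress = [0, 1, 9, 8, 0, -7, 0, 2, 0, 5]
candidates = peak_times(t, stress, count=3, min_separation_s=1.0)
print(candidates)  # prints [1.0, 2.5, 4.5]
```

The returned times are candidates for user-defined master times with full results turned on.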

How long are 41*500 time steps supposed to take? The analysis has been running locally for the last 15 hours and has completed only 2829 time steps so far. The model has 15k surface elements and 11k 3D elements. This is a fairly stripped-down model; normally I have to run analyses on models considerably larger than this one.
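For a rough sense of scale, the progress so far can be extrapolated linearly. This assumes every step costs about the same and that the 2829 reported steps count against the 41*500 requested outputs, neither of which is confirmed in this thread.

```python
def estimate_total_hours(elapsed_h, steps_done, steps_total):
    """Linear extrapolation, assuming a constant cost per time step."""
    return elapsed_h * steps_total / steps_done


total_steps = 41 * 500  # 20500 requested output points
total_h = estimate_total_hours(15.0, 2829, total_steps)
remaining_h = total_h - 15.0
print(f"{total_h:.1f} h total, {remaining_h:.1f} h remaining")
# prints "108.7 h total, 93.7 h remaining"
```

At the observed pace that is about 19 seconds per step, or roughly four and a half days for the full run.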