Top 5 Monitoring Best Practices

By Tim Atwood and Dave Bernbeck, Edited by Tori Firewind

Adapted from the March 2021 Expert Session

Produced by the IoT Enterprise Deployment Center

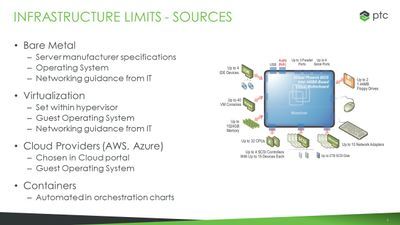

The primary purpose of monitoring is to determine when your application may be exhausting the available resources. Knowledge of the infrastructure limits helps establish these monitoring boundaries, giving straightforward thresholds that indicate when an app has gone too far. The four main areas to monitor in this way are CPU, Memory, Networking, and Disk.

For the CPU, we want to know how many cores are available to the application and, potentially, the temperature of each core or other indicators of overload. For Memory, we want to know how much RAM is available to the application. For Networking, we want to know the network throughput, the available bandwidth, and the general capability of the network cards. For Disk, we track the read and write rates of the disks used by the application, as well as how much free space remains on them.
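As a rough illustration of gathering two of these figures on a host, the sketch below uses only Python's standard library to report the cores the current process may run on and the free disk space. It is a starting point under stated assumptions, not a monitoring agent: memory, temperature, and network or disk I/O rates are not exposed by the standard library, and a third-party package such as psutil (for example `psutil.virtual_memory()` and `psutil.disk_io_counters()`) is one common option for those.

```python
import os
import shutil


def available_cores() -> int:
    """Number of CPU cores this process may run on.

    On Linux, os.sched_getaffinity honours CPU pinning; elsewhere fall back to
    os.cpu_count(). Neither reflects a container CPU quota.
    """
    if hasattr(os, "sched_getaffinity"):
        return len(os.sched_getaffinity(0))
    return os.cpu_count() or 1


def disk_free_percent(path: str = ".") -> float:
    """Percentage of free space on the filesystem that contains `path`."""
    usage = shutil.disk_usage(path)
    return 100.0 * usage.free / usage.total


if __name__ == "__main__":
    print(f"cores available: {available_cores()}")
    print(f"free disk space: {disk_free_percent():.1f}%")
```

Inside a container, the core count reported here can be higher than the CPU quota the container is actually allowed to use, so container limits need to be read separately.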

There are several major infrastructure categories, reflecting common modes of operation for ThingWorx applications. One is Bare Metal, the traditional model in which the operating system runs directly on the hardware with no intermediary. The limits of the hardware in this case can be found in the manufacturer's specifications, in the operating system settings, and usually in IT department records. The IT team is a great resource for obtaining these limits in general, and it typically also tracks them for VMware and other virtualized infrastructure models.

Lastly, Containers can be used to allocate operating system resources as needed, in a much more granular way that better supports sharing resources across multiple systems. Here the limits are defined in the configuration files or charts that define the container.
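The limits set in those configuration files or charts are ultimately enforced by the Linux kernel, and on hosts using cgroup v2 they can be read back from inside the running container. The sketch below assumes cgroup v2 mounted at `/sys/fs/cgroup` (cgroup v1 uses different file names) and parses `memory.max` and `cpu.max`; the function names are hypothetical helpers, not part of any PTC tool.

```python
import os
from typing import Dict, Optional


def parse_memory_max(text: str) -> Optional[int]:
    """Parse cgroup v2 memory.max: a byte count, or None when the value is "max" (unlimited)."""
    value = text.strip()
    return None if value == "max" else int(value)


def parse_cpu_max(text: str) -> Optional[float]:
    """Parse cgroup v2 cpu.max ("<quota> <period>") into CPUs; None when the quota is "max"."""
    quota, period = text.split()
    return None if quota == "max" else int(quota) / int(period)


def read_container_limits(cgroup_dir: str = "/sys/fs/cgroup") -> Dict[str, Optional[float]]:
    """Read memory and CPU limits; None means unlimited or the file is missing (e.g. cgroup v1)."""
    limits: Dict[str, Optional[float]] = {}
    for name, parser in (("memory.max", parse_memory_max), ("cpu.max", parse_cpu_max)):
        try:
            with open(os.path.join(cgroup_dir, name)) as f:
                limits[name] = parser(f.read())
        except FileNotFoundError:
            limits[name] = None
    return limits
```

A container started with a two-CPU quota and a 2 GiB memory limit would typically show `cpu.max` as `200000 100000` and `memory.max` as `2147483648`.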

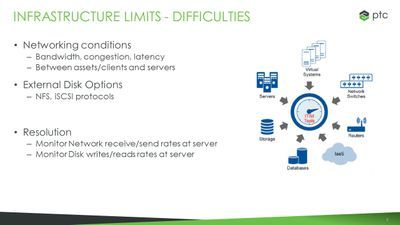

The difficulty here lies in learning what the limits are, especially for network and disk usage. Network bandwidth can fluctuate, and increased latency and network congestion can occur at random times for no apparent reason. Most monitoring scenarios can therefore make do with collecting the network send and receive rates, as well as the disk read and write rates, measured on the server.

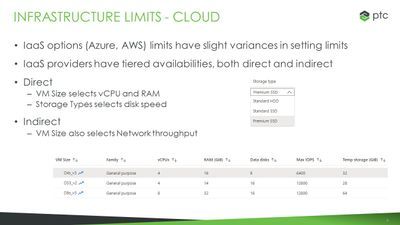

Cloud providers like Azure offer VM and disk sizing options that let you select exactly what you need, but for network throughput (network IO), the choices are not as varied. Network IO tends to increase with the size of the VM, in proportion to the number of CPU cores and the amount of Memory, so a VM may have to be oversized relative to the user load and the bulk of the application in order to accommodate a large or noisy edge fleet.
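To make this sizing trade-off concrete, the sketch below picks the smallest VM size that satisfies CPU, memory, and network requirements together. The catalog names and bandwidth figures are invented for illustration; real values must come from your cloud provider's published size specifications.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class VmSize:
    name: str
    vcpus: int
    memory_gb: int
    network_mbps: int  # expected network bandwidth for this size


# Hypothetical catalog, ordered smallest to largest; not real provider data.
CATALOG: List[VmSize] = [
    VmSize("size-small", 4, 16, 2000),
    VmSize("size-medium", 8, 32, 4000),
    VmSize("size-large", 16, 64, 8000),
    VmSize("size-xlarge", 32, 128, 16000),
]


def smallest_fit(vcpus: int, memory_gb: int, network_mbps: int,
                 catalog: List[VmSize] = CATALOG) -> Optional[VmSize]:
    """Return the first (smallest) size meeting all three requirements, or None."""
    for size in catalog:
        if (size.vcpus >= vcpus and size.memory_gb >= memory_gb
                and size.network_mbps >= network_mbps):
            return size
    return None
```

With this hypothetical catalog, `smallest_fit(4, 16, 6000)` returns `size-large`: the user load alone would fit on `size-small`, but the edge fleet's network requirement forces a larger VM.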

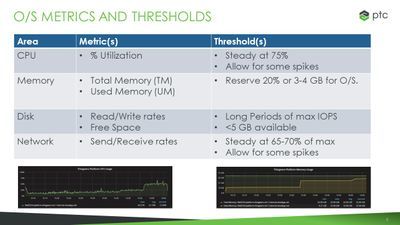

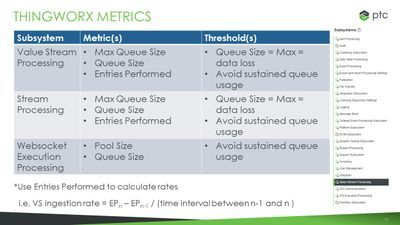

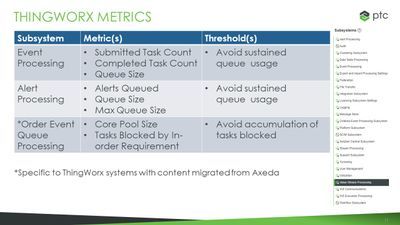

The next few slides list the operating metrics and common thresholds used for each. We often use these thresholds in our own simulations here at PTC, but note that every use case is different, and each situation should be analyzed individually before setting performance limits.

Generally, you will want to monitor:

- CPU: % utilization of all CPU cores, leaving plenty of room for spikes in activity.
- Memory: total and used memory, ensuring that total memory remains constant and that used memory stays below a reasonable percentage of the total. For smaller systems (16 GB and lower), this means leaving around 20% of Memory for the OS; for larger systems, usually around 3-4 GB.
- Disk: read and write rates, along with free space, ensuring there is ample headroom for spikes and avoiding any situation that might result in system downtime.
- Networking: send and receive rates, which should stay below 70% or so, again to leave room for spikes.
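The guidance above can be turned into a simple per-sample check. In this sketch, only the roughly 70% network ceiling and the memory reserve (about 20% up to 16 GB, otherwise a flat allowance) come from the text; the CPU and disk thresholds, and the choice of 4 GB from the 3-4 GB range, are assumptions to tune for each system.

```python
from typing import List

# Thresholds: the 70% network figure and the memory reserve follow the guidance
# above; the CPU and disk values are assumptions to tune for each system.
CPU_MAX_PCT = 75.0
NETWORK_MAX_PCT = 70.0
DISK_MIN_FREE_PCT = 20.0


def memory_reserve_gb(total_gb: float) -> float:
    """Memory to leave for the OS: about 20% up to 16 GB, otherwise a flat 4 GB (upper end of 3-4 GB)."""
    if total_gb <= 16:
        return 0.20 * total_gb
    return 4.0


def check_sample(cpu_pct: float, mem_used_gb: float, mem_total_gb: float,
                 net_pct: float, disk_free_pct: float) -> List[str]:
    """Return a warning string for each metric outside its threshold."""
    warnings = []
    if cpu_pct > CPU_MAX_PCT:
        warnings.append(f"CPU at {cpu_pct:.0f}%")
    mem_limit = mem_total_gb - memory_reserve_gb(mem_total_gb)
    if mem_used_gb > mem_limit:
        warnings.append(f"memory used {mem_used_gb:.1f} GB exceeds {mem_limit:.1f} GB")
    if net_pct > NETWORK_MAX_PCT:
        warnings.append(f"network at {net_pct:.0f}% of capacity")
    if disk_free_pct < DISK_MIN_FREE_PCT:
        warnings.append(f"only {disk_free_pct:.0f}% disk free")
    return warnings
```

For a 16 GB system this allows about 12.8 GB of used memory before warning; for a 32 GB system, 28 GB.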

In any monitoring situation, consistently high utilization should trigger concern and an investigation into what's happening. Were new assets added? Has any recent change caused a regression or other issues?
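The distinction between short spikes and consistently high utilization can be sketched with a sliding window: the detector below only reports a problem when every sample in the last `window` readings exceeds the threshold. The class name, threshold, and window length are illustrative assumptions.

```python
from collections import deque
from typing import Deque


class SustainedHighDetector:
    """Flags utilization that stays above a threshold for `window` consecutive samples."""

    def __init__(self, threshold_pct: float, window: int) -> None:
        self.threshold_pct = threshold_pct
        self.recent: Deque[float] = deque(maxlen=window)

    def add(self, utilization_pct: float) -> bool:
        """Record a sample; return True only once the whole window is above the threshold."""
        self.recent.append(utilization_pct)
        return (len(self.recent) == self.recent.maxlen
                and all(v > self.threshold_pct for v in self.recent))
```

With a threshold of 80% and a window of 3, the samples 95, 50, 85, 90, 92 produce no alert until the last one: the initial 95% spike alone is ignored.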

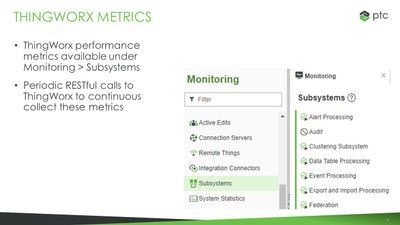

Any recent changes should be inspected, and the infrastructure sizing should be reconsidered as well. For ThingWorx-specific monitoring, we look at max queue sizes, entries performed, pool sizes, alerts, submitted task counts, and anything that might indicate some kind of data loss. We want the queues to be cleared out consistently, to reduce the risk of losing data in the case of an interruption and to ensure resource use does not build up and cause issues over time.
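One way to check that a queue is being cleared out consistently is to sample its size at a fixed interval and look at the trend. The sketch below assumes you already collect those queue-size samples from the relevant subsystem metrics; it fits a least-squares slope and flags a queue that trended upward without ever emptying during the window. The zero-slope cut-off is an assumption to tune.

```python
from typing import Sequence


def queue_slope(samples: Sequence[float]) -> float:
    """Least-squares slope of queue size per sampling interval (positive = growing)."""
    n = len(samples)
    if n < 2:
        return 0.0
    mean_x = (n - 1) / 2
    mean_y = sum(samples) / n
    num = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(samples))
    den = sum((x - mean_x) ** 2 for x in range(n))
    return num / den


def queue_not_draining(samples: Sequence[float], max_slope: float = 0.0) -> bool:
    """True if the queue trended upward and never emptied during the window."""
    return len(samples) > 0 and queue_slope(samples) > max_slope and min(samples) > 0
```

Requiring `min(samples) > 0` avoids flagging a busy queue that still drains to zero between bursts.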

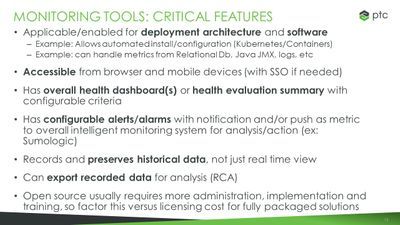

In order for a monitoring set-up to be truly helpful, it needs to make certain information easily accessible to the administrative users of the application. Any metrics relevant to performance need to be processed and recorded in a location that admins can reach quickly from wherever they are. They should be able to tell the health of the application at a glance, without needing to drill down to become aware of issues. Likewise, alerts should be meaningful, with minimal false alarms, and ideally they are configurable by the admins from within the application via some sort of rules engine (see the DGIS guide, soon to be released in version 9.1). The monitoring tool should also be able to save the system history and export it for further analysis, all in the name of reducing future downtime and creating a stable enterprise system.
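This is not the DGIS rules engine mentioned above; it is only a minimal sketch of the underlying idea, keeping alert rules as editable data (here JSON) rather than code, so admins can tune limits to cut false alarms. The metric names, limits, and severities are hypothetical.

```python
import json
import operator
from typing import Dict, List

# Rules as data so administrators can edit them without code changes.
# Metric names and limits below are hypothetical examples.
RULES_JSON = """
[
  {"metric": "cpu_pct", "op": ">", "limit": 85, "severity": "warning"},
  {"metric": "queue_size", "op": ">", "limit": 10000, "severity": "critical"}
]
"""

OPS = {">": operator.gt, ">=": operator.ge, "<": operator.lt, "<=": operator.le}


def evaluate(rules: List[dict], metrics: Dict[str, float]) -> List[str]:
    """Return one alert message per rule whose metric is present and breaches its limit."""
    alerts = []
    for rule in rules:
        value = metrics.get(rule["metric"])
        if value is not None and OPS[rule["op"]](value, rule["limit"]):
            alerts.append(f'{rule["severity"]}: {rule["metric"]}={value} {rule["op"]} {rule["limit"]}')
    return alerts


rules = json.loads(RULES_JSON)
```

Calling `evaluate(rules, {"cpu_pct": 90, "queue_size": 5})` yields a single warning for CPU; metrics missing from a sample are skipped rather than treated as breaches.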

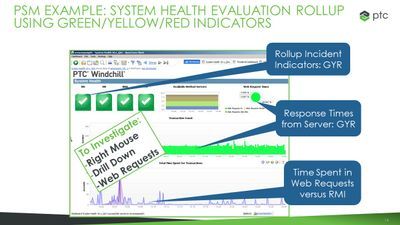

This dashboard (above) is a good example of how to roll up a number of performance criteria into health indicators for various aspects of the application. Here a Green-Yellow-Red color-coding system flags issues such as web requests taking longer than 30 s or 3 minutes to respond.
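A rollup of this kind can be sketched as two steps: color each measurement, then let each health indicator show the worst color among its inputs. Treating 30 s as the yellow boundary and 3 minutes as the red boundary is an assumption about how the dashboard's thresholds map to colors.

```python
from typing import Iterable

GREEN, YELLOW, RED = "green", "yellow", "red"
SEVERITY = {GREEN: 0, YELLOW: 1, RED: 2}


def request_status(seconds: float) -> str:
    """Color one web request: over 30 s is yellow, over 3 minutes is red (assumed mapping)."""
    if seconds > 180:
        return RED
    if seconds > 30:
        return YELLOW
    return GREEN


def rollup(statuses: Iterable[str]) -> str:
    """A health indicator shows the worst status among its inputs (green if there are none)."""
    return max(statuses, key=SEVERITY.__getitem__, default=GREEN)
```

Taking the worst status keeps a single slow request visible at the top level, which suits the at-a-glance goal; averaging would hide it.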

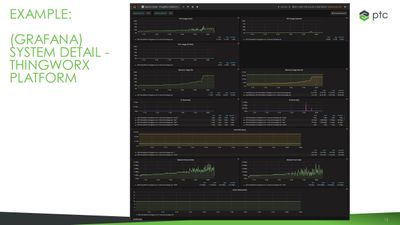

Grafana is another application our team uses internally for monitoring. Its easy dashboard creation and built-in chart modes make this tool very easy to get started with, and easy to refer to from a central location over time.

Setting this up is helpful for load testing and for preparing an application for go-live, but it is also beneficial for continued monitoring after go-live, which is why it is a worthy investment. Our team usually builds a link based on the start and end time of each simulation performed, with all of the servers monitored by a single Grafana server as one reference point.
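A link like that can be generated from each test's start and end times. Grafana dashboard URLs accept `from` and `to` query parameters as epoch milliseconds; the sketch below assumes the `/d/<uid>/<slug>` URL form, and the host name, dashboard UID, and slug are placeholders.

```python
from datetime import datetime, timezone
from urllib.parse import urlencode


def grafana_test_link(base_url: str, dashboard_uid: str, slug: str,
                      start: datetime, end: datetime) -> str:
    """Build a dashboard link scoped to one test window (from/to in epoch milliseconds)."""
    params = {"from": int(start.timestamp() * 1000), "to": int(end.timestamp() * 1000)}
    return f"{base_url.rstrip('/')}/d/{dashboard_uid}/{slug}?{urlencode(params)}"


if __name__ == "__main__":
    # Placeholder host and dashboard; use timezone-aware datetimes to avoid local-time surprises.
    start = datetime(2021, 3, 1, 12, 0, tzinfo=timezone.utc)
    end = datetime(2021, 3, 1, 13, 0, tzinfo=timezone.utc)
    print(grafana_test_link("https://grafana.example.com", "abc123", "thingworx", start, end))
```

Storing one such link per simulation run gives a fixed, shareable view of every monitored server for exactly that test window.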

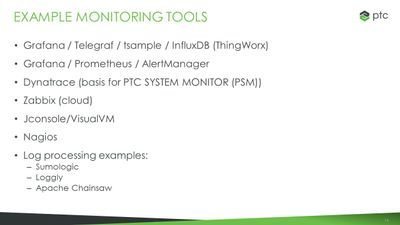

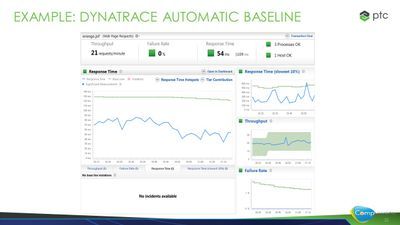

Consider using PTC Performance Advisor (also called Dynatrace) to make monitoring these metrics easier.

When most administrators think of monitoring, they think of reading and reacting to dashboards, alerts, and reports. Rarely does the idea of benchmarking come to mind as a monitoring activity, and yet, having successful benchmarks of system performance can be a crucial part of knowing if an application is functioning as expected before there are major issues. Benchmarks also look at the response time of the server and can better enable

tracking of actual end user experience. The best

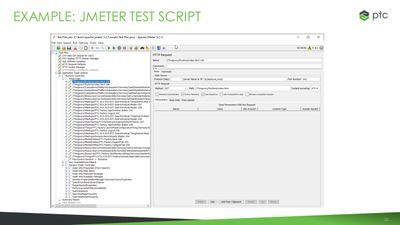

option is to automate such tests using JMeter or other applications, producing a daily snapshot of user performance that can anticipate future issues and create a more reliable experience for end users over time.
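A daily snapshot becomes most useful when it is compared against a known-good baseline. The sketch below flags transactions whose response time grew by more than a tolerance; the 20% default, the transaction names, and the idea of a per-transaction baseline dictionary are assumptions for illustration.

```python
from typing import Dict


def regressions(baseline: Dict[str, float], today: Dict[str, float],
                tolerance: float = 0.20) -> Dict[str, float]:
    """Transactions slower than baseline by more than `tolerance`, mapped to today/baseline ratio."""
    flagged: Dict[str, float] = {}
    for name, base in baseline.items():
        current = today.get(name)
        if current is not None and base > 0 and current > base * (1 + tolerance):
            flagged[name] = current / base
    return flagged


if __name__ == "__main__":
    baseline = {"login": 1.0, "search": 2.0}  # seconds, from a known-good run (hypothetical)
    today = {"login": 1.5, "search": 2.1}
    print(regressions(baseline, today))
```

Here `login` is flagged at 1.5 times its baseline, while `search` (5% slower) stays within tolerance.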

JMeter also has the option to build custom reports. It is good for simulating user load, which often makes up most of the server load of a ThingWorx application, especially since ingestion is typically optimized independently and given the most thought. The most unexpected issues tend to pop up within the application itself, after the project has gone live.
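As one example of a custom report, the sketch below summarizes a JMeter results file per sampler label: median and 90th-percentile elapsed time plus error rate. It assumes CSV output with a header row that includes JMeter's standard `label`, `elapsed`, and `success` columns; the percentile uses the nearest-rank method, which may differ slightly from JMeter's own calculations.

```python
import csv
import math
import statistics
from collections import defaultdict
from typing import Dict, Iterable, List


def nearest_rank(sorted_values: List[int], pct: float) -> int:
    """Nearest-rank percentile of an ascending list."""
    k = math.ceil(pct / 100 * len(sorted_values))
    return sorted_values[max(k, 1) - 1]


def summarize_jtl(lines: Iterable[str]) -> Dict[str, dict]:
    """Per-label median and 90th-percentile elapsed time (ms) plus error rate from CSV results."""
    elapsed: Dict[str, List[int]] = defaultdict(list)
    failures: Dict[str, int] = defaultdict(int)
    for row in csv.DictReader(lines):
        label = row["label"]
        elapsed[label].append(int(row["elapsed"]))
        if row["success"].strip().lower() != "true":
            failures[label] += 1
    summary = {}
    for label, values in elapsed.items():
        values.sort()
        summary[label] = {
            "median_ms": statistics.median(values),
            "p90_ms": nearest_rank(values, 90),
            "error_rate": failures[label] / len(values),
        }
    return summary
```

Pass an open file, for example `summarize_jtl(open("results.jtl", newline=""))`, where `results.jtl` is a hypothetical file name. For a standard report, JMeter can also generate its built-in HTML dashboard from an existing results file with `jmeter -g results.jtl -o report/`.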

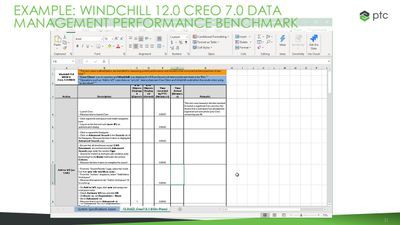

Shown here (right) is an example benchmark from a Windchill application, published by PTC to facilitate comparison between optimized test systems and real-life performance. Dynatrace is also depicted here, showing an automated baseline (using Smart URL Detection) on Response Time (median and 90th percentile) as well as Failure Rate. We can also look at Throughput and compare it with the expected value range based on historical throughput data.
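Dynatrace's automated baselining is its own feature. As a much simpler stand-in for the throughput comparison, the sketch below treats the mean plus or minus k standard deviations of historical throughput as the expected range and flags values outside it; k = 2 and the sample history are assumptions.

```python
import statistics
from typing import Sequence, Tuple


def expected_range(history: Sequence[float], k: float = 2.0) -> Tuple[float, float]:
    """Expected throughput band: mean +/- k sample standard deviations (needs >= 2 points)."""
    mean = statistics.mean(history)
    spread = statistics.stdev(history)
    return mean - k * spread, mean + k * spread


def throughput_anomaly(history: Sequence[float], current: float, k: float = 2.0) -> bool:
    """True when the current throughput falls outside the expected band."""
    low, high = expected_range(history, k)
    return not (low <= current <= high)
```

Both directions matter: throughput well below the band can indicate failing requests or idle assets, not just a quiet day.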

Monitoring typically increases system performance

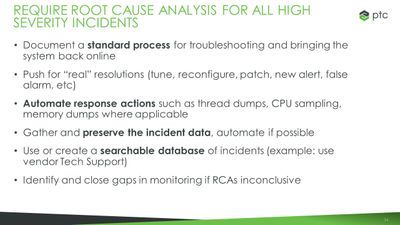

and availability, but its other advantage is to provide faster, more effective troubleshooting. Establish a systematic process or checklist to step through when problems occur, something that is organized to be done quickly, but still takes the time to find and fix the underlying problems. This will prevent issues from happening again and again and polish the system periodically as problems occur, so that the stability and integrity of the system only improves over time. Push for real solutions if possible, not band-aids, even if more downtime is needed up front; it is always better to have planned downtime up front than unplanned downtime down the line. Close any monitoring gaps when issues do occur, which is the valid RCA response if not enough information was captured to actually diagnose or resolve the issue.

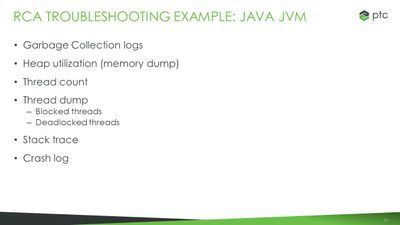

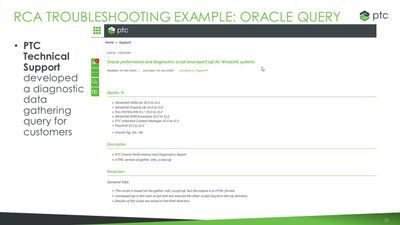

based on inputted criteria. Another example of troubleshooting is for the Java JVM, where we look at all of the things listed here (below) in an automated, documented process that then generates a report for easy end user consumption.

Don’t hesitate to reach out to PTC Technical Support in advance to go over your RCA processes, to review benchmark discrepancies between what PTC publishes and what your real-life systems show, and to ensure your monitoring is adequate to maintain system stability and availability at all times.

A very interesting session, delivering an enormous amount of knowledge bits per second 🙂

Thanks guys and gals, keep doing great events like this!

The Q&A session was interesting too, so I'm looking forward to seeing the recording with audio.

For the ones that missed the session, here is the recording. The Q&A piece will be posted soon.

If PTC manages our platform, how can we monitor these properties? The statements https://www.ptc.com/en/support/cloud-engagement-guide/monitoring-reporting/ptc-responsibilities do not discuss anything mentioned in this seminar.

hey all,

here is the Q&A transcript from the live session:

How do I balance monitoring with security?

(Tim Atwood) No security exceptions should be allowed for monitoring. Your IT security department must be involved in the evaluation and implementation. Some monitoring tools may only work within the corporate intranet; others might allow browser or app access over the public internet while maintaining security requirements like SSO and full encryption. This should be part of your evaluation.

Do you have any resources on how to use JMeter since you recommend it a few times here?

(Tori Firewind) There is a series of articles on the IoT Tech Tips Forum of the PTC Community, under the label "EDC", which has loads of information on how to get started with JMeter. The final installment post has an attached PDF with all of this information in one handy place. Check it out now: https://community.ptc.com/t5/IoT-Tech-Tips/Announcing-the-Final-Installment-JMeter-for-ThingWorx/m-p/694477/thread-id/1711

What other metrics could be monitored that were not mentioned?

(David Bernbeck) The discussed metrics are useful for almost any monitoring scenario. But there are other ThingWorx subsystems that can be monitored if it fits the use case. For example, FileTransferSubsystem should be monitored when ThingWorx is sending files to or receiving files from assets.

Will we be able to get a copy of these slides?

(Tori Firewind) A full summary of this presentation including the slides is already published in the PTC Community to accompany our presentation. You can find that here: https://community.ptc.com/t5/IoT-Tech-Tips/Top-5-Monitoring-Best-Practices/td-p/720732

Is the JMeter test script available for download?

(Tim Atwood) There is a JMeter test script called Windchill MultiUser Load generator that PTC makes available. It is only for Windchill. We are evaluating whether to make something similar available for ThingWorx or Navigate.

Are any utilities or extensions available to build mashups like the ones shown for monitoring performance?

(Tim Atwood) A best practice method for built-in performance checking is to use the Support subsystem: https://support.ptc.com/help/thingworx/platform/r9/en/index.html#page/ThingWorx%2FHelp%2FComposer%2FSystem%2FSubsystems%2FSupportSubsystem.html%23wwID0EKZPZ

and the Utilization subsystem:

Is there already a PTC proprietary tool, or a plan to develop such a tool, to monitor key indicators in TWX Foundation, Windchill, and Kepware? If not, what is your personal opinion of and experience with Zabbix and Grafana? Which is more granular, easier to set up, etc.?

(Tori Firewind) Here is the PTC Community Post: https://community.ptc.com/t5/IoT-Tech-Tips/ThingWorx-Performance-Monitoring-with-Grafana/m-p/656996

How can we avoid showing Mashup parameters on MashupLink?

(Tim Atwood) https://www.ptc.com/en/support/article/CS318298 is the closest to an answer.

What other practices should be considered for monitoring, apart from these top 5?

(Tim Atwood) Make sure to stay up to date with upgrades, patches, and point releases so that you maintain security, performance, and compatibility with the latest operating systems. I also mentioned the new Release Advisor (https://www.ptc.com/en/support/article/CS339673).