Using the SC API: Pitfalls to Avoid

Using the Solution Central API

Pitfalls to Avoid

by Victoria Firewind, IoT EDC

Introduction

The Solution Central API provides a new process for publishing ThingWorx solutions that are developed or modified outside of the ThingWorx Platform. For those building extensions, using third-party libraries, or who are simply more comfortable developing in an IDE external to ThingWorx, the SC API makes it simple to still use Solution Central for all solution management and deployment needs, in line with ThingWorx DevOps best practices. This article homes in on some pitfalls that may arise while setting up the infrastructure to use the SC API, and it assumes that AD integration and an OAuth token fetcher application are already configured for these requests.

CURL

One of the easiest ways to interface with the SC API is via cURL. Publishing solutions to Solution Central then becomes a series of cURL requests, which can be scripted and automated as part of a mature DevOps process. Previous posts demonstrate the process of acquiring an OAuth token. The token is valid only for a few moments, but for any number of requests in that time, so the easiest approach is to request a fresh token before each step of the process.

1. GET info about a solution or one of its files (a file download is shown), or about all solutions (by leaving off everything after "solutions" in the URL)

$RESULT=$(curl -s -o test.zip --location --request GET "https://<your_sc_url>/sc/api/solutions/org.ptc:somethingoriginal12345:1.0.0/files/SampleTwxExtension.zip" `
    --header "Authorization: Bearer $ACCESS_TOKEN" `
    --header 'Content-Type: application/json' `
)

The URL shown here contains the GAV ID (Group:Artifact_ID:Version). It appears throughout the Swagger UI (found under Help within your Solution Central portal) as {ID}, and it includes the colons. To query for solutions, see the parameter options listed in the Swagger UI (the cURL syntax for providing such parameters is shown in the next example).

Potential Pitfall: if your solution is not published yet, then you can get the information about it, where it exists in the SC repo, and what files it contains, but none of the files will be downloadable until it is published. Any attempt to retrieve unpublished files will result in a 404.
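Since the GAV ID appears in almost every request URL, it can help to build it in one place. The following is only a minimal sketch: the class and method names (GavUrl, gavId, endpoint) and the host sc.example.com are hypothetical, and the paths are simply the ones that appear in the cURL examples in this article.

```java
import java.net.URI;

/** Hypothetical helper that assembles SC API URLs from a GAV ID. */
final class GavUrl {

    /** Joins group, artifact, and version with colons, the form used for {ID}. */
    static String gavId(String groupId, String artifactId, String version) {
        return groupId + ":" + artifactId + ":" + version;
    }

    /**
     * Builds a URL under /sc/api/solutions/{ID}. The suffix is a path such as
     * "/files", "/files/SampleTwxExtension.zip", or "/publish".
     * baseUrl is assumed to look like https://<your_sc_url> with no trailing slash.
     */
    static URI endpoint(String baseUrl, String gavId, String suffix) {
        return URI.create(baseUrl + "/sc/api/solutions/" + gavId + suffix);
    }

    public static void main(String[] args) {
        String id = gavId("org.ptc", "somethingoriginal12345", "1.0.0");
        // sc.example.com is a placeholder host, not a real Solution Central URL
        System.out.println(endpoint("https://sc.example.com", id, "/files/SampleTwxExtension.zip"));
    }
}
```

Keeping the colons inside a single helper avoids accidentally encoding or dropping them when the URLs are assembled by a script.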

2. Create a new solution using POST

$RESULT=$(curl -s --location --request POST "https://<your_sc_url>/sc/api/solutions" `
    --header "Authorization: Bearer $ACCESS_TOKEN" `
    --header 'Content-Type: application/json' `
    -d '"{\"groupId\": \"org.ptc\", \"artifactId\": \"somethingelseoriginal12345\", \"version\": \"1.0.0\", \"displayName\": \"SampleExtProject\", \"packageType\": \"thingworx-extension\", \"packageMetadata\": {}, \"targetPlatform\": \"ThingWorx\", \"targetPlatformMinVersion\": \"9.3.1\", \"description\": \"\", \"createdBy\": \"vfirewind\"}"'
)

Whether the escape characters are needed for the double quotes depends on your PowerShell or Bash settings, and the exact syntax may vary. A 201 response means the request was successful.

Potential Pitfalls: the group ID and artifact ID syntax are very particular, and despite what other sources say, the artifact ID often cannot contain capital letters. The artifact ID has to be unique among previously published solutions, unless those solutions are first deleted in the SC portal. The "createdBy" field does not need to be a valid ThingWorx username, and most of the parameters given here are required fields.
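A script can catch the capital-letter pitfall before the POST is ever sent. The sketch below is an assumption-laden illustration, not the validation SC itself performs: the class name SolutionPrecheck is hypothetical, and it only checks that the three GAV parts are non-empty and that the artifact ID has no capital letters.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;

/** Hypothetical pre-flight check run before creating a solution. */
final class SolutionPrecheck {

    /** Returns a list of problems; an empty list means nothing obvious is wrong. */
    static List<String> problems(String groupId, String artifactId, String version) {
        List<String> found = new ArrayList<>();
        if (isBlank(groupId)) found.add("groupId is empty");
        if (isBlank(artifactId)) found.add("artifactId is empty");
        if (isBlank(version)) found.add("version is empty");
        // The article notes that the artifact ID often cannot contain capital letters
        if (artifactId != null && !artifactId.equals(artifactId.toLowerCase(Locale.ROOT))) {
            found.add("artifactId contains capital letters");
        }
        return found;
    }

    private static boolean isBlank(String s) {
        return s == null || s.trim().isEmpty();
    }

    public static void main(String[] args) {
        System.out.println(problems("org.ptc", "SampleExtProject", "1.0.0"));
    }
}
```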

3. PUT the files into the project

$RESULT=$(curl -v --location --request PUT "https://<your_sc_url>/sc/api/solutions/org.ptc:somethingelseoriginal12345:1.0.0/files" `
    --header "Authorization: Bearer $ACCESS_TOKEN" `
    --header 'Accept: application/json' `
    --header 'x-sc-primary-file:true' `
    --header 'Content-MD5:08a0e49172859144cb61c57f0d844c93' `
    --header 'x-sc-filename:SampleTwxExtension.zip' `
    --data-binary "@SampleTwxExtension.zip"
)

$RESULT=$(curl --location --request PUT "https://<your_sc_url>/sc/api/solutions/org.ptc:somethingelseoriginal12345:1.0.0/files" `
    --header "Authorization: Bearer $ACCESS_TOKEN" `
    --header 'Accept: application/json' `
    --header 'Content-MD5:fa1269ea0d8c8723b5734305e48f7d46' `
    --header 'x-sc-filename:SampleTwxExtension.sha' `
    --data-binary "@SampleTwxExtension.sha"
)

This is really TWO requests, because both the archive of source files and its hash have to be sent to Solution Central for verifying authenticity. In addition to the hash file being sent separately, the MD5 checksum on both the source file archive and the hash has to be provided, as shown here with the header parameter "Content-MD5". This will be a unique hex string that represents the contents of the file, and it will be calculated by Azure as well to ensure the file contains what it should.

There are a few ways to calculate the MD5 checksums and the hash: scripts can be created which use built-in Windows tools like certutil to run a few commands and manually save the hash string to a file:

certutil -hashfile SampleTwxExtension.zip MD5
certutil -hashfile SampleTwxExtension.zip SHA256
# By some means, save this SHA value to a file named SampleTwxExtension.sha
certutil -hashfile SampleTwxExtension.sha MD5

Another way is to use Java to generate the SHA file and calculate the MD5 values:

import java.io.File;
import java.io.FileInputStream;
import java.io.FileWriter;
import java.io.IOException;
import org.apache.commons.codec.digest.DigestUtils;

public class Main {
    private static String pathToProject = "C:\\Users\\vfirewind\\eclipse-workspace\\SampleTwxExtension\\build\\distributions";
    private static String fileName = "SampleTwxExtension";

    public static void main(String[] args) {
        String zip_filename = pathToProject + "\\" + fileName + ".zip";
        String sha_filename = pathToProject + "\\" + fileName + ".sha";
        File zip_file = new File(zip_filename);

        // Calculate the MD5 of the zip file
        try (FileInputStream zip_is = new FileInputStream(zip_file)) {
            String md5_zip = DigestUtils.md5Hex(zip_is);
            System.out.println("------------------------------------");
            System.out.println("Zip file MD5: " + md5_zip);
            System.out.println("------------------------------------");
        } catch (IOException e) {
            System.out.println("[ERROR] Could not calculate MD5 on zip file named: " + zip_filename + "; " + e.getMessage());
            e.printStackTrace();
        }

        // Open a fresh stream: the one used for the MD5 has already been read to the end,
        // so reusing it would hash empty input
        try (FileInputStream zip_is = new FileInputStream(zip_file)) {
            // Calculate the hash of the zip and write it to a file
            String sha = DigestUtils.sha256Hex(zip_is);
            File sha_output = new File(sha_filename);
            try (FileWriter fout = new FileWriter(sha_output)) {
                fout.write(sha);
            }
            System.out.println("[INFO] SHA: " + sha + "; written to file: " + fileName + ".sha");

            // Now calculate MD5 on the hash file
            try (FileInputStream sha_is = new FileInputStream(sha_output)) {
                String md5_sha = DigestUtils.md5Hex(sha_is);
                System.out.println("------------------------------------");
                System.out.println("SHA file MD5: " + md5_sha);
                System.out.println("------------------------------------");
            }
        } catch (IOException e) {
            System.out.println("[ERROR] Could not calculate MD5 on file name: " + sha_filename + "; " + e.getMessage());
            e.printStackTrace();
        }
    }
}

This method requires a third-party library, Apache Commons Codec. Be sure to add it not just to the class path for the Java project but, if building as part of a ThingWorx extension, to the build.gradle file as well:

repositories {
    mavenCentral()
}

dependencies {
    compile fileTree(dir:'twx-lib', include:'*.jar')
    compile fileTree(dir:'lib', include:'*.jar')
    compile 'commons-codec:commons-codec:1.15'
}

Potential Pitfalls: Solution Central will only accept MD5 values provided in hex, not base64. No file paths appear in the requests because the archive file and its hash file were in the same folder as the cURL scripts. The @ syntax is very particular: it tells cURL to read the contents of the named file and upload them to SC, rather than sending the file name itself as a string, and in PowerShell the argument has to be quoted as shown. Every time the source files are rebuilt, the MD5 and SHA values need to be recalculated, which is why scripting this process is recommended.
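The hex versus base64 distinction is easy to get wrong, because both are encodings of the same 16-byte digest. The sketch below uses only the Java standard library to print both forms for the same input. The class name Md5Formats is hypothetical, and the empty input is used purely for illustration.

```java
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.util.Base64;

/** Illustrates the two common text encodings of one MD5 digest. */
final class Md5Formats {

    /** Returns the raw 16-byte MD5 digest of the given data. */
    static byte[] md5(byte[] data) {
        try {
            return MessageDigest.getInstance("MD5").digest(data);
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException("MD5 is not available in this JVM", e);
        }
    }

    /** Lowercase hex, 32 characters: the form the article says SC accepts. */
    static String toHex(byte[] digest) {
        StringBuilder sb = new StringBuilder(digest.length * 2);
        for (byte b : digest) {
            sb.append(String.format("%02x", b));
        }
        return sb.toString();
    }

    /** Base64, 24 characters: the form the article says SC rejects. */
    static String toBase64(byte[] digest) {
        return Base64.getEncoder().encodeToString(digest);
    }

    public static void main(String[] args) {
        byte[] digest = md5("".getBytes(StandardCharsets.UTF_8));
        System.out.println("hex:    " + toHex(digest));    // d41d8cd98f00b204e9800998ecf8427e
        System.out.println("base64: " + toBase64(digest)); // 1B2M2Y8AsgTpgAmY7PhCfg==
    }
}
```

If a tool reports a 24-character value ending in "==", it is most likely the base64 form and needs converting before it goes into the Content-MD5 header used here.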

4. Do another PUT request to publish the project

$RESULT=$(curl --location --request PUT "https://<your_sc_url>/sc/api/solutions/org.ptc:somethingelseoriginal12345:1.0.0/publish" `
    --header "Authorization: Bearer $ACCESS_TOKEN" `
    --header 'Accept: application/json' `
    --header 'Content-Type: application/json' `
    -d '"{\"publishedBy\": \"vfirewind\"}"'
)

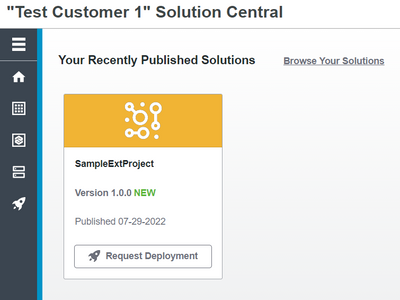

The published by parameter is necessary here, but it does not have to be a valid ThingWorx user for the request to work. If this request is successful, then the solution will show up as published in the SC Portal:

Other Pitfalls

Remember that for this process to work, the extensions within the source file archive must contain certain identifiers. The group ID, artifact ID, and version have to be consistent across a couple of files in each extension: the metadata.xml file for the extension and the project.xml file which specifies which projects the extensions belong to within ThingWorx. If any of this information is incorrect, the final PUT to publish the solution will fail.

Example Metadata File:

<?xml version="1.0" encoding="UTF-8"?>
<Entities>
    <ExtensionPackages>
        <ExtensionPackage
            artifactId="somethingoriginal12345"
            dependsOn=""
            description=""
            groupId="org.ptc"
            haCompatible="false"
            minimumThingWorxVersion="9.3.0"
            name="SampleTwxExtension"
            packageVersion="1.0.0"
            vendor="">
            <JarResources>
                <FileResource
                    description=""
                    file="sampletwxextension.jar"
                    type="JAR"></FileResource>
            </JarResources>
        </ExtensionPackage>
    </ExtensionPackages>
    <ThingPackages>
        <ThingPackage
            className="SampleTT"
            description=""
            name="SampleTTPackage"></ThingPackage>
    </ThingPackages>
    <ThingTemplates>
        <ThingTemplate
            aspect.isEditableExtensionObject="false"
            description=""
            name="SampleTT"
            thingPackage="SampleTTPackage"></ThingTemplate>
    </ThingTemplates>
</Entities>

Example Projects XML File:

<?xml version="1.0" encoding="UTF-8"?>
<Entities>
    <Projects>
        <Project
            artifactId="somethingoriginal12345"
            dependsOn="{&quot;extensions&quot;:&quot;&quot;,&quot;projects&quot;:&quot;&quot;}"
            description=""
            documentationContent=""
            groupId="org.ptc"
            homeMashup=""
            minPlatformVersion=""
            name="SampleExtProject"
            packageVersion="1.0.0"
            projectName="SampleExtProject"
            publishResult=""
            state="DRAFT"
            tags="">
        </Project>
    </Projects>
</Entities>
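Because a mismatch between these two files only surfaces at the final publish step, a build script can compare them up front. The following is a rough sketch using the JDK's built-in XML parser. The class name GavConsistency is hypothetical, and it assumes the identifiers live in the groupId, artifactId, and packageVersion attributes of the first ExtensionPackage and Project elements, as in the examples above.

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.StandardCharsets;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Element;

/** Hypothetical check that two entity XML files agree on their GAV identifiers. */
final class GavConsistency {

    /** Reads groupId:artifactId:packageVersion from the first element with the given tag. */
    static String gavOf(String xml, String tagName) throws Exception {
        Element el = (Element) DocumentBuilderFactory.newInstance()
                .newDocumentBuilder()
                .parse(new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)))
                .getElementsByTagName(tagName)
                .item(0);
        if (el == null) {
            throw new IllegalArgumentException("No <" + tagName + "> element found");
        }
        return el.getAttribute("groupId") + ":" + el.getAttribute("artifactId")
                + ":" + el.getAttribute("packageVersion");
    }

    /** True when the metadata file and the projects file carry the same GAV. */
    static boolean consistent(String metadataXml, String projectXml) throws Exception {
        return gavOf(metadataXml, "ExtensionPackage").equals(gavOf(projectXml, "Project"));
    }

    public static void main(String[] args) throws Exception {
        // Trimmed-down stand-ins for the two example files
        String metadata = "<Entities><ExtensionPackages><ExtensionPackage groupId=\"org.ptc\""
                + " artifactId=\"somethingoriginal12345\" packageVersion=\"1.0.0\"/>"
                + "</ExtensionPackages></Entities>";
        String project = "<Entities><Projects><Project groupId=\"org.ptc\""
                + " artifactId=\"somethingoriginal12345\" packageVersion=\"1.0.0\"/>"
                + "</Projects></Entities>";
        System.out.println("GAV consistent: " + consistent(metadata, project));
    }
}
```

In a real build, the two strings would be read from the metadata and project XML files inside the extension archive rather than written inline.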

Another major issue is that requests often fail with a 500 error and no message. The server logs often contain more details, and PTC can review them internally if a support case is opened. Common causes of 500 errors include missing values for required parameters, invalid characters in the parameter strings, and an API URL that is not the correct endpoint for the type of request. Another major cause of 500 errors is providing MD5 or hash values that are not valid (a mismatch produces a different error, a 400).

Another common error is the 400 error, which happens if any of the code that SC uses to parse the request breaks. A 400 error will also occur if the files are not being opened or uploaded correctly due to some issue with the @ syntax (mentioned above). Another common 400 error is a mismatch between the provided MD5 value for the zip or SHA file and the one calculated by Azure ("message: Md5Mismatch"), which can indicate that the content of the upload was corrupted, or simply that the MD5 values aren't being calculated correctly. cURL will often report that a file is 100% uploaded even when the upload is incomplete, errors appear in the console, or the uploaded file is smaller than it should be. One thing worth checking in that case: when reading from a file, cURL's -d option strips carriage returns and newlines, whereas --data-binary sends the bytes unmodified.
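When these requests run from an automated script, the status codes discussed in this article can be turned into quick hints for whoever reads the logs. The sketch below is only an illustration: the class name ScErrorTriage is hypothetical, and the hints merely restate the causes described in this article rather than anything SC itself returns.

```java
/** Hypothetical lookup from HTTP status to the likely causes described in this article. */
final class ScErrorTriage {

    /** Returns a short troubleshooting hint for a status code returned by the SC API. */
    static String hint(int status) {
        switch (status) {
            case 201:
                return "Created: the solution was created successfully.";
            case 400:
                return "Bad request: check the request syntax, the @ file argument, "
                        + "and the Content-MD5 values (Md5Mismatch).";
            case 404:
                return "Not found: files cannot be downloaded until the solution is published.";
            case 500:
                return "Server error: check for missing required parameters, invalid characters, "
                        + "a wrong endpoint, or invalid MD5/hash values.";
            default:
                return "No hint recorded for this status; rerun the request with -v for more detail.";
        }
    }

    public static void main(String[] args) {
        System.out.println(hint(400));
    }
}
```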

Conclusion

Debugging with cURL can be a challenge. Note that adding "-v" to a cURL command provides additional information, such as the number of bytes in each request and a reprint of the parameters to ensure they were read correctly. Even so, it isn't always possible for SC to indicate the real cause of an issue. There are many things that can go wrong in this process, but when it goes right, it goes very right. The SC API can be entirely scripted and automated, allowing for seamless inclusion of externally developed tools into a mature DevOps process.