How to call values from a table and then do the minerr() calculation?


Hi:

This is the reply I got from VladimirN. in the Creo community:

------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

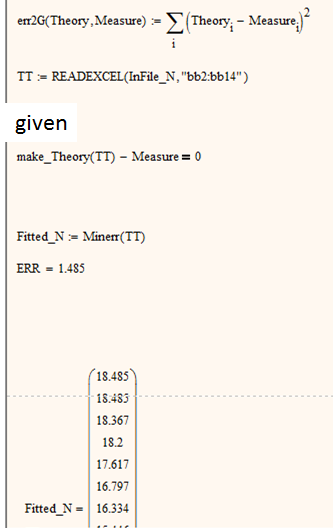

This is a built-in Mathcad function. Minerr(var1, var2, ...) - returns the values of var1, var2, ..., coming closest to satisfying a system of equations and constraints in a Solve Block. Returns a scalar if only one argument, otherwise returns a vector of answers. If Minerr cannot converge, it returns the results of the last iteration.
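Here is a Python sketch of the kind of computation a least-squares solver like Minerr performs with the Levenberg-Marquardt method (Minerr's default, as noted later in the thread). The unknowns form one parameter vector, and the solver minimizes the sum of squared residuals. Like Minerr, it returns the last iterate even if it has not converged. The names `minerr_like` and `solve_linear`, the finite-difference step and the damping schedule are illustrative assumptions, not Mathcad's internal implementation.

```python
import math


def solve_linear(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = m[i][n] - sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = s / m[i][i]
    return x


def minerr_like(residuals, guess, max_iter=200):
    """Levenberg-Marquardt sketch: minimize sum(r**2) over the parameter vector p."""
    p = list(guess)
    lam = 1e-3                                   # damping factor (illustrative)
    r = residuals(p)
    sse = sum(v * v for v in r)
    for _ in range(max_iter):
        n, k = len(p), len(r)
        # forward-difference Jacobian, stored by column: jac[j][i] = dr_i / dp_j
        jac = []
        for j in range(n):
            h = 1e-7 * max(1.0, abs(p[j]))       # step relative to the parameter
            q = list(p)
            q[j] += h
            rq = residuals(q)
            jac.append([(rq[i] - r[i]) / h for i in range(k)])
        # damped normal equations: (J^T J + lam * I) delta = -J^T r
        jtj = [[sum(jac[a][i] * jac[b][i] for i in range(k)) for b in range(n)]
               for a in range(n)]
        jtr = [sum(jac[a][i] * r[i] for i in range(k)) for a in range(n)]
        for a in range(n):
            jtj[a][a] += lam
        delta = solve_linear(jtj, [-v for v in jtr])
        trial = [p[j] + delta[j] for j in range(n)]
        r_trial = residuals(trial)
        sse_trial = sum(v * v for v in r_trial)
        if sse_trial < sse:                      # accept: trust the linear model more
            p, r, sse = trial, r_trial, sse_trial
            lam /= 10
            if max(abs(d) for d in delta) < 1e-12:
                break
        else:                                    # reject: behave more like gradient descent
            lam *= 10
    return p                                     # last iterate, converged or not


# Usage: fit y = a * exp(b * x) with the unknowns packed in one vector p = [a, b]
xs = [0.0, 0.5, 1.0, 1.5, 2.0]
ys = [2.0 * math.exp(0.5 * x) for x in xs]       # synthetic "measurement"


def residuals(p):
    a, b = p
    return [a * math.exp(b * x) - y for x, y in zip(xs, ys)]


fit = minerr_like(residuals, [1.0, 0.1])
print(fit)
```

The unknowns are handled as a single vector `p`, which is the same idea as passing a vector of unknowns to a solve block.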

-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

I have two questions:

1) As I read the minerr() documentation, var1, var2, ... should be either integers or complex numbers.

Can var1, var2 be vectors?

2) Is there any way to take var1, var2 from a separate table and then put them into minerr()?


Labels: Statistics_Analysis

---

Hi:

Thanks for the reply.

I doubted that such a long calculation time was plausible, but you showed that it is, given this code structure.

I guess what you mean is: if I follow your suggestion, the calculation time will be about 5.5 hr (five and a half).

I have modified the code as you suggested and will see what happens.

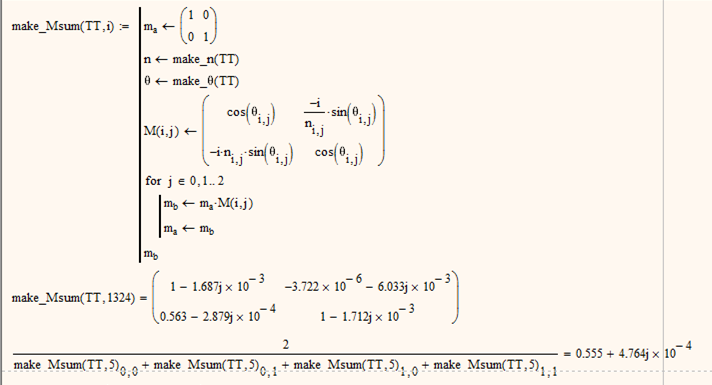

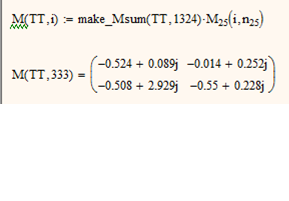

In my code, make_MSum(TT,i) returns a 2x2 matrix. M25 has to be an additional, independent 2x2 matrix. Therefore, I form M(TT,i) by multiplying them. Then make_theory_t(TT) calculates the sum of all elements.

In my previous simulation, I used 2 matrices and 100 elements (i). At that time, calculating one element took 30 s. My confusion was: if one element takes just 30 s, how come make_theory_t(TT) (i = 100 elements) takes such a long time (even > 1 day)? I guess the reason is the code structure, as you mentioned (make_MSum() would be a rather lengthy routine using make_n()).

You mentioned "There seems to be no reason making theta25 or M25 a function of n25."

The concept of my code is:

1) Define initial TTs

2) The initial TTs look up the database in the file to form theta25 and n25.

3) theta25 and n25 form M(TT,i), which is used to calculate make_theory_t(TT).

4) Minerr(TT) determines the TTs for the next run.

5) Repeat steps 2-4 until the TTs with minimum Err are determined, and return them.

The goal is to find the TTs whose make_theory_t(TT) is closest to measure_t.

I don't know whether there is any way to make theta25 or M25 not a function of n25, because they are connected in the equation. "i" has to be 1500 for my final goal, and each "i" has its own meaning. About your idea: if Mathcad can calculate a vector containing 1500 2x2 matrices, maybe we don't need to do it 1501 times.

So I guess my questions are:

1) Is there any way (a different code structure) to reduce the calculation time to something reasonable?

2) In my previous simulation (2 matrices and 100 elements (i)), the result (Fitted_N) is exactly the same as the initial TTs. Does the code really do its job (Minerr(TT) determines the TTs for the next run and repeats steps 2-4)?

If such a long calculation time is a "MUST" with Mathcad (I hope not), I may have to consider using other software (Matlab, Fortran, ...). I have to make sure of this first, because I have come to like Mathcad.

---

> I guess what you mean is: if I follow your suggestion, the calculation time will be about 5.5 hr (five and a half).

No, unfortunately 5 and a half days (25% of three weeks).

Calculation: 3 values took about one hour. For each value you call make_MSum() four times, so 12 calls of that function took 1 hour, which means that one call takes 5 minutes. With my modification one value needs just one call to make_MSum(), so 1500 values would need 1500 * 5 minutes = 7500 minutes = 125 hours = somewhat more than 5 days.
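The extrapolation above assumes that run time is proportional to the number of make_MSum() calls. A small Python sketch makes that assumption explicit (`estimate_runtime` is a hypothetical helper, not part of the worksheet):

```python
def estimate_runtime(calls_observed, hours_observed, calls_per_value, n_values):
    """Extrapolate the total run time in hours, assuming time per call is constant."""
    minutes_per_call = hours_observed * 60 / calls_observed
    return n_values * calls_per_value * minutes_per_call / 60


# observed: 3 values x 4 calls of make_MSum() took about 1 hour -> 5 minutes per call
four_calls = estimate_runtime(12, 1.0, calls_per_value=4, n_values=1500)
one_call = estimate_runtime(12, 1.0, calls_per_value=1, n_values=1500)
print(f"four calls per value: {four_calls:.0f} h = {four_calls / 24:.1f} days")
print(f"one call per value:   {one_call:.0f} h = {one_call / 24:.1f} days")
```

Four calls per value give 500 hours (roughly three weeks). One call per value gives 125 hours, a quarter of that.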

The problem was (and still is, as I see from your screenshot) that you call make_MSum() four times for every i, just to get the four elements and sum them up. It would make more sense to put the summing into make_MSum(), or at least to call it only once (hence the 25%), store the resulting matrix in a variable and take the four elements from there.
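Counting calls shows the difference between calling the routine once per element and once per i. In this Python sketch, `make_msum` is a hypothetical stand-in for make_MSum(). Only the call pattern matters, not the matrix it returns.

```python
calls = {"make_msum": 0}


def make_msum(tt, i):
    """Hypothetical stand-in for the expensive make_MSum(TT, i); returns a 2x2 matrix."""
    calls["make_msum"] += 1
    return [[tt + i, 1.0], [2.0, tt * i]]


def theory_four_calls(tt, n_points):
    # one expensive call per matrix element, i.e. four calls for every i
    return [make_msum(tt, i)[0][0] + make_msum(tt, i)[0][1]
            + make_msum(tt, i)[1][0] + make_msum(tt, i)[1][1]
            for i in range(n_points)]


def theory_one_call(tt, n_points):
    result = []
    for i in range(n_points):
        m = make_msum(tt, i)                     # call once, keep the matrix
        result.append(m[0][0] + m[0][1] + m[1][0] + m[1][1])
    return result


slow = theory_four_calls(3.0, 100)
print(calls["make_msum"])                        # 400 calls
calls["make_msum"] = 0
fast = theory_one_call(3.0, 100)
print(calls["make_msum"])                        # 100 calls
```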

> In my code, make_MSum(TT,i) returns a 2x2 matrix. M25 has to be an additional, independent 2x2 matrix. Therefore, I form M(TT,i) by multiplying them. Then make_theory_t(TT) calculates the sum of all elements.

I must confess that in the meantime I am too confused to be able to follow. At the very beginning you talked about selecting five "optimal" values for whatever out of a given set. Now we are varying (all?) 26 values.

Then you reported that to get the final matrix M you will have to multiply 26 different matrices - now in your last screenshot you are multiplying just three.

> In my previous simulation, I used 2 matrices and 100 elements (i). At that time, calculating one element took 30 s. My confusion was: if one element takes just 30 s, how come make_theory_t(TT) (i = 100 elements) takes such a long time (even > 1 day)?

Because you obviously also changed other things. Without the worksheet that's not possible to tell, and I don't understand why you now have just two matrices. If 26 are necessary, they would be necessary no matter how many data points you use, or am I missing something?

> You mentioned "There seems to be no reason making theta25 or M25 a function of n25."

What I wanted to say is that n25 does not need to be a parameter of that function, just i.

> About your idea: if Mathcad can calculate a vector containing 1500 2x2 matrices, maybe we don't need to do it 1501 times.

Sure Mathcad could create that kind of structure.

What I see from your picture is that you call make_n() and make_theta() for every i, which is not necessary. There surely is room for improvement here, and it would probably make a difference whether these routines are called only once for every set of TT or 1501 times!
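The same idea applies to make_n() and make_theta(). Anything that depends only on TT can be computed once per TT set and reused for every i. The Python sketch below assumes, hypothetically, that both routines depend only on TT. The bodies of `make_n`, `make_theta` and `element` are placeholders, not the worksheet's formulas.

```python
import math

counter = {"make_n": 0, "make_theta": 0}


def make_n(tt):
    """Hypothetical stand-in that depends only on the parameter set TT."""
    counter["make_n"] += 1
    return [0.5 * t for t in tt]


def make_theta(tt):
    """Hypothetical stand-in that depends only on the parameter set TT."""
    counter["make_theta"] += 1
    return [0.1 * t for t in tt]


def element(n, theta, i):
    """Placeholder for the per-point work that really depends on i."""
    return sum(nk * math.cos(th * i) for nk, th in zip(n, theta))


def theory_recompute(tt, n_points):
    # n and theta are rebuilt for every i although they do not depend on i
    return [element(make_n(tt), make_theta(tt), i) for i in range(n_points)]


def theory_hoisted(tt, n_points):
    n, theta = make_n(tt), make_theta(tt)        # once per TT set
    return [element(n, theta, i) for i in range(n_points)]


tt = [1.0, 2.0, 3.0]
slow = theory_recompute(tt, 1501)
counts_slow = dict(counter)                      # 1501 calls of each routine
counter.update(make_n=0, make_theta=0)
fast = theory_hoisted(tt, 1501)
counts_fast = dict(counter)                      # 1 call of each routine
```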

> So I guess my questions are:
>
> 1) Is there any way (a different code structure) to reduce the calculation time to something reasonable?

I am sure there are ways, but I don't think it's possible to help further without having the full sheet and data file.

> 2) In my previous simulation (2 matrices and 100 elements (i)), the result (Fitted_N) is exactly the same as the initial TTs. Does the code really do its job (Minerr(TT) determines the TTs for the next run and repeats steps 2-4)?

Hard to say without the sheet. If everything is set up correctly, Minerr should do its job. Change the initial TTs to somewhat "wronger" values and see for yourself whether something changes. Just look at what value the fit function has (use the simple difference, not the sum of squares).

---

Hi:

I think it's okay to share the worksheet without the data; the attached file is the code.

I answered your questions in the file (in blue text).

Unless the long calculation time can be solved, it's hard to know whether this concept/code is workable.

I guess the calculation time can be much shorter if a vector containing 1500 2x2 matrices can be used and the issue "you call make_n() and make_theta() for every i, which is not necessary" is solved.

Would you mind giving hints on the two points above?

Or

Do you know the possible calculation time if the code is highly optimized?

Based on your suggestion, I guess the functions from make_MSum(TT,i) to make_Theory_t(TT) should be merged into one function to reduce the number of function calls.

---

See if the attached will help.

Otherwise I would need the data, too, as it's not easy doing it all "blindfolded" in a sheet which consists of nothing but errors due to undefined variables and functions.

Maybe calculation time could be further tuned but this may be a matter of trial and error and would require a working sheet.

---

Here is a more compact version; I can't say if it's quicker.

For comments, see the version in my last post.

---

many many thanks!!

The code looks beautiful.

I am running it to check the calculation time.

---

> I am running it to check the calculation time.

Hopefully less than 5.5 days.

Maybe try it first with a smaller number of data points rather than the 1501.

It may be that you have to replace "m" with "M" to avoid a unit conflict. I didn't bother creating my own test data file to be able to make a test run.

---

Thanks!!

I was thinking the same thing and reduced the data points to 200 to measure the calculation time.

By the way, in the file I saw these two equations.

Do you mean it's better to have these two instead of just the latter (original) one?

Now my running code contains these two equations...

---

which two equations?

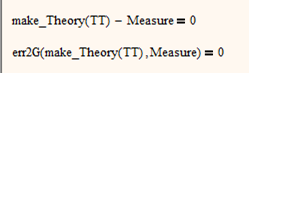

areou talking about the solve block? plese notice that one equ is disabled (the blck square at the upper right is the indicator for it)

---

Hi

Got it... Your are saying err2G can be removed.

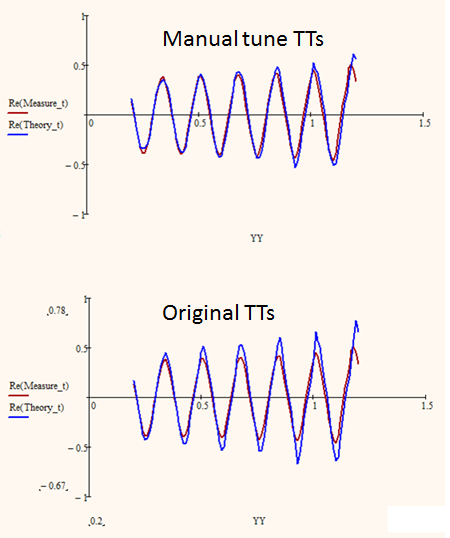

I have tried 25 variable 200 elements yesterday and at least I know it can be finished in 8 hrs.

The result is in attached file.

Then I tried 13 variable 100 elements and the calcualtion time is just 10 seconds (weird but super fast).

The result is also in attached file.

I think 13 variable 100 elements is good for the test.

However, as you can see in the file, Fitted_N are all the same as initial TTs.

I am wondering whether the code really does its job.

It is because in the below figure a closer overlap can be achieved by manually tuning TTs (upper plot).

The goal is to achieve a well overlopped "t" like this by tuning TTs.

Do you have any suggestion about the code? Is there any way to check the minerr()?

---

Doesn't look like it would work.

Your TT values have a rather large magnitude, while the t-values you compare them with are rather small. I could imagine that this is a problem for the numeric solver.

Maybe you could scale your TT values down to a smaller magnitude range.
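One common way to do this is to let the solver vary log10(TT) instead of TT and convert back inside the residual function. The unknowns are then of order 14 to 19 rather than 10^14 to 10^19. A minimal Python sketch, assuming all TT values are positive. `to_log`, `from_log` and `make_scaled_residuals` are hypothetical helper names.

```python
import math


def to_log(tt):
    """Physical parameters (e.g. 1e14..1e19) -> exponents (14..19); needs tt > 0."""
    return [math.log10(t) for t in tt]


def from_log(p):
    """Exponents -> physical parameters."""
    return [10.0 ** v for v in p]


def make_scaled_residuals(residuals_tt):
    """Wrap a residual function of TT so that a solver can vary log10(TT) instead."""
    def residuals_p(p):
        return residuals_tt(from_log(p))
    return residuals_p


guess_tt = [1e14, 3.2e16, 1e19]
p0 = to_log(guess_tt)                            # what the solver would see
print([round(v, 3) for v in p0])
```

The solver result is converted back with `from_log`. Every parameter then moves on a comparable scale regardless of its magnitude.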

Not sure if that would help, though.

---

Hi:

I have scaled down TTs by using log() and the result is the same (Fitted_N=initial TTs).

My t-values are between -1 and 1.

Do you think the magnitude is still too large?

I am also curious why the calculation is so fast now (13 variables, 100 elements => 30 s vs. 25 variables, 200 elements => 2-7 hrs).

As I remember (from the documentation), minerr() should do 500(?) iterations and then return whatever the final value is.

I expected the process to take a few minutes instead of 30 s.

Do you think there is something wrong inside the code or this is the way it is?

---

> Do you think the magnitude is still too large?

I am no specialist concerning numerical methods, so I don't know exactly how the various algorithms will behave. Scaling was just an idea out of the blue, as I could imagine that varying a very large number to achieve a specific (maybe small) difference would not change the outcome much. So the guess was that Mathcad thinks it has reached convergence.

I think Richard had elaborated a bit on the LM algorithm in this post about a month ago.

Things you could try:

- Decrease the values of TOL and CTOL (standard is 10^-3) to

- choose another algorithm (right-click the word minerr)

- use Find() instead of MinErr (be aware that Find() may tell you that it didn't find a solution, while MinErr gives a result in any case)

- maybe you could even use minimize()?

> I am also curious why the calculation is so fast now (13 variables, 100 elements => 30 s vs. 25 variables, 200 elements => 2-7 hrs).

I can imagine that every new parameter MinErr has to vary could lengthen the calculation time significantly. Also, I would suspect that the change from 100 to 200 elements just doubles the time, BUT I may be wrong.

So if each new variable doubled the time, going from 13 variables/100 elements (30 s) to 25 variables/200 elements would give 30 s * 2^12 * 2, i.e. about 2.8 days!

If each new variable added just 50% calculation time, we would get 30 s * 1.5^12 * 2, i.e. about 2.2 hours.

But be aware that all these calculations are pure gambling; I don't know if they are reasonable at all.
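A short Python sketch reproduces the two guesses above. The growth factors per variable (2 and 1.5) and the linear scaling with the number of elements are the assumptions stated in the text, not measurements.

```python
def extrapolate(base_seconds, extra_vars, factor_per_var, points_factor):
    """Scale a measured run time by an assumed growth factor per extra variable."""
    return base_seconds * factor_per_var ** extra_vars * points_factor


base = 30.0                                      # 13 variables, 100 elements
extra = 25 - 13                                  # 12 additional variables
doubling = extrapolate(base, extra, 2.0, 200 / 100)
plus_half = extrapolate(base, extra, 1.5, 200 / 100)
print(f"doubling per variable: {doubling / 86400:.1f} days")
print(f"+50% per variable:     {plus_half / 3600:.1f} hours")
```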

---

Another weird thing is that:

In the previous reply, I showed you the result from the V2 code.

When I replaced it with the V3 code, I ran into the issue below.

But I think maybe V3 code won't make a difference.

---

I think there are errors in my V3 sheet. That doesn't surprise me, as without sample data all I see in the sheet are errors.

The error message in a solve block is usually meaningless, as it's the same no matter what the real cause is. You can trace it by right-clicking the red expression and then choosing "Trace Error". Clicking "First" should then bring you to the real cause of the failure and give you a better error message.

What I spotted upon looking again at my V3 sheet is the following:

1) all "m" (with exception of the meter in the definition of theta) should be replaced by "M".

2) the line "for j elementof 0..last(n)" should be changed to "for j elementof 0..last(TT)"

I used n in V2 but we do not calculate a vector n in V3, so this may have caused the error (and the error message was even correct then)

---

I keep using V2 code since it works okay in minerr().

About your suggestions, can you give me a hint of dealing with vector "f" using minimize()?

Things you could try:

- Decrease the values of TOL and CTOL (standard is 10^-3) to

==>I have change TOL/CTOL from 1 to 1E-6. The results are all the same.

- chose another algorithm (right click the word minerr)

Only LMA can be used to obtain the result.

==>In the non-linear algorithm, data is too big to compute (from the error message) for other two settings (Newtown and Conju..).

==>Linear algorithm shows another error message. I didn't go deeper

- use Find() instead of MinErr (be aware that Find() may tell you that it didn't found a solution while MinErr gives a result in any case.

==> I don't think we can get an exact solution (find a 13 variable set to satsify 100 elements). That's why we choose minerr(), trying to find a minimum error one.

- maybe you could even use minimize()?

==> I read the instruction but cannot write it correct. The instruction usually use a simple equation as an example.

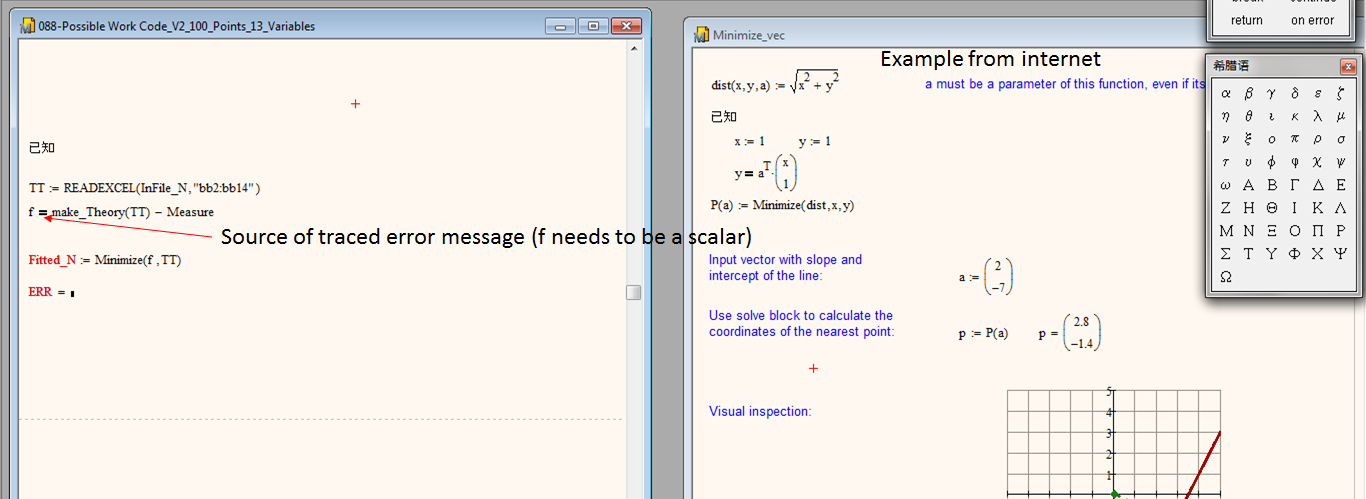

But my cause is to deal with a vector (100 elements). I traced it back and the message said that "f" has to be a scalar.

---

I think V3 should run a bit quicker, but I wouldn't expect a dramatic improvement. On the other hand, you would only have to delete three routines and copy in one.

I think you are right about the usage of Find().

Regarding minimize: you have to use f(TT):=make_Theory(TT)-Measure! It has to be a function of all solve variables, and you have to use an assignment, not a boolean comparison.

I don't know if it's mandatory to move the function definition before the solve block, but I would do so.

Possibly it's not necessary to put the definition of the guess values IN the block as in the example; I guess you can leave it before the "Given".

---

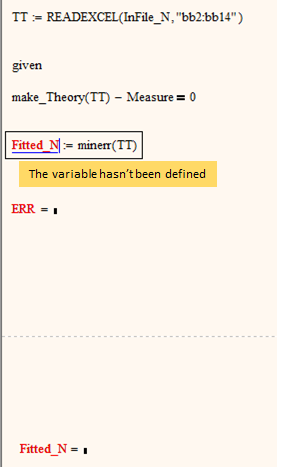

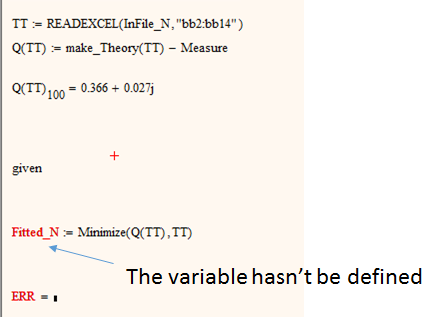

Thanks!!

I tried the suggestion, and the message shows an undefined variable.

The idea of minimize() is also to find a set of 13 TTs which makes the 100 elements close to zero.

I can't see the difference from minerr().

However, doesn't it need a more complicated code structure (a loop to deal with the 100 elements)?

---

> I tried the suggestion, and the message shows an undefined variable.

Did you use Trace Error to see what the error is?

You may omit the keyword Given, as you have no constraints.

The LM algorithm is not available with minimize, I think.

> However, doesn't it need a more complicated code structure (a loop to deal with the 100 elements)?

You could use the routine where you sum the squared residuals instead.
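Minimize needs a scalar objective, so the vector of residuals has to be reduced to a single number, for example the sum of squared residuals. Here is a minimal Python sketch of that reduction. `make_objective` and the stand-in model are hypothetical. `abs(r) ** 2` is used so the same code also works for complex residuals.

```python
def sum_of_squares(residual_vector):
    """Scalar objective: sum of |r|^2, which also works for complex residuals."""
    return sum(abs(r) ** 2 for r in residual_vector)


def make_objective(make_theory, measure):
    """Turn a vector-valued fit into the scalar f(TT) that a minimizer needs."""
    def f(tt):
        theory = make_theory(tt)                 # evaluate the model only once
        return sum_of_squares(t - m for t, m in zip(theory, measure))
    return f


# hypothetical model: theory_k = tt[0] * x_k
xs = [1.0, 2.0, 3.0]
measure = [2.0, 4.0, 6.0]
f = make_objective(lambda tt: [tt[0] * x for x in xs], measure)
print(f([2.0]), f([1.0]))
```

No explicit loop over the elements is needed in the solve block itself. The summation lives inside the objective function.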

---

In MC15 (and 12, 13, and 14), solve blocks give only one error message, regardless of what the problem really is. That message could mean anything, including the possibility that the solver simply failed to converge to a solution.

---

Space is getting tight because of the indentation, as the thread is growing rapidly, so I replied to the first post.

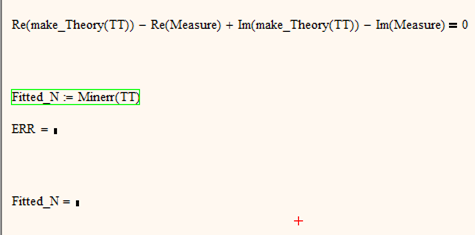

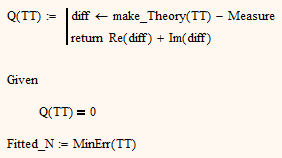

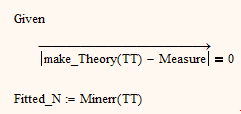

I have verified that minerr and minimize work with vector arguments. Minimize, however, requires a function returning a scalar rather than a vector. This is best achieved by defining Q(TT):=|make_Theory(TT)-Measure|, which also gets rid of any imaginary elements.

Don't know if MinErr would have problems with imaginary elements.

I created a sheet (using a real signal only, but you may expand it if you like) to show that all methods (Minerr, Find, minimize) work with a setup similar to yours. I generate a signal depending on a selectable number of parameters (amplitudes, corresponding to your TT), sample a selectable number of data points (Measure), add some noise and try to find the best fit. Find() will only return a result if no noise is added, so that a perfect fit is possible.

MinErr and Minimize basically return similar results. That's interesting, as MinErr uses the LM algorithm by default and Minimize defaults to CG (LM is not available there). If I force MinErr to use CG, the results are inferior. Maybe that's because of the limited number of iterations MinErr will do; you wrote it's 500, but I was not able to find a number for that limit!? With higher numbers of amplitudes and data points, Minimize takes MUCH longer than MinErr.

Anyway, here is the sheet to play with. Add complex parts if you like but at least its a template how it could/should and does work 😉

And even with 25 amplitudes and 2000 data points it's very quick.
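The worksheet itself is not reproduced here, so the following Python sketch mimics the described template under my own assumptions. The signal is a sum of sine basis functions weighted by the amplitudes, sampled on a grid, with optional Gaussian noise. Because this model is linear in the amplitudes, the least-squares fit can be solved directly from the normal equations; a nonlinear solver such as Minerr would head for the same minimum. All names are illustrative.

```python
import math
import random


def basis(k, x):
    """Assumed basis function number k: a sine of increasing frequency."""
    return math.sin((k + 1) * x)


def make_signal(amps, xs):
    return [sum(a * basis(k, x) for k, a in enumerate(amps)) for x in xs]


def solve_linear(a, b):
    """Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        x[i] = (m[i][n] - sum(m[i][j] * x[j] for j in range(i + 1, n))) / m[i][i]
    return x


def fit_amplitudes(xs, ys, n_amps):
    """Linear least squares via the normal equations (B^T B) a = B^T y."""
    cols = [[basis(k, x) for x in xs] for k in range(n_amps)]
    btb = [[sum(u * v for u, v in zip(cols[i], cols[j])) for j in range(n_amps)]
           for i in range(n_amps)]
    bty = [sum(u * y for u, y in zip(cols[i], ys)) for i in range(n_amps)]
    return solve_linear(btb, bty)


rng = random.Random(1)                           # fixed seed for repeatability
n_amps, n_points, noise = 5, 200, 0.01
true_amps = [rng.uniform(-1.0, 1.0) for _ in range(n_amps)]
xs = [2 * math.pi * i / n_points for i in range(n_points)]
clean = make_signal(true_amps, xs)
measure = [y + rng.gauss(0.0, noise) for y in clean]
fitted = fit_amplitudes(xs, measure, n_amps)
exact = fit_amplitudes(xs, clean, n_amps)        # no noise: a perfect fit exists
```

With the noise switched off, the fit is exact, which corresponds to the case where Find() also succeeds.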

At the end I added a timer to see how much time a selectable number of calls to make_Theory takes. You may do likewise in your sheet to find out where optimization would help most. Of course, the time will vary depending on the number of sample points and the number of amplitudes.

My machine is very lame and with 25 amplitudes and 2000 points it took about a minute for 1000 calls to make_Theory.

You may also add timing for the solve block to see what the influence of more parameters and more points is.
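A timing harness like the one described can be sketched in Python with `time.perf_counter()`. `make_theory_stub` is a hypothetical stand-in for make_Theory, so the absolute numbers say nothing about the real worksheet.

```python
import time


def time_calls(func, arg, n_calls):
    """Average wall-clock seconds per call of func(arg) over n_calls calls."""
    start = time.perf_counter()
    for _ in range(n_calls):
        func(arg)
    return (time.perf_counter() - start) / n_calls


def make_theory_stub(tt):
    """Hypothetical stand-in for make_Theory(TT): some work per data point."""
    return [sum(t * i for t in tt) for i in range(2000)]


per_call = time_calls(make_theory_stub, [0.1] * 25, 20)
print(f"{per_call * 1e3:.2f} ms per call")
```

Repeating the measurement while varying the number of parameters and the number of points separately shows which of the two drives the cost.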

Of course I would expect longer calculation times in your sheet as make_Theory involves calls to two interpolation routines and a series of matrix operations. But I am not sure if the long times you report are justified by this.

The main problem still is that in your sheet MinErr seems not to change your guess values while it looks like it tries hard to do so (because of the long calc time). Did you try to call your SSE function with the guess values and then again with slightly modified guess values? Did the SSE change? Still thinking it could be some kind of scaling problem - just a vague idea. I remember that Alan Stevens had helped on solve blocks here in the past and used some sort of scaling in his solutions once or twice.
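The check suggested here can be sketched in Python: evaluate the SSE at the guess and again with slightly modified guesses. The relative step of 1% and the toy SSE are assumptions. If perturbing a parameter leaves the SSE unchanged, the solver has no reason to move it.

```python
def sse_sensitivity(sse, guess, rel_step=0.01):
    """SSE at the guess and its change when each parameter is nudged by rel_step."""
    base = sse(guess)
    changes = []
    for j, g in enumerate(guess):
        trial = list(guess)
        trial[j] = g * (1 + rel_step) if g != 0 else rel_step
        changes.append(sse(trial) - base)
    return base, changes


# toy SSE: only the first parameter matters, the second is ignored by the "model"
def toy_sse(p):
    return (p[0] - 2.0) ** 2 + 0.0 * p[1]


base, changes = sse_sensitivity(toy_sse, [1.0, 5.0])
print(base, changes)   # a zero entry marks a parameter the SSE cannot "see"
```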

---

Hi:

Thanks a lot. I will read through the code in detail.

You made me wonder whether minerr() can handle complex numbers.

Now I think the answer is: No.

The code didn't work when using Q(TT):=|make_Theory(TT)-Measure|.

Using Q(TT):=make_Theory(TT)-Measure, the result came out in 30 s and was the same as the initial TTs (as we already know).

When I use Q(TT):=Re(make_Theory(TT))-Re(Measure), the result came out in 5 min and gave me a different set of TTs (close to my expectation).

When I use the code below, the result also came out in 5 min and gave me a different set of TTs (also close to my expectation).

Therefore I think minerr() cannot handle complex numbers.

Now I'll go back to trying 25 TTs and 200 elements and check how long it takes.

I have another question:

Is it possible to add the following restriction to minerr()?

None of the calculated TTs can be larger than the first TT (TT0) or smaller than the last TT (TT13).

As far as I know, we cannot steer the TTs determined by minerr().

I am asking for confirmation.

---

I've been on an extended business trip and only just got back to looking at this again.

minerr can handle complex numbers, but it does then require complex guesses. Were your guess values complex?

The code in your image is not correct. You do not want to add the residuals for the real and imaginary parts, because they could cancel. You should create a vector of residuals that contains both the real and imaginary residuals: stack(Re(make_Theory(TT))-Re(Measure), Im(make_Theory(TT))-Im(Measure))=0
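Here is a Python sketch of the stacked residual vector, written so that the model is evaluated only once per residual vector. Python list concatenation plays the role of Mathcad's stack(). `toy_theory` and the data are hypothetical.

```python
def stacked_residuals(theory, measure):
    """Real parts on top of imaginary parts: 2N real residuals that cannot cancel."""
    diff = [t - m for t, m in zip(theory, measure)]
    return [d.real for d in diff] + [d.imag for d in diff]


def make_residual_function(make_theory, measure):
    def residuals(tt):
        theory = make_theory(tt)                 # one model evaluation per call
        return stacked_residuals(theory, measure)
    return residuals


evaluations = [0]


def toy_theory(tt):
    """Hypothetical complex-valued model."""
    evaluations[0] += 1
    return [complex(tt[0], 2.0), complex(3.0, tt[1])]


r = make_residual_function(toy_theory, [0j, 1 + 1j])
res = r([1.0, 0.0])
print(res, evaluations[0])
```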

You can use constraints with minerr, but a constraint is not treated as a hard constraint. The error in the constraint is just minimized along with the residuals. To get a hard constraint, weight it very heavily. For example, if you want A to be less than B, write the constraint as (A-B)*10^15<0.
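A heavily weighted constraint can be pictured as an extra residual that stays zero while the constraint holds and grows steeply once it is violated. In this Python sketch, the hinge max(0, A-B) is my assumption of how such a soft constraint behaves; Mathcad's internal treatment of inequality constraints may differ.

```python
def constraint_residual(a, b, weight=1e15):
    """Soft constraint a < b: zero while it holds, a huge residual once violated."""
    return weight * max(0.0, a - b)


def residuals_with_constraint(fit_residuals, a, b, weight=1e15):
    # the solver minimizes the sum of squares of this combined vector, so a violated
    # constraint dominates the objective, yet it is still not a hard bound
    return list(fit_residuals) + [constraint_residual(a, b, weight)]


print(residuals_with_constraint([0.1, -0.2], a=1.0, b=2.0))   # constraint holds
print(residuals_with_constraint([0.1, -0.2], a=2.0, b=1.0))   # violated by 1
```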

Regarding scaling issues, CTOL is an absolute number, not a fractional change in the residuals. If your residuals are very large, for example on the order of 10^12, and CTOL is the default value of 10^-3, you are asking for a relative error of 1 part in 10^15, which is on the order of numeric roundoff. It will either never converge, or it will take a very long time to do so. Make CTOL something realistic compared to the magnitude of your residuals.
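The relative accuracy implied by an absolute CTOL is easy to check. This Python sketch assumes double precision arithmetic (which Python floats use) and the magnitudes from the example above. The "1 part in 10^6" choice at the end is only an example.

```python
import sys

residual_scale = 1e12             # typical magnitude of the residuals
ctol = 1e-3                       # default CTOL, an absolute tolerance
relative = ctol / residual_scale  # accuracy actually requested: 1 part in 10^15
eps = sys.float_info.epsilon      # spacing of doubles near 1, about 2.2e-16
print(f"relative target {relative:.1e} = {relative / eps:.1f} x machine epsilon")

# a more realistic choice: scale CTOL with the residuals, e.g. 1 part in 10^6
ctol_realistic = 1e-6 * residual_scale
```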

---

I had my reply open for quite a while and so hadn't realized you had already answered. Glad you are back 😉

The problem seems to be that the parameters to find (TT) are real numbers (in the range of 10^14 to 10^19, if I recall correctly), but the residuals are complex. make_Theory(TT) should be fitted to Measure, both are large vectors of complex numbers with real and imaginary parts smaller than 1.

Can it be that MinErr() isn't happy with a vector of complex residuals?

---

OK. I misunderstood (I admit I didn't read the entire thread very carefully!). Minerr works with complex parameters, but I'm not sure about complex residuals. The LM algorithm would have to be written to specifically handle that by splitting out the real and imaginary parts, and I don't know if that's the case. It's possible it might work if the equality in the solve block is rewritten as make_Theory(TT)-Measure=0+0*i. The safest way would certainly be to handle it explicitly in the solve block though. That also gives a couple of options. One is to minimize the magnitude of the complex residual, the other is to create a vector of residuals with the real and imaginary parts stacked. For a large vector of residuals the absolute value approach is probably faster, because the time for non-linear least squares scales as the square of the number of residuals. For a small vector of residuals which approach is faster will probably depend on the relative overheads of stacking vectors versus taking the absolute value of vectors. I think only timing tests would answer that.

I'm also not sure about the consequences of the very large parameter values vs the small residual values. Minerr calculates numeric derivatives, and I wonder if there might be some issues there (a gradient that is so close to zero it is affected by numeric roundoff). I wonder if things would work better if the residuals were multiplied by a large number, say 10^15.
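A forward-difference derivative illustrates the roundoff concern. This thread does not say whether Mathcad uses an absolute or a relative step for its numeric derivatives, so the absolute step of 1e-3 below is purely an assumption chosen to show the mechanism. At TT = 10^16 that step is lost entirely in roundoff, while a step scaled to the parameter still sees the gradient.

```python
def forward_difference(f, x, h):
    """One-sided numeric derivative (f(x + h) - f(x)) / h."""
    return (f(x + h) - f(x)) / h


def residual(tt):
    """Toy residual of order 1 driven by a parameter of order 1e16."""
    return tt / 1e16 - 0.5


tt = 1e16
absolute = forward_difference(residual, tt, 1e-3)       # 1e16 + 1e-3 rounds to 1e16
relative = forward_difference(residual, tt, 1e-7 * tt)  # step scaled to the parameter
print(absolute, relative)   # the true derivative is 1e-16
```

If a solver's step were lost like this, every derivative would be zero and the guess values would come back unchanged. That matches the symptom reported earlier in the thread, but it is a plausible explanation rather than a confirmed one.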

---

Thanks!!

I will try the stacking idea later.

Now I am using the loop containing the absolute value (post underneath).

I have a question about the weighting.

The code concept is:

1) Set up a set of 13 TTs.

2) The 13 TTs look up values in the file to obtain 100 elements (make_Theory(TT)).

3) Then minerr() is used to find the 13 TTs that make make_Theory(TT)-Measure=0.

Is it possible to weight each TT differently (set up constraints) to achieve make_Theory(TT)-Measure=0?

For example:

30% of the difference make_Theory(TT)-Measure comes from TT0, 20% of it comes from TT1, ... and so on to TT3.

Now I have set up the constraints A<TTs<B and TT0>TT1>..>TT13.

---

You could weight each residual easily. Just multiply the vector of residuals by a vector of weights (using the vectorize operator of course). If all the weights add to 1 then the value of each weight is the fractional weighting for the given residual. However, since you are minimizing the sum of the squares of the residuals the fractional weighting is not equal to the fractional contribution of each residual to the final total residual, because big residuals have a much bigger effect on the optimization than small residuals.
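Here is a small Python sketch of element-wise weighting. It also shows the point about fractional contributions: each residual enters the sum of squares as (w*r)^2, so its share of the total is not simply w. The residuals and weights are made-up numbers.

```python
def weighted_residuals(residuals, weights):
    """Element-wise product, like the vectorized w*r in Mathcad."""
    return [w * r for w, r in zip(weights, residuals)]


def sse(v):
    return sum(x * x for x in v)


residuals = [0.5, -0.2, 0.1]
weights = [0.5, 0.3, 0.2]                        # fractional weights, sum to 1
wr = weighted_residuals(residuals, weights)
shares = [x * x / sse(wr) for x in wr]           # contribution of each term to the SSE
print([round(s, 3) for s in shares])             # not equal to the weights
```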

---

> You made me wonder whether minerr() can handle complex numbers.
>
> Now I think the answer is: No.

I am not sure, and I didn't find anything about this in the help docs.

BTW, where did you read about the minerr limit of 500 iterations?

> The code didn't work when using Q(TT):=|make_Theory(TT)-Measure|.

You then had Q(TT)=0 in the solve block, right? As I understand it, that should work, although it is recommended to use a constraint that results in a vector of residuals. What does "didn't work" mean? Did you get an error message?

> When I use Q(TT):=Re(make_Theory(TT))-Re(Measure), the result came out in 5 min and gave

What you showed in the picture is an expression which calls make_Theory() twice, which is very inefficient because make_Theory() will take some time to evaluate. It would be better to do it that way:

But you have to be aware that a difference of 3 - 3i would then result in zero, which is not what you want. So you should in any case add absolute values!

A short alternative would be the following, which also returns (a different) vector of real values:

Notice that the vectorization is over the whole expression, including the absolute value.
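The screenshots with the suggested expressions are not reproduced here, but a short Python sketch can compare the residual forms discussed. The names are hypothetical, and the difference vector is computed once and reused.

```python
def residual_options(theory, measure):
    """Three real-valued residual vectors built from one complex difference vector."""
    d = [t - m for t, m in zip(theory, measure)]          # compute once, reuse
    summed = [x.real + x.imag for x in d]                 # can cancel: 3-3i gives 0
    abs_parts = [abs(x.real) + abs(x.imag) for x in d]    # no cancellation
    magnitude = [abs(x) for x in d]                       # element-wise |d|
    return summed, abs_parts, magnitude


summed, abs_parts, magnitude = residual_options([3 - 3j, 1 + 0j], [0j, 1 + 0j])
print(summed, abs_parts, magnitude)
```

Taking |d| of the whole vector instead of element by element would collapse everything into one scalar. Minimize needs that, but a residual vector does not.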

> Therefore I think minerr() cannot handle complex numbers.

Possible, but I can't verify it. We would need a short, clearly arranged example to play with, like the template sheet with real values I provided.

> Now I'll go back to trying 25 TTs and 200 elements and check how long it takes.

Have you already made calculation-time tests with different numbers of parameters and data points to see how each affects the time, either with your sheet or (probably quicker) with my template?

> Is it possible to add the following restriction to minerr()?

Yes, you can (and according to the help, even should) add constraints in the solve block.

> None of the calculated TTs can be larger than the first TT (TT0) or smaller than the last TT (TT13).

You mean that the vector TT should be sorted ascending by size, right?

You may add T[0<T[1<T[2<..... in the solve block, but you should not expect that Mathcad will perfectly stick to it. It will override it if it finds a better fit that way.

I tried in my sheet and added T[7>0.1 and I got a value which was lower than 0.1 but higher than without that constraint. When I added T[7>0.5 I got a value for T[7 which actually was higher than 0.1. This shows that MinErr will consider these constraints (as good as it thinks is possible).
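For a whole vector, you can append one soft-constraint residual per adjacent pair to the fit residuals. This Python sketch uses a hinge penalty, which is my assumption and not necessarily how Mathcad treats inequality constraints internally. `order_penalties` is a hypothetical helper, and the `descending` flag covers both orderings.

```python
def order_penalties(tt, weight=1e3, descending=True):
    """One soft-constraint residual per adjacent pair; zero when the order holds."""
    out = []
    for first, second in zip(tt, tt[1:]):
        violation = second - first if descending else first - second
        out.append(weight * max(0.0, violation))
    return out


print(order_penalties([19.0, 18.0, 17.0]))                  # ordered: all zero
print(order_penalties([19.0, 17.0, 18.0], weight=1.0))      # second pair violated
print(order_penalties([1.0, 2.0, 3.0], descending=False))   # ascending: all zero
```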

> As far as I know, we cannot steer the TTs determined by minerr().

You cannot force them to be taken only from a given set of numbers, which was your initial question (and I think we are far away from that now), but you can add constraints. But it's an approximation, so Q(TT) will not be exactly zero, nor will the solution necessarily satisfy all those constraints.

---

Hi:

1) I have gottn the reply from Richard. He is right. Minerr() can handle the complex number but the guess value has to be complex number, too. My initial TTs are all real number. Probably thats where the problem comes from.

Since I use TTs to form the complex number in the database, I guess use Re()/Im() is what I can do.

For the number 500, maybe I have a wrong memory. My point is that minerr() should certain loop calculation and then come out whatever the result.

2) I used |maks_Theory(TT)-Measure|=0 in the solve block but it didn't work. I didn't check the detail error message.

3) Not yet. As I know 13 TTs 100 elements needs 6 mins and 13 TTs 200 elements needs 12 mins.

But 25 TTs 100 elements at least needs > 40mins (I stopped the calculation).

So I think TTs number is the key. Now I followed your suggestion for Q(TT).

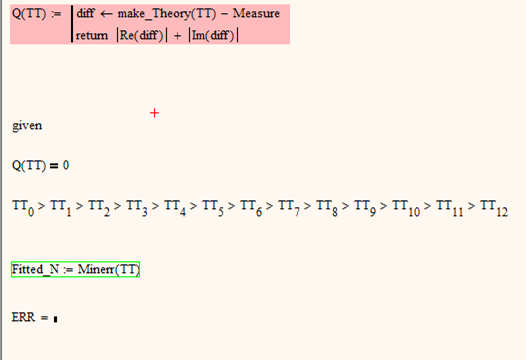

Without absolute value, it takes 6 mins (13 TTs 100 elements).

When adding absolute value (attached file), it takes much more than 6 mins (close to 20-30mins).

I don't know the reason.

4) Yes. I also observed that constrains are just for reference, like your observation.

Good to know the constrain (T[0<T[1<T[2<...).

But...are you talking about T[0 or T[0]?

I guess I can setup constrains for each TT (hoepfully).

5) For this part you are right. Now I use log(TT) for the calculation. If I use TT (10^14 to 10^19), the result will be wrong. Inital TTs with a very big mignitude difference might influnce to final result.

The problem seems to be that the parameters to find (TT) are real numbers (in the range of 10^14 to 10^19, if I recall correctly), but the residuals are complex. make_Theory(TT) should be fitted to Measure, both are large vectors of complex numbers with real and imaginary parts smaller than 1.

---

> So I think the number of TTs is the key. Now I have followed your suggestion for Q(TT).

Or Richard's suggestion of stacking the real and imaginary parts of the residuals on top of each other (but in a way that you do not have to call make_Theory() twice!). That may result in a better and quicker fit (don't know).

The numeric algorithm seemingly has to go through more combinations as the size of TT increases, and so calls make_Theory() more often. I guess this routine is the key. It would be fine if you could find a way to get that denominator (the sum of the four elements of the matrix) without multiplying 26 matrices.
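One possible saving, offered as a suggestion rather than as a statement about the model's actual structure: the sum of all elements of a product P = M1*M2*...*Mk equals u^T P u with u = (1, 1)^T. It can therefore be accumulated with vector-matrix products (4 multiplications per 2x2 matrix instead of 8). It still touches every matrix, so at best it halves the arithmetic. The Python sketch below compares both routes on random test matrices; the same code works unchanged with complex entries.

```python
import random


def matmul2(a, b):
    """Product of two 2x2 matrices (8 multiplications)."""
    return [[a[0][0] * b[0][0] + a[0][1] * b[1][0], a[0][0] * b[0][1] + a[0][1] * b[1][1]],
            [a[1][0] * b[0][0] + a[1][1] * b[1][0], a[1][0] * b[0][1] + a[1][1] * b[1][1]]]


def element_sum_full(mats):
    """Multiply all matrices, then add the four elements of the product."""
    p = [[1.0, 0.0], [0.0, 1.0]]
    for m in mats:
        p = matmul2(p, m)
    return p[0][0] + p[0][1] + p[1][0] + p[1][1]


def element_sum_vector(mats):
    """Same value as u^T (M1 M2 ... Mk) u with u = [1, 1], using row-vector products."""
    v = [1.0, 1.0]                               # row vector u^T
    for m in mats:
        v = [v[0] * m[0][0] + v[1] * m[1][0],    # 4 multiplications per matrix
             v[0] * m[0][1] + v[1] * m[1][1]]
    return v[0] + v[1]                           # times the column vector u


rng = random.Random(0)
mats = [[[rng.uniform(0.5, 1.5) for _ in range(2)] for _ in range(2)] for _ in range(26)]
print(element_sum_full(mats), element_sum_vector(mats))
```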

> When adding the absolute value (attached file), it takes much more than 6 min (close to

You did it wrong. The way you wrote it, Q(TT) returns a simple scalar, not a vector of scalar residuals. You would have to vectorize the absolute values, similar to my suggestion above, to get a vector.

> 4) Yes. I also observed that the constraints are just for reference, like you did.
>
> But... are you talking about T[0 or T[0]?
>
> I guess I can set up constraints for each TT (hopefully).

Just reference? Look at Richard's post about them not being hard constraints and how to weight them if necessary.

I was talking about TT[0, etc. That's the way you would access a vector element in Mathcad; TT[0] is the way to do it in many programming languages.

What do you mean by "for each TT"? I thought what you want is TT[0<TT[1<TT[2<... It would be even more work if you'd like to weight them as Richard suggested.

> 5) For this part you are right. Now I use log(TT) for the calculation. If I use TT (10^14 to 10^19), the result will be wrong. Initial TTs spanning a very large range of magnitudes might influence the final result.

Yes, indeed it was my fear that the huge range and large magnitude could make MinErr fail, but I am not well versed enough in the internals of the algorithms to argue that in a reasonable way.

After all, it seems to me you are on a good way in the meantime. Test runs finish in acceptable time, and the parameters now really change and converge. What's left seems to be finding the best-fitting equation (I'll vote for Richard's idea) and especially speeding up the calculation for a larger set of parameters. That could probably only be done with a better understanding of the underlying model, as I think the speedup my V3 could have over V2 is just minimal, but it may nevertheless be worth a try.