How to not lose data when adding stream entries rapidly


I've noticed that I lose data when stream entries are added very rapidly. This happens because entries are added simultaneously in different threads. A stream cannot hold two entries with the same timestamp, so when several entries are added at the same instant, only one of them is stored. To work around this, I've added random milliseconds to the timestamp. However, when many entries arrive at once, collisions still seem to occur even with the random milliseconds, and I still lose data. Is there a better way to add stream entries without losing data?

var timestamp = new Date();

timestamp.setMilliseconds(Math.floor(Math.random() * 1000));

Things[StreamName].AddStreamEntry({

sourceType: undefined /* STRING */,

values: values /* INFOTABLE */,

location: undefined /* LOCATION */,

source: me.name /* STRING */,

timestamp: timestamp /* DATETIME */,

tags: tags /* TAGS */

});

- Labels: Coding, Troubleshooting

- Tags: streams

@Willie, does the stream data that you are entering have unique timestamps of its own? If so, you could use those as your stream timestamps. A couple of other things I have done when I've run into similar problems:

- With streams, if it's not a service that I'm running often, I'll just use the built-in pause() function and pause for 2 or 3 milliseconds between row adds.

- You can loop through the data first and assign sequential timestamps/milliseconds/etc. before you run the insert function.

- If you really want to use random values (which would likely throw off your queries), you could keep a JSON object with a record of all the timestamps you've already used and do a hasOwnProperty() test on each new timestamp to ensure it's unique. That's kind of an ugly way to do it, though, and I'm not sure it's thread-safe.
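The second suggestion above (assigning sequential milliseconds before inserting) could be sketched roughly like this. It is plain JavaScript rather than ThingWorx API code, and `assignSequentialTimestamps` is a hypothetical helper name. It only guarantees uniqueness within one batch handled by a single service call, so on its own it would not help with entries arriving on separate threads.

```javascript
// Hypothetical helper: give each row in a batch its own timestamp by
// adding 1 ms per row to a shared base time.
function assignSequentialTimestamps(rows, baseTime) {
    var base = baseTime.getTime();
    var result = [];
    for (var i = 0; i < rows.length; i++) {
        result.push({
            values: rows[i],
            timestamp: new Date(base + i)   // base, base + 1 ms, base + 2 ms, ...
        });
    }
    return result;
}

// Illustrative batch of three rows sharing one base time.
var batch = assignSequentialTimestamps(["a", "b", "c"], new Date(0));
```

Each element of `batch` could then be passed to AddStreamEntry with its own `timestamp` value.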

> does your actual stream data that you are entering have unique timestamps?

No, some of them have the same timestamp.

I have a separate Timestamp column defined in the Data Shape for the asset time.

Precision is to HH:mm:ss, so milliseconds difference doesn't really matter.

I am using the stream timestamp for record keeping purposes for when it was added to the stream.

> if it's not a service that I'm running often, I'll just use the built-in pause() function and pause for 2 or 3 milliseconds between row adds

It's a service that runs pretty often. It runs based on a subscription to a data change in a string property. Since Axeda Agents (edge) do not have infotable properties, I am passing a line of text as a string property and parsing the string using a service I wrote to create stream entries.

> you can loop through the data first and assign sequential timestamps/milliseconds/etc. before you run the insert function

I can't loop it since I can't predict when and how much data is coming in from the edge.

> if you really want to use random (which would likely throw off your queries), you could keep a JSON object with a record of all the timestamps you've used already and do a hasOwnProperty() test on each new timestamp to ensure it's unique (that's kind of an ugly way to do it though and I'm not sure that's thread-safe)

This method sounds interesting but I'm not clear on this.
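For what it's worth, the record-of-used-timestamps idea might look something like the sketch below. It is plain JavaScript, not ThingWorx-specific: `usedTimestamps` and `reserveTimestamp` are hypothetical names, and instead of drawing a new random value on a clash it simply steps forward 1 ms until it finds a free slot. As already noted in the suggestion, nothing here is thread-safe; concurrent service executions would need a shared, synchronized record for this to hold up.

```javascript
// Record of timestamps (in epoch milliseconds) that have already been handed out.
var usedTimestamps = {};

// Hypothetical helper: return a Date at or after `candidate` that has not
// been used yet, moving forward 1 ms at a time until a free slot is found.
function reserveTimestamp(candidate) {
    var ms = candidate.getTime();
    while (usedTimestamps.hasOwnProperty(ms)) {
        ms += 1;
    }
    usedTimestamps[ms] = true;
    return new Date(ms);
}

// Three requests for the same instant get three distinct timestamps.
var first = reserveTimestamp(new Date(1000));
var second = reserveTimestamp(new Date(1000));
var third = reserveTimestamp(new Date(1000));
```

The record grows with every entry, so a real version would also need some way to prune old timestamps.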

Maybe I can use a do-while loop when adding the stream entry: if adding an entry with a duplicate timestamp throws an error, the loop would keep retrying until the entry is added. Do you know if the stream entry service throws an error when adding an entry with the same timestamp? I have a feeling it just ignores the entry and doesn't throw an error.

I'm not sure if a stream entry with a timestamp conflict throws an error. Could you just modify your timestamp on the Axeda edge to have milliseconds?

I just tried adding a stream entry with the same timestamp. It did not throw an error.

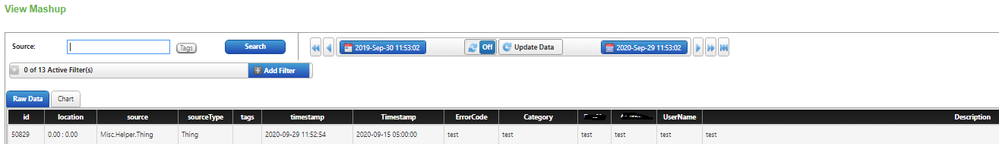

The Axeda edge doesn't have precision to milliseconds, only to seconds. However, I am storing the asset Timestamp as a Data Shape property and not as the stream metadata timestamp, so it wouldn't matter if the Axeda edge had millisecond precision. Please see image below:

Got it.

I was suggesting that you add a faux-millisecond value on the Axeda edge when you create your string property, and then use that same timestamp for your stream metadata timestamp.

Another possible solution would be to have a 'staging' DataTable or InfoTable property (that won't reject duplicates) and then have a service that runs on a schedule or other trigger to process those rows into the stream.
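The staging idea could be sketched along these lines. This is a plain JavaScript simulation, not ThingWorx code: the `staging` array stands in for the DataTable or InfoTable property, `stageRow` and `flushStaging` are hypothetical names, and `addEntry` is a placeholder callback for whatever actually writes to the stream.

```javascript
// Hypothetical in-memory stand-in for a staging table that accepts duplicates.
var staging = [];

// Store a row as-is; duplicate timestamps are fine at this stage.
function stageRow(values, timestamp) {
    staging.push({ values: values, timestamp: timestamp.getTime() });
}

// Drain the staged rows and call addEntry(values, date) once per row,
// nudging any repeated timestamp forward so every call gets a unique value.
function flushStaging(addEntry) {
    var rows = staging.splice(0, staging.length);
    rows.sort(function (a, b) { return a.timestamp - b.timestamp; });
    var last = -Infinity;
    for (var i = 0; i < rows.length; i++) {
        var ms = Math.max(rows[i].timestamp, last + 1);
        addEntry(rows[i].values, new Date(ms));
        last = ms;
    }
    return rows.length;
}
```

Because a single flush processes the rows one after another, uniqueness is easy to enforce inside it. A real version would presumably also need to remember the last timestamp written so that consecutive flushes do not collide with each other.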

> I was suggesting that you add a faux-millisecond value on the Axeda edge when you create your string property, and then use that same timestamp for your stream metadata timestamp.

That's actually what I was doing initially for another reason, but I was able to resolve that issue, so I reverted back to this method, which I felt was the proper way. I had the Axeda edge timestamp in the stream metadata and the server timestamp in the Timestamp property. It was working, but it just didn't feel like it was the proper way. It worked because I had at most about 4 identical edge timestamps at the same time.

By my estimate, the probability of two entries getting the same timestamp with random milliseconds, and therefore of data loss, was 4/1000 or 0.4%.
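As a rough check on that estimate, the chance of a clash can be computed birthday-problem style. The sketch below assumes each entry independently picks one of 1000 millisecond values uniformly at random; `collisionProbability` is a hypothetical helper name. Under that assumption, 4 simultaneous entries give about 0.6% rather than 0.4%, and the figure climbs quickly as more entries share the same second.

```javascript
// Probability that at least two of `k` entries share a millisecond value
// when each picks uniformly at random from `slots` possible values.
function collisionProbability(k, slots) {
    var pAllDistinct = 1;
    for (var i = 0; i < k; i++) {
        pAllDistinct *= (slots - i) / slots;
    }
    return 1 - pAllDistinct;
}

var p4 = collisionProbability(4, 1000);    // about 0.006, i.e. 0.6%
var p40 = collisionProbability(40, 1000);  // a little over one half
```

This would explain why random milliseconds looked fine with a handful of simultaneous entries but start losing data under heavier bursts.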

> Another possible solution would be to have a 'staging' DataTable or InfoTable property (that won't reject duplicates) and then have a service that runs on a schedule or other trigger to process those rows into the stream.

This method sounds doable, but it doesn't seem optimal since I want the data to be in the stream immediately. I could set up another subscription that triggers on data change, but that gets too complex for a simple stream entry. I would also have to create a value stream for the stream.

Also, my understanding is that DataTables don't perform well in terms of speed.

Reference:

https://www.ptc.com/en/support/article/CS204091?&language=en&posno=1&q=CS204091&source=search

After re-reading CS204091, it seems that I should be using the metadata timestamp as the asset timestamp, as queries should be based on the stream timestamp for time-series data. Is my understanding correct?

Is this the proper way?

Excerpt below:

- There are some exceptions to storing more than 100,000 records

- If using a Stream to store more than 100,000 records and the query or get that is being exercised on that Stream to return data always has the timestamp fields filled out there should not be a huge performance hit because, only a specific number of records will be returned for that subset of time that is specified in the initial query based on the timestamp

- The key here is to utilize the default timestamp field when adding records to the Stream and not attempting to query on a custom timestamp field within the query parameter. This is because the initial pull of data from the Stream will return the entire Stream instead of grabbing a subset of records based on the default timestamp field and that is where the performance hit will be seen

- Once that initial subset is returned, anything in the query parameter will be applied to that subset of rows, thus thoroughly reducing the amount of records ThingWorx needs to apply a query to

- Streams index on the timestamp field, so this should be taken advantage of
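In service code, the excerpt's advice might translate to something like the sketch below. `queryTimeWindow` is a hypothetical wrapper, and the `QueryStreamEntriesWithData` parameter names are written as I recall them from the ThingWorx snippet format, so they should be checked against your version. The point is only that the time window goes in `startDate`/`endDate` (the stream's own timestamp) while any other condition goes in `query`.

```javascript
// Hypothetical wrapper: always bound the query by the stream's own timestamp
// (startDate/endDate) and apply any extra filter to that subset only.
function queryTimeWindow(stream, startDate, endDate, filter) {
    return stream.QueryStreamEntriesWithData({
        oldestFirst: true,
        maxItems: 500,
        startDate: startDate,   // bounds on the default (indexed) timestamp
        endDate: endDate,
        query: filter           // applied after the time window is selected
    });
}
```

Inside a service this would be called as `queryTimeWindow(Things[StreamName], start, end, filter)`, where `filter` is a normal ThingWorx query object on the Data Shape fields.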

@Willie,

Correct. Your stream metadata timestamp should be the asset timestamp. If you look at the schema of the Stream table in your persistence provider, you'll see that there are only a few columns for the metadata (including timestamp) and then a JSON object that actually holds all of the datashape data. The streams only query efficiently if the metadata timestamp is the same as the timestamp of your data point.

I have observed this behavior before, and I handled it by using the source field. When inserting rows into the stream, I set the source to the actual source concatenated with a GUID. Because source is a primary key field, an entry whose source differs is written to the stream without any issues, so duplicate timestamps are allowed as long as the source changes.

Tags, which I mentioned earlier, is an indexed column but not a primary key.
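A minimal sketch of that source-plus-GUID approach is below. `uniqueSource` and `baseSourceOf` are hypothetical helper names, the `_` separator is an arbitrary choice, and the GUID generator is passed in as a function; in a ThingWorx service that would presumably be the built-in `generateGUID()` snippet. The second helper assumes the GUID itself contains no underscore.

```javascript
// Hypothetical helper: build a source value that is unique per entry by
// appending a GUID to the real source name.
function uniqueSource(baseSource, makeGuid) {
    return baseSource + "_" + makeGuid();
}

// Hypothetical helper: recover the real source name for display or filtering.
// Assumes the GUID part contains no underscore.
function baseSourceOf(source) {
    var cut = source.lastIndexOf("_");
    return cut === -1 ? source : source.substring(0, cut);
}
```

The result of `uniqueSource(me.name, generateGUID)` would then be passed as the `source` argument of AddStreamEntry. One trade-off is that filtering a query by exact source name no longer works directly, since every entry carries a different source value.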