How to sync thingworx internal database with external database?

Hi All,

Is there a way to sync my ThingWorx data tables with a MySQL database?

My actual use case is:

I have thousands of data tables, each with at least 100,000 records. Until now I have been using the ThingWorx internal database (Neo4j), and I wrote all my data-access services using the built-in data table snippets provided by the ThingWorx platform.

Because of the huge amount of data, I now need to use a MySQL database. The biggest problem is that I would have to change all the services I've written with the built-in snippets and write my own queries instead, which would be a lot of extra work.

Is there a way to use the same snippets (the data table snippets) against a MySQL database?

Looking forward to your help ASAP.

Thanks and Regards,

Meenakshi

What about moving to PostgreSQL and Cassandra? Both are available as persistence providers (PostgreSQL for model and stream data, Cassandra for stream data).

Hey Carles Coll

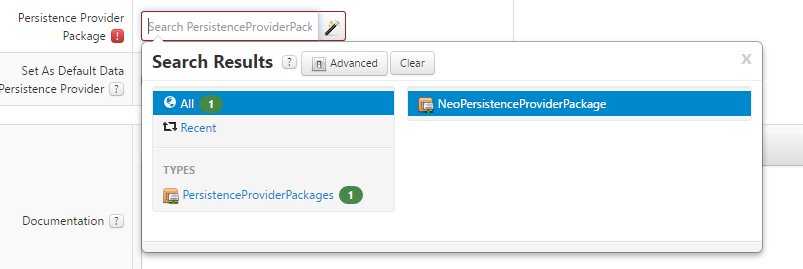

I don't know how I could access PostgreSQL and Cassandra, as I have only one persistence provider, NeoPersistenceProvider.

Can I use an external PostgreSQL database as the ThingWorx persistence provider?

Thanks,

Meenakshi

Well, moving to PostgreSQL won't be that easy. You would have to export everything and import it again into a ThingWorx instance with PostgreSQL support.

Moving to Cassandra only affects streams. I don't have experience with it, and I don't know whether additional licences are needed, so you'd better contact support about that.

Carles.

Where can I find the PostgreSQL persistence provider package?

It's not a package; it's a whole ThingWorx installation. I don't think Neo4j and PostgreSQL can live in the same instance.

Unfortunately, if you have that much data being stored, migrating to a ThingWorx version using PostgreSQL as the default persistence provider won't solve your problems. There are known and proven performance issues once datatables exceed a certain size. The only way to overcome these performance issues is to migrate your data to an external relational database. And yes, you will need to write queries to access all your data. All my designs include a relational database whenever I know I'll be dealing with large volumes of data. The only data I store in the TWX database are my TWX modeled objects and basic lookup-table data to drive list boxes.

The best way to approach this is to stand up whatever DB instance you'd like (PostgreSQL, MySQL, Oracle, SQL Server) and use the JDBC DB extension to create a new DB Thing, which you can configure to talk to your DB instance. Build your relational DB schema, create stored procedures for complex queries and for insert, update, and delete operations, and then create SQL Command Services in your DB Thing to call your stored procedures.
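To make the pattern above a bit more concrete (a relational schema plus a small set of named, parameterized operations that services call instead of ad hoc SQL), here is a minimal Python sketch. It is not ThingWorx code: the standard-library `sqlite3` module is only a stand-in for the external database, and plain functions stand in for stored procedures and SQL Command Services, since SQLite has no stored procedures. The table `device_reading`, its columns, and the function names are all hypothetical.

```python
import sqlite3

# Hypothetical schema, for illustration only.
SCHEMA = """
CREATE TABLE IF NOT EXISTS device_reading (
    id        INTEGER PRIMARY KEY,
    device_id TEXT    NOT NULL,
    ts        INTEGER NOT NULL,   -- epoch milliseconds
    value     REAL    NOT NULL
);
CREATE INDEX IF NOT EXISTS idx_reading_device_ts
    ON device_reading (device_id, ts);
"""


def open_db(path=":memory:"):
    """Open the stand-in database and make sure the schema exists."""
    conn = sqlite3.connect(path)
    conn.executescript(SCHEMA)
    return conn


def insert_reading(conn, device_id, ts, value):
    """Plays the role of an 'insert' stored procedure."""
    with conn:  # commits on success, rolls back on error
        conn.execute(
            "INSERT INTO device_reading (device_id, ts, value) VALUES (?, ?, ?)",
            (device_id, ts, value),
        )


def readings_between(conn, device_id, start_ts, end_ts):
    """Plays the role of a 'query' stored procedure.

    Parameters are bound by the driver, never concatenated into the SQL.
    The range is half-open: start_ts <= ts < end_ts.
    """
    cur = conn.execute(
        "SELECT ts, value FROM device_reading "
        "WHERE device_id = ? AND ts >= ? AND ts < ? ORDER BY ts",
        (device_id, start_ts, end_ts),
    )
    return cur.fetchall()
```

The point of the design is that callers only ever see `insert_reading` and `readings_between`, so the SQL lives in one place, much as it would with stored procedures behind SQL Command Services.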

To migrate your data, you'll need to write services that loop through your datatables and call your insert services.
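The migration loop can be sketched roughly as follows. This is again plain Python with `sqlite3` as a stand-in database, not a ThingWorx service: `entries` is assumed to be any iterable of dicts representing data table rows (how you page through a real data table is not shown), and the table and column names are hypothetical. The batch size and the one-transaction-per-batch choice are my assumptions, not something the platform requires.

```python
import sqlite3
from itertools import islice


def batched(rows, size):
    """Yield lists of at most `size` rows from any iterable."""
    it = iter(rows)
    while True:
        chunk = list(islice(it, size))
        if not chunk:
            return
        yield chunk


def migrate(entries, conn, batch_size=1000):
    """Copy entries into the relational table, one transaction per batch.

    Returns the number of rows written.
    """
    conn.execute(
        "CREATE TABLE IF NOT EXISTS device_reading ("
        "device_id TEXT NOT NULL, ts INTEGER NOT NULL, value REAL NOT NULL, "
        "PRIMARY KEY (device_id, ts))"
    )
    written = 0
    for chunk in batched(entries, batch_size):
        with conn:  # commit per batch so a failure loses at most one batch
            conn.executemany(
                # INSERT OR REPLACE is SQLite syntax; it makes a re-run idempotent.
                "INSERT OR REPLACE INTO device_reading (device_id, ts, value) "
                "VALUES (:device_id, :ts, :value)",
                chunk,
            )
        written += len(chunk)
    return written
```

Writing in batches keeps each transaction small, and keying the target table on `(device_id, ts)` means an interrupted migration can simply be run again without creating duplicates.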

It's a lot of work, but once it's done you'll wonder why you didn't just use a relational DB to begin with. The flexibility of how you can query for and/or update data is vastly superior to basic datatable functionality.

BTW, streams are not immune to these performance issues either. We've had issues where our streams got so large, the purge operations couldn't keep up with the volume of data and caused a huge performance hit. We ended up also using our external relational DB to store stream data and then set up revolving purge jobs that run every week to clean out data older than X.
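For what it's worth, a revolving purge like the one described above might look something like this. It is a sketch in Python against `sqlite3` as a stand-in, not our actual job: the table `stream_entry`, its epoch-millisecond `ts` column, and the chunk size are hypothetical, and the weekly scheduling itself is not shown.

```python
import sqlite3
import time

MS_PER_DAY = 24 * 60 * 60 * 1000


def purge_older_than(conn, days, now_ms=None, chunk=10_000):
    """Delete rows older than `days`, in chunks so no single delete runs long.

    Assumes a table stream_entry with an integer column ts (epoch ms).
    Returns the total number of rows removed.
    """
    if now_ms is None:
        now_ms = int(time.time() * 1000)
    cutoff = now_ms - days * MS_PER_DAY
    total = 0
    while True:
        with conn:  # one short transaction per chunk
            cur = conn.execute(
                "DELETE FROM stream_entry WHERE rowid IN ("
                "SELECT rowid FROM stream_entry WHERE ts < ? LIMIT ?)",
                (cutoff, chunk),
            )
        if cur.rowcount == 0:
            return total
        total += cur.rowcount
```

Deleting in bounded chunks is one way to keep the purge from falling behind or locking the table for long stretches; an index on `ts` would normally go with it.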