ThingWorx Analytics Creating New Predictive Model - Constant RUNNING status

Hello All,

I am having a problem with the process of creating a new predictive model.

Background:

- Local ThingWorx H2 8.0.4 (port 8080)

- Local Analytics Server 8.0.0 (port 8282) (DOCKER)

- 2 x gridworker 8GB RAM, tomcat 8GB RAM

- UploadThing 1.3

- BeanPro Sample Data

Issue:

When I try to create a new predictive model for the sample data (52k rows, no filters), the job stays stuck in the RUNNING state.

Another Issue:

I cannot look into the gridworker logs, because there is an SLF4J conflict (multiple bindings).

Part of the gridworker log:

/opt/gridworker/config/startup.sh: line 13: 11 Killed $JAVA -Xmx$MEM -Dsun.jnu.encoding=UTF-8 -Dfile.encoding=UTF-8 -Duri-builder=uri-builder-standard.xml -Dneuron.workerLogHome=$LOG -Dneuron.logHost=$LOG -Dzk_connect=$ZK_CONNECT -Dtransfer_uri=file://${APP_TRANSFER_URI}/ -Daws.accessKeyId=$ACCESS_KEY -Daws.secretKey=$SECRET_KEY -Dcontainer=worker-container.xml -Denvironment=$ENVIRONMENT -Dspark-context=spark/spark-local-context.xml -cp `ls ${APP_BIN}/*.jar | xargs | sed -e "s/ /:/g"`:/opt/spark/lib/spark-assembly-1.4.0-hadoop2.4.0.jar com.coldlight.ccc.job.dempsy.DempsyClusterJobExecutor

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/opt/gridworker/bin/slf4j-log4j12-1.7.12.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/opt/spark/lib/spark-assembly-1.4.0-hadoop2.4.0.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

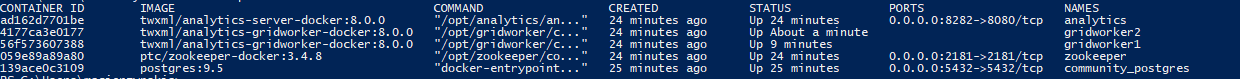

Docker Images: (Gridworkers are restarting every couple of minutes)

Any suggestions on where I should focus to find the issue?

Regards,

Adam

Adam, how much memory does your machine have in total? Offhand, without looking into logs, insufficient memory would be the first thing I point my finger at. OOTB Analytics Docker containers are rather memory-hogging.

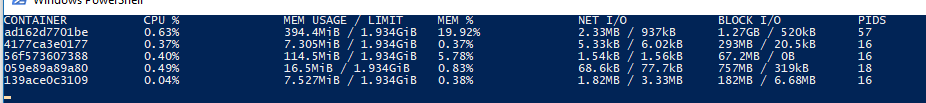

What does 'docker stats' output?

Have you tried to create a model with smaller datasets?
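For anyone following along, here is a minimal sketch of how a one-off memory snapshot could be taken and filtered. `--no-stream` and `--format` are standard `docker stats` options; the helper name `flag_high_mem` and the 90 percent threshold are only illustrative assumptions, not part of ThingWorx Analytics.

```shell
# Take a single snapshot of per-container memory use (needs a running
# Docker daemon) and pipe it through the helper below:
#   docker stats --no-stream --format '{{.Name}} {{.MemPerc}}' | flag_high_mem 90

# flag_high_mem THRESHOLD (hypothetical helper): read "name percent%" lines
# on stdin and print the names of containers whose memory usage is at or
# above THRESHOLD percent of their limit.
flag_high_mem() {
  awk -v limit="$1" '{
    pct = $2
    sub(/%/, "", pct)            # strip the trailing percent sign
    if (pct + 0 >= limit + 0) print $1
  }'
}
```

A container that sits close to 100% shortly before it restarts would be a strong hint that memory is the limiting factor.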

Hi Dmitry,

Machine: 32GB

Docker stats:

I reduced the data set to 8k rows, but nothing changed.

EDIT:

I found two new log entries, which appear every 15 seconds:

GetModelJobConfiguration: Error calling DescribePredictionsModelResults(): JavaException: org.apache.http.HttpException: {"errorId":"cbe5bd57-7ae9-4453-846a-ef4f72073622","errorMessage":"The file semaphore file:///opt/analytics/data/transfer/job_1515489080037_3_0000000005/TRAINING_DESCRIPTION_MODEL.json.touch doesn't exist."}

GetModelStatistics: Error retrieving Model Results: JavaException: org.apache.http.HttpException: {"errorId":"e469503d-a815-4092-b037-f6ac4a88f2cb","errorMessage":"The file semaphore file:///opt/analytics/data/transfer/job_1515489080037_3_0000000005/TRAINING_DESCRIPTION_MODEL.json.touch doesn't exist."}

Best,

Adam

Adam,

I haven't experienced this issue myself, so I can only make somewhat educated guesses.

Also, I'm not sure how much experience you have with TW Analytics and/or Docker, so my suggestions may be rather basic, just to make sure nothing is missed. You may have already checked all of this, so sorry if that's the case. Anyway, in my experience the cause of an issue can sometimes be quite illogical, and even after finding it I often don't understand why that particular thing led to an error.

So, I'd do / check the following things:

1) You're running Docker on Windows and, if I'm not mistaken, your screenshot shows that only 2 GB is allocated to Docker.

2) Seeing that the uptime of the "second" gridworker is 9 minutes, I wonder whether it also crashes occasionally, taking turns with the "first" one, or whether you just (re-)started it 9 minutes ago?

3) Have you tried disabling the second gridworker to see if anything changes?

4) Have you tried removing the existing gridworkers and adding a new one using "addgridworker.sh 8G"?

5) Not sure if it matters, but have you tried to run the containers in the recommended order - Zookeeper, PostgreSQL Server, GridWorker? According to your screenshot, postgres was started before zookeeper.

Hi Adam

As Dmitry Tsarev mentioned, memory can be an issue.

It looks like you have the default memory setting of 2 GB for the Docker daemon, which will definitely not be enough.

I can't tell whether that is the reason for the issue you are having right now, but it will come into play at some point, so you should increase the allocated memory.

From the settings in your original post, you have at least 2 gridworkers with 8 GB of memory each, so your Docker daemon needs to be able to allocate that much, which it cannot do with only 2 GB.

See CS275881 to change the memory settings.
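As a rough sanity check, the arithmetic can be sketched as follows. The figures (2 gridworkers at 8 GB, Tomcat at 8 GB, 2 GB for Docker) come from this thread; the function names `required_gb` and `fits_in` are hypothetical, and the sum ignores whatever ZooKeeper, PostgreSQL and the OS need on top.

```shell
# required_gb WORKERS WORKER_GB TOMCAT_GB (hypothetical helper):
# total memory, in GB, that the gridworkers and Tomcat are configured to use.
required_gb() {
  echo $(( $1 * $2 + $3 ))
}

# fits_in DOCKER_GB NEEDED_GB (hypothetical helper):
# succeeds only if the Docker allocation covers the configured memory.
fits_in() {
  [ "$1" -ge "$2" ]
}

needed=$(required_gb 2 8 8)   # 2 gridworkers x 8 GB + 8 GB for Tomcat
if fits_in 2 "$needed"; then
  echo "A 2 GB Docker allocation covers the ${needed} GB configured"
else
  echo "A 2 GB Docker allocation does not cover the ${needed} GB configured"
fi
```

With the values from the original post the containers are configured for 24 GB in total, so the allocation would need to be raised well above the 2 GB default, while still leaving room for the host on a 32 GB machine.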

Regarding the gridworker logs, see CS268761. `docker cp gridworker1:/opt/gridworker/logs .\` is a command you can use to fetch the log files.

Finally, depending on which version of the BeanPro sample data you have, it is likely not to work with ThingWorx Analytics 8.0 because of spaces. You can download an updated version of the dataset at CS266101.
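Once the logs are on the host, a quick scan for the "Killed" message seen in the original post can narrow things down. This is only a sketch: the container name and log path are the ones quoted above, the target directory `gridworker1-logs` and the helper name `find_killed` are assumptions, and the message may only show up in the container's console output rather than in the log directory.

```shell
# Fetch the logs first (needs a running Docker daemon):
#   docker cp gridworker1:/opt/gridworker/logs ./gridworker1-logs
#   docker logs gridworker1 > ./gridworker1-logs/console.log 2>&1

# find_killed DIR (hypothetical helper): list the files under DIR that
# contain a "Killed" line, which the shell prints when a process it
# started is terminated with SIGKILL (often by the kernel's OOM killer).
find_killed() {
  grep -rl "Killed" "$1"
}
```

Calling `find_killed ./gridworker1-logs` prints the matching file names, or nothing if no process was killed.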

Hope this helps.

Kind regards

Christophe

Hi Christophe,

I decided to switch to the Linux standalone version of Analytics, and it is working correctly now.

Maybe in the future I will come back to the Docker version and install it as you described above.

Thanks,

Adam