How to interpret Predictive Scoring & Important Field Weights

Hello community, I hope you are all doing well.

I have a question about ThingWorx Analytics, specifically about how to use the Predictive Scoring option available in Analytics Builder and how to interpret its results. I finished the learning path "Vehicle Predictive Pre-Failure Detection with ThingWorx Platform", which helped me understand several ThingWorx Analytics concepts and let me generate predictions in "real time".

I would like to complement the predictions with an uncertainty estimate or other practical information. Unfortunately, the guide does not cover topics such as Predictive Scoring or confidence models. I ran Predictive Scoring tests with the data and model from the guide and obtained results, but I do not know how to give a practical meaning to the Important Field Weights. Also, according to the ThingWorx Analytics 9 documentation, confidence models (which provide a probability of uncertainty about the prediction) are only available for continuous or ordinal data.

So I would like to know if there is extra information with which I can complement the predictions for the example "Vehicle Predictive Pre-Failure Detection with ThingWorx Platform", and how I could interpret the Important Field Weights.

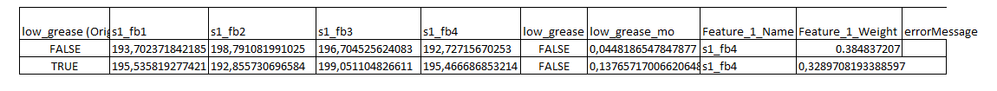

I have attached an image with 2 predictive scoring results and their Important Field Weights (Feature Weight).

Thank you for reading.

Thank you for posting to the PTC Community.

Your questions are fairly advanced, but I will do my best to assist you.

Have you had the opportunity to review our HelpCenter documentation: Working With Predictive Models?

We also have an older Community Post, which can be found here: PTC Community - Predictive Analytics

Regards,

Neel

Hi Neel, thanks for your time.

My goal is to explore the scope and limitations of ThingWorx Analytics and the technical requirements for turning a business problem into an IoT + Analytics project, and to be able to explain these points to potential customers. Ideally it would be great to have a mockup on Prescriptive Models to show the potential of ThingWorx Analytics, but Predictive Models are enough for now. Any information that complements the prediction, such as the likelihood of the prediction, is appreciated. For this purpose I have been studying the field weights of the Predictive Scores.

About the field weights the following is explained:

"Important field weights - For each important field, a field weight represents the relative impact of that field on the target variable. If the field weights of all fields in a training data set could be summed for a record, the sum would equal 1. In the sample results shown below, the weights of the important fields in each row add up to something less than one."

The interpretation of the weights seems similar to Signals, which give the relevance of each signal with respect to the target, but in this case for a single record only. Unlike Signals, there is no indication of the measurement method (e.g. Mutual Information for Signals).

It would be great if you could confirm or refute this hypothesis.

Regarding complementary information, I have found material that helps to validate, compare and refine the models, but not much about individual predictions.

I have read the documentation and found several interesting metrics such as ROC, RMSE, Pearson Correlation, Confusion Matrix, etc. These are useful for validating, comparing and refining models, but they do not describe individual predictions.

As I mentioned before, it would be very useful to deliver each prediction together with, for example, its probability of uncertainty.

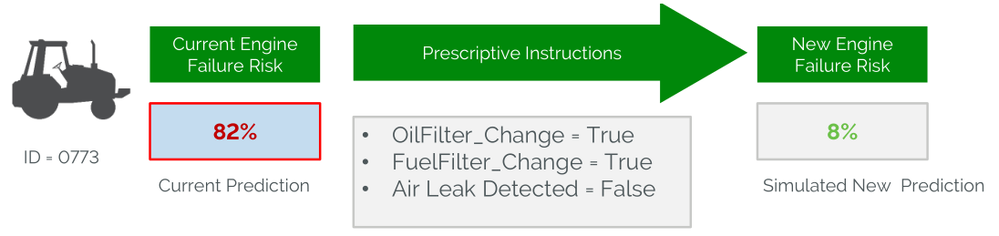

I take this opportunity to ask you about Prescriptive Models with an example that I found very interesting. At the end of the post you will find an image in which the current probability of engine failure is indicated. Then actions are recommended on relevant variables of the process, which impact on failure probability reducing it significantly. That said, my questions are the following:

1) How could I set up a model of this nature, i.e. target variable indicating the probability of failure for 3 different parts?

2) What would be the nature of the target variable (I assume it is either the probability of failure or a failure flag)? Could this probability come from a confidence model for boolean data (failure / no failure)?

3) Where in ThingWorx Analytics do I declare the actions to start building prescriptive models?

These questions could perhaps be continued in another thread.

As these questions are beyond my ability to answer, I have requested one of our Analytics Field Team and use case experts to review your questions.

Either myself or they will respond to this thread.

Regards,

Neel

@JimmyZamora ,

I have sent you a private message regarding this post. The people I asked to review it indicated that opening a case and setting up a meeting would be the best path forward, since your questions are use case, process, and enablement based.

Regards,

Neel

Hello @JimmyZamora ,

There are several questions in the discussion above. Let's look at them individually:

- Regarding important field weights for an individual prediction, the algorithm starts with the inputs provided and performs a prediction sensitivity analysis. For each variable it varies the value from min to max in a number of increments (for categorical variables it looks at all values), while keeping all the other variables identical to the original example. That gives us a prediction range for each variable. We take all these ranges and normalize by the sum of the ranges. Therefore the relative weights of the variables sum up to 1. In your case, because the prediction above only shows the weight for the single most important variable, it will be a number less than 1, whereas if you predicted with, say, all important features, the weights would sum up to 1.

- In order to set up models to predict the multiple failure modes above, you could start by building a dataset where your sensor readings are columns, and you have a column for each one of the failure modes (3 in this case). The latter will all be of type Boolean (you can use 0 for normal, 1 for a failure). You may choose to have one row for each asset, or multiple rows for each asset, taken at various times. Then, train 3 models, one for each failure mode. The output of these models will be true/false (failure / no failure), and the "_mo" value you see in the prediction above is the actual score coming from the model, which gets converted into this true/false prediction. ThingWorx internally computes the conversion threshold, and it can be found in the PMML of the model (which can, for example, be exported from the UI). The higher the "_mo", the more confidence that the asset is going to fail. If your model is properly calibrated, you can think of the "_mo" as the likelihood of failure for that asset.

- Regarding Prescriptive Analytics, the process is the following: you first build a predictive model either visually, or using the APIs in ThingWorx (look for a Thing named something like AnalyticsServer_TrainingThing and use CreateJob, which is the API used by the UI - that will train the model and return its id - refer to the documentation for working with the APIs). Once you have the model, you need to run prescriptions programmatically, either by calling the API directly or from another ThingWorx service. Look for a Thing named something like AnalyticsServer_PrescriptiveThing (the name may differ slightly based on your install process) with an API called BatchScore or RealtimeScore. There you will need to pass the predictive model id, the levers (the variables that you want a prescription for), the data to prescribe on, and whether you want to maximize or minimize your goal (e.g. chance of failure).
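To make the first point above more concrete, here is a minimal Python sketch of a one-at-a-time sensitivity sweep that produces per-record weights summing to 1. It is an illustration of the idea only, not the ThingWorx implementation: the function `field_weights`, the stand-in `toy_model`, the field names, the ranges, and the number of increments are all assumptions, and categorical variables are left out for brevity.

```python
from typing import Callable, Dict, Tuple

Record = Dict[str, float]


def field_weights(
    predict: Callable[[Record], float],
    record: Record,
    ranges: Dict[str, Tuple[float, float]],
    steps: int = 10,
) -> Dict[str, float]:
    """Relative per-record weights from a one-at-a-time sensitivity sweep."""
    spans: Dict[str, float] = {}
    for name, (lo, hi) in ranges.items():
        scores = []
        for i in range(steps + 1):
            probe = dict(record)  # every other field stays as in the original record
            probe[name] = lo + (hi - lo) * i / steps
            scores.append(predict(probe))
        spans[name] = max(scores) - min(scores)  # prediction range for this field
    total = sum(spans.values())
    if total == 0:
        return {name: 0.0 for name in spans}
    # Normalize by the sum of the ranges so the weights add up to 1.
    return {name: span / total for name, span in spans.items()}


# Hypothetical stand-in for a trained model: a simple linear score.
def toy_model(r: Record) -> float:
    return 0.03 * r["temperature"] + 0.01 * r["vibration"]


record = {"temperature": 80.0, "vibration": 5.0}
ranges = {"temperature": (50.0, 100.0), "vibration": (0.0, 50.0)}
weights = field_weights(toy_model, record, ranges)
```

In this toy case the score moves three times as much when temperature is swept as when vibration is swept, so temperature gets roughly three quarters of the weight. If only the top field were reported, its weight alone would be less than 1, which matches the behavior described above.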

If you would like to delve deeper into the details of your use cases, we would be happy to connect you with our Field team who can advise how to tailor the approach to your specific needs.

Regards,

Stefan

Hi @sniculescu,

Thank you very much for the answers, Stefan. Knowing that important field weights come from a sensitivity analysis of the prediction clarifies the big picture for me. But I still can't figure out how to deliver practical value with this information. I need to give it some thought; if you have an example, that would be very helpful.

The information on building the multi-failure model for an asset is very interesting and confirms a couple of theories. My main questions on this topic are:

1) How to be sure that the model is correctly calibrated and the "_mo" value corresponds effectively to the real probability?

2) What are the requirements for this?

I reiterate my thanks for your willingness to help and solve with clarity the doubts of the community. For my part, I will be building and refining predictive models. Once I get good datasets and have correctly posed the data science problems I will move on to prescriptive models, which look super interesting.

Best regards,

Jimmy

Hello @JimmyZamora ,

In order to deliver practical value, I recommend you discuss with our Field team. They can advise once you have a concrete use case, or discuss sample use cases.

Regarding your questions:

1) You can check for model calibration by splitting your validation set predictions into bins: [0, 0.1), [0.1, 0.2), ..., [0.9, 1]. Then, in each bin you compute the predicted risk (the average prediction) and the actual risk (the percentage of failures in your data). Plot the predicted vs actual risk for each bin. If the model is calibrated as a probability, your points will lie close to the main diagonal. Note that even if the model is not calibrated, it can still be used very successfully for risk prediction, but the predictions cannot be interpreted as probabilities. In that case, the model automatically identifies the "optimal" threshold to transform the scores ("_mo" values) into failure predictions.

2) Typically, the requirements for the solution are use case dependent. From a data science perspective, you want to have enough high quality data to build accurate models. There is not a hard and fast number for the size of the dataset, but generally speaking, the more variables you want to model, the more data you need. Also, if failures are rare, you need to track the process / assets over a longer period of time to ensure enough failures are collected. Before building models you may want to perform some data cleanup (drop variables with too much missing data, fill missing data otherwise, check for outliers / incorrect values, etc).
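The calibration check from point 1 can be sketched in a few lines of Python. This is only an illustration under assumptions: the function name `calibration_table` is made up, the scores are assumed to lie in [0, 1], and the sample scores and outcomes are invented rather than taken from a real validation set.

```python
from typing import List, Sequence, Tuple


def calibration_table(
    scores: Sequence[float],
    outcomes: Sequence[int],
    n_bins: int = 10,
) -> List[Tuple[float, float, int]]:
    """Return (mean predicted score, observed failure rate, count) per non-empty bin."""
    bins: List[List[Tuple[float, int]]] = [[] for _ in range(n_bins)]
    for s, y in zip(scores, outcomes):
        # Scores are assumed to be in [0, 1]; the last bin is closed, i.e. [0.9, 1].
        idx = min(int(s * n_bins), n_bins - 1)
        bins[idx].append((s, y))
    table = []
    for members in bins:
        if not members:
            continue  # skip bins with no validation records
        predicted = sum(s for s, _ in members) / len(members)
        actual = sum(y for _, y in members) / len(members)
        table.append((predicted, actual, len(members)))
    return table


# Invented validation data: model scores and actual outcomes (1 = failure).
scores = [0.05, 0.15, 0.15, 0.85, 0.95, 0.95]
outcomes = [0, 0, 1, 1, 1, 1]
table = calibration_table(scores, outcomes)
for predicted, actual, count in table:
    print(f"predicted={predicted:.2f} actual={actual:.2f} n={count}")
```

Plotting `predicted` against `actual` for each row gives the diagonal plot described above. With very few records per bin the observed rates are noisy, so it is worth looking at the counts before drawing conclusions.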

Regards,

--Stefan