not able to find "queue" directory after installing dataconnect rpm package

Hi,

I am trying to install ThingWorx DataConnect 8.0 on a CentOS 7 machine.

As mentioned in the DataConnect installation guide, I need to install Apache Spark 1.4.0 with Hadoop 2.4, but I wasn't able to find that version of Apache Spark.

So I used Apache Spark 1.6.0 with Hadoop 2.6 instead, and installed all the other required software.

After setting up this third-party software I installed DataConnect 8.0. But after installing the DataConnect rpm package, I cannot find the "queue" package in the /opt/dataconnect directory.

I should also mention that I have installed ThingWorx Analytics Server 8.0 on the same CentOS machine.

Regards

Rahul T

- Labels: Analytics, Install-Upgrade

- Tags: dataconnect

Hi Rahul,

You can find Spark 1.4.0 at the link below:

https://archive.apache.org/dist/spark/spark-1.4.0/

Download the file spark-1.4.0-bin-hadoop2.4.tgz from that page, then retry the installation using Spark 1.4.0.

Hopefully, this will solve the issue you are facing.

Best Regards,

Amine

Hi,

I tried the installation using Spark 1.4.0, but I am still facing the same issue.

Is there any specific reason for this problem?

Regards

Rahul T

Hi Rahul

/opt/dataconnect/queue is not a package. It is the directory where jobs are queued.

From what I could find, if it does not exist it should be created upon first job execution.

Are you facing a specific issue while running a job?

If not, why did you look at that specific location (I am just curious)?

Thanks

Kind regards

Christophe

Hi,

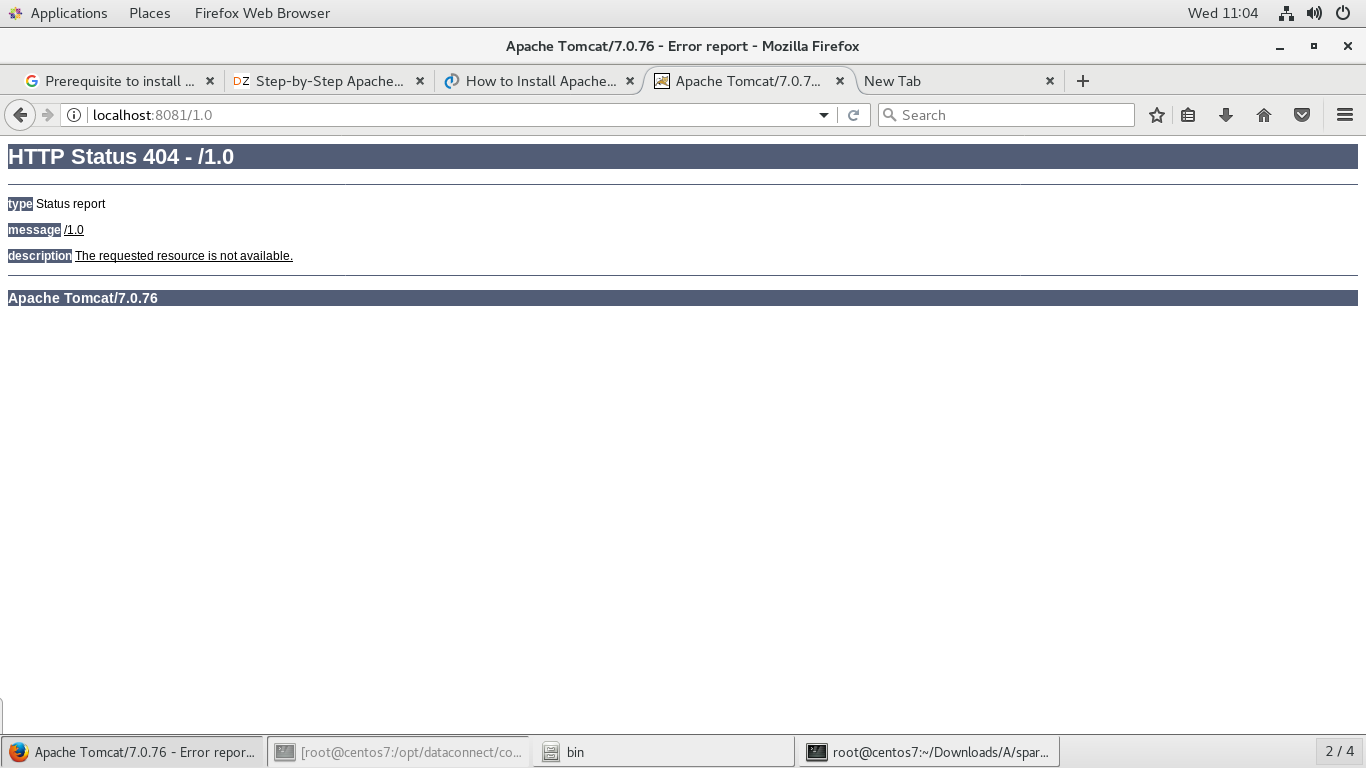

I am sharing the dataconnect.conf file and a screenshot of the browser when I open the link "http://localhost:8081/1.0".

Could you please suggest what I am doing wrong in the installation setup?

Regards

Rahul T

dataconnect.conf

# Please ensure that all bracketed placeholders have been replaced with proper

# configuration values before starting Tomcat.

# This property specifies where the job queue is maintained

jobqueue.dir=/opt/dataconnect/queue

# This property specifies the number of jobs which can be placed in the job

# queue before it is full. Once the job queue is full, no more jobs will be

# accepted until the queue has space.

jobqueue.capacity=2000

# This property specifies the number of active job executors

jobexecutor.num.threads=3

# This property specifies the number of seconds a full job queue will wait before

# rejecting new jobs

jobexecutor.offer.timeout.seconds=5

# This is the URL of neuron instance or load balancer where datasets

# will be submitted.

neuron.base.url=[http://neuron-url]/1.0

# This is the URL of the dataconnect server

dataconnect.webservices.url=[http://local-address]

# This is the base URI of a central file store where to save files uploaded to dataconnect.

dataconnect.transfer.uri=[s3://bucketname/]

# These are the AWS access key id and secret key. They are only needed if using S3 as a central file repository

dataconnect.aws.accessKey=

dataconnect.aws.secretKey=

# The following properties are database connection properties

# Dataconnect currently supports the H2 database and PostgresQL

Hi Rahul

Your dataconnect.conf has some mistakes.

neuron.base.url

dataconnect.webservices.url

dataconnect.transfer.uri

need to be set to the correct values for your system.

You can see a sample of that file with correct values at "How to use DataConnect when sending property values from the ThingWorx platform to ThingWorx Analytics".

Note that for 8.0, neuron.base.url probably needs analytics added to the URI, for example: http://myserver:myport/analytics/1.0

Once the file is updated, restart Tomcat.

You may also want to check "Re: I do not see any dataset in the Analytics Builder with the Tractor sample."

Kind regards

Christophe

Hello Christophe Morfin,

After updating neuron.base.url I am still facing the same issue, i.e. after restarting Tomcat, when I open http://localhost:8081/1.0 I get 404 Not Found. There are a few things I would like to know:

1) What does the command yum install /<path to RPM file>/DataConnect-<version>.noarch.rpm do?

2) Where can I find the server log files? I know that /opt/dataconnect/logs holds the job-specific logs, but I want to see the application logs.

Thanks in advance.

Rahul.

Hi Rahul

The yum command installs the DataConnect components (under /opt) and deploys the application inside Tomcat.

/opt/dataconnect/logs does not contain only job-execution logs; some startup issues are also written there (especially in the dataconnect.log file).

<tomcat>/logs is the other location to check if Tomcat cannot deploy the application.
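When a log file is long, the root cause of a Java failure usually sits in a "Caused by:" line of the stack trace. The following is a minimal Python sketch for pulling those lines out of one or more log files. The helper name `caused_by_lines` is hypothetical, and the log paths are passed on the command line rather than assumed, since the exact Tomcat log location depends on the installation.

```python
import sys


def caused_by_lines(log_text):
    """Return every 'Caused by:' line from a Java stack trace, in file order."""
    return [
        line.strip()
        for line in log_text.splitlines()
        if line.strip().startswith("Caused by:")
    ]


if __name__ == "__main__" and len(sys.argv) > 1:
    # Log file paths are supplied by the caller, for example
    # /opt/dataconnect/logs/dataconnect.log or a file under <tomcat>/logs.
    for path in sys.argv[1:]:
        with open(path, errors="replace") as handle:
            for line in caused_by_lines(handle.read()):
                print(f"{path}: {line}")
```

Saved under a name of your choice, it takes the log files to scan as arguments and prints each match prefixed with the file it came from.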

Kind regards

Christophe

Hi Christophe,

As I mentioned previously, I have updated /opt/dataconnect/config/dataconnect.conf,

but I am still facing the same issue. I am sharing the dataconnect.conf file and the Tomcat log file.

Please check these files and suggest what is missing in the setup.

Regards

Rahul T

Hi Christophe,

I am sharing the dataconnect.conf file and the Tomcat log file here.

/opt/dataconnect/config/dataconnect.conf

# Please ensure that all bracketed placeholders have been replaced with proper

# configuration values before starting Tomcat.

# This property specifies where the job queue is maintained

jobqueue.dir=/opt/dataconnect/queue

# This property specifies the number of jobs which can be placed in the job

# queue before it is full. Once the job queue is full, no more jobs will be

# accepted until the queue has space.

jobqueue.capacity=2000

# This property specifies the number of active job executors

jobexecutor.num.threads=3

# This property specifies the number of seconds a full job queue will wait before

# rejecting new jobs

jobexecutor.offer.timeout.seconds=5

# This is the URL of neuron instance or load balancer where datasets

# will be submitted.

neuron.base.url=http://10.1.101.15:8080/analytics/1.0

# This is the URL of the dataconnect server

dataconnect.webservices.url=http://10.1.101.15:8081/1.0

# This is the base URI of a central file store where to save files uploaded to dataconnect.

dataconnect.transfer.uri=[s3://bucketname/]

# These are the AWS access key id and secret key. They are only needed if using S3 as a central file repository

dataconnect.aws.accessKey=

dataconnect.aws.secretKey=

# The following properties are database connection properties

# Dataconnect currently supports the H2 database and PostgresQL

dataconnect.database.driver=org.h2.Driver

dataconnect.database.url=jdbc:h2:/opt/dataconnect/db/dataconnect

dataconnect.database.username=[username]

dataconnect.database.password=[password]

This is the Tomcat log:

Caused by: java.io.FileNotFoundException: /opt/dataconnect/db/dataconnect.mv.db (Permission denied)

at java.io.RandomAccessFile.open0(Native Method)

at java.io.RandomAccessFile.open(RandomAccessFile.java:316)

at java.io.RandomAccessFile.<init>(RandomAccessFile.java:243)

at java.io.RandomAccessFile.<init>(RandomAccessFile.java:124)

at org.h2.store.fs.FileNio.<init>(FilePathNio.java:43)

at org.h2.store.fs.FilePathNio.open(FilePathNio.java:23)

at org.h2.mvstore.FileStore.open(FileStore.java:153)

... 88 more

Regards

Rahul T

Rahul

You still have properties with default values in your dataconnect.conf (all the values in brackets [] need to be replaced with actual values, as shown at "How to use DataConnect when sending property values from the ThingWorx platform to ThingWorx Analytics").

You still need to update

dataconnect.transfer.uri

dataconnect.database.username

dataconnect.database.password

The last two properties can initially be set to whatever username and password you want; they will be created at runtime by the software.
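Leftover placeholders are easy to miss by eye. Here is a minimal Python sketch that lists every property whose value still contains a bracketed token such as [username] or [s3://bucketname/]. The function name `find_placeholders` is hypothetical, and the sketch assumes the simple key=value layout with # comments shown in this thread; the path to dataconnect.conf is passed as an argument.

```python
import re
import sys

# Matches any bracketed token, e.g. [username] or [http://neuron-url].
PLACEHOLDER = re.compile(r"\[[^\]]*\]")


def find_placeholders(text):
    """Return (key, value) pairs whose value still contains a [bracketed] token."""
    hits = []
    for line in text.splitlines():
        line = line.strip()
        # Skip blank lines, comments, and anything that is not key=value.
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        if PLACEHOLDER.search(value):
            hits.append((key.strip(), value.strip()))
    return hits


if __name__ == "__main__" and len(sys.argv) > 1:
    # The first argument is the path to your dataconnect.conf.
    with open(sys.argv[1]) as handle:
        for key, value in find_placeholders(handle.read()):
            print(f"{key} still has a placeholder: {value}")
```

An empty result only means that no bracketed values remain; it does not confirm that the replaced values are correct for your system.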

If you still face issues after that, please send:

- /opt/dataconnect/conf/dataconnect.conf

- Tomcat/logs folder

- /opt/dataconnect/logs folder

Hope this helps

Kind regards

Christophe

Hi,

I am sharing

1) the /opt/dataconnect/conf/dataconnect.conf file and

2) the /var/log/tomcat file.

I have the /opt/dataconnect/log directory, but there are no files inside it.

Please suggest what changes are required.

Regards

Rahul T

Hi Rahul

Could you do the following:

1) Stop Tomcat

2) Delete the files under /opt/dataconnect/db

3) Restart Tomcat, making sure to start it as the root user.
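The "Permission denied" error on /opt/dataconnect/db/dataconnect.mv.db in the Tomcat log posted earlier suggests the process running Tomcat could not open the H2 database file. Before and after the steps above, a quick check like the following may help confirm that. It is a minimal Python sketch with a hypothetical helper name, `access_report`, and it is only meaningful when run as the same user that runs Tomcat (when run as root, the read and write checks normally pass for any existing path).

```python
import os
import sys


def access_report(path):
    """Report whether the current user can see, read, and write `path`."""
    return {
        "exists": os.path.exists(path),
        "readable": os.access(path, os.R_OK),
        "writable": os.access(path, os.W_OK),
    }


if __name__ == "__main__" and len(sys.argv) > 1:
    # Pass the paths to check, for example /opt/dataconnect/db and
    # /opt/dataconnect/queue (both taken from this thread).
    for path in sys.argv[1:]:
        print(path, access_report(path))
```

If the directory shows as not writable for the Tomcat user, the database files cannot be created there either, which would be consistent with the error in the log.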

Kind regards

Christophe

Hi Rahul

I notice that you have opened a ticket with Technical Support for the same topic, which is a good idea.

However, to avoid duplicated effort and the risk of conflicting information, I would advise keeping the activity on this installation within the Technical Support ticket.

We will therefore consider this Community thread closed.

Thank you

Kind regards

Christophe