
org.hibernate.exception.GenericJDBCException: Could not open connection


Hi everyone,

I have this problem when using the Analytics Server (and Builder). I've attached a log file.

The connection test is OK, but when I click "Data", this error appears in the log and in a popup message: "org.hibernate.exception.GenericJDBCException: Could not open connection".

Is anyone else in my situation?

Please help!


Accepted Solutions


Summary of what has been found offline:

The remaining issue, with the error "Unexpected exception for job [10]: com.coldlight.dataconnect.transformer.exception.TransformationException: ExecutorLostFailure (executor driver lost)]", was related to the data, not to the DataConnect configuration.

- Two features were string features whose values did not change over time. The advice was to change these features from timed to static, or simply to remove them from the DAD. See also https://support.ptc.com/appserver/cs/view/solution.jsp?n=CS263713 for details.

- The time sampling interval was set to 10 ms, while the data were received every 500 ms. Changing the time sampling interval to 500 ms to match the data also reduced the amount of transformation work DataConnect had to do.

These two points led to high memory usage and the termination of the process, hence the error message.
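To see why the 10 ms interval mattered, a back-of-the-envelope calculation helps. The sketch below assumes, as a simplification, that DataConnect produces one resampled value per timed feature per sampling step; its actual transformation may differ, so treat the result as an order-of-magnitude estimate only. The function name is illustrative.

```python
# Back-of-the-envelope estimate: resampled values per timed feature.
# Simplifying assumption: one value is produced per sampling step; the real
# DataConnect transformation may differ.

ONE_HOUR_MS = 60 * 60 * 1000


def steps_per_window(window_ms: int, sampling_interval_ms: int) -> int:
    """Number of sampling steps that fit in a window of window_ms milliseconds."""
    if sampling_interval_ms <= 0:
        raise ValueError("sampling interval must be positive")
    return window_ms // sampling_interval_ms


at_10_ms = steps_per_window(ONE_HOUR_MS, 10)    # 360000 steps per hour
at_500_ms = steps_per_window(ONE_HOUR_MS, 500)  # 7200 steps per hour
print(at_10_ms, at_500_ms, at_10_ms // at_500_ms)  # 360000 7200 50
```

Under that assumption, with readings arriving only every 500 ms, 49 out of every 50 steps at 10 ms carry no new reading, yet each still has to be produced for every timed feature of every entity.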

An additional issue appeared, with the error "Failed to create directory within 10000 attempts (tried 1496164223593-0 to 1496164223593-9999)]", caused by the deletion of Tomcat's temp folder. See https://support.ptc.com/appserver/cs/view/solution.jsp?n=CS263805 for details on how to fix it.
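The wording of that error appears to match the message produced by Guava's Files.createTempDir(), which tries to create "<current millis>-0" through "<current millis>-9999" under the directory named by the java.io.tmpdir system property; that DataConnect uses this helper is an assumption. Tomcat's startup scripts typically point java.io.tmpdir at CATALINA_TMPDIR (by default $CATALINA_BASE/temp), so if that folder is deleted, every attempt fails. The Python sketch below only mimics that retry loop to illustrate the failure mode and why recreating the parent folder fixes it; both function names are hypothetical.

```python
import os
import time

TEMP_DIR_ATTEMPTS = 10000


def create_temp_dir(base_dir: str) -> str:
    """Create a uniquely named subdirectory of base_dir, retrying with the
    '<millis>-<n>' pattern seen in the error message. Raises if all attempts fail."""
    base_name = f"{int(time.time() * 1000)}-"
    for counter in range(TEMP_DIR_ATTEMPTS):
        candidate = os.path.join(base_dir, f"{base_name}{counter}")
        try:
            os.mkdir(candidate)  # fails on every attempt if base_dir itself is missing
            return candidate
        except OSError:
            continue
    raise RuntimeError(
        f"Failed to create directory within {TEMP_DIR_ATTEMPTS} attempts "
        f"(tried {base_name}0 to {base_name}{TEMP_DIR_ATTEMPTS - 1})"
    )


def ensure_temp_root(base_dir: str) -> str:
    """Recreate the temp root (e.g. Tomcat's temp folder) if it was deleted."""
    os.makedirs(base_dir, exist_ok=True)
    return base_dir
```

On the VM itself, the equivalent step would be recreating the temp folder with ownership for the user Tomcat runs as (tomcat, judging by the ps -ef output later in this thread) and restarting Tomcat; the linked article remains the reference for the actual fix.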

The DataConnect job succeeded after these modifications.


These are my dataconnect.conf settings:

neuron.base.url=http://localhost:8080/1.0

# This is the URL of the dataconnect server

dataconnect.webservices.url=http://localhost

# This is the base URI of a central file store where to save files uploaded to dataconnect.

dataconnect.transfer.uri=file:///tmp/DataConnect

# These are the AWS access key id and secret key. They are only needed if using S3 as a central file repository

dataconnect.aws.accessKey=

dataconnect.aws.secretKey=

# The following properties are database connection properties

# Dataconnect currently supports the H2 database and PostgresQL

dataconnect.database.driver=org.h2.Driver

dataconnect.database.url=jdbc:h2:/opt/dataconnect/db/dataconnect

dataconnect.database.username=dcuser

dataconnect.database.password=ts

dataconnect.database.type=H2
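A quick sanity check on a file like this can be scripted. The sketch below parses simple key=value lines (a simplification of the full Java .properties syntax) and checks that the JDBC driver class agrees with the JDBC URL prefix, using the standard driver classes for the two databases the file's comments mention. The function names are illustrative. Note that such a check cannot catch the problem that turned out to matter later in this thread (H2 rejecting the stored credentials); that only shows up when actually connecting.

```python
from typing import Dict, List

# Standard JDBC driver class -> URL prefix for the two databases that the
# dataconnect.conf comments mention (H2 and PostgreSQL).
DRIVER_URL_PREFIX = {
    "org.h2.Driver": "jdbc:h2:",
    "org.postgresql.Driver": "jdbc:postgresql:",
}


def parse_conf(text: str) -> Dict[str, str]:
    """Parse simple key=value lines, skipping blank lines and '#' comments."""
    props: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition("=")  # split on the first '=' only
        if sep:
            props[key.strip()] = value.strip()
    return props


def database_problems(props: Dict[str, str]) -> List[str]:
    """Report obvious mismatches between the JDBC driver and the JDBC URL."""
    problems: List[str] = []
    driver = props.get("dataconnect.database.driver", "")
    url = props.get("dataconnect.database.url", "")
    expected = DRIVER_URL_PREFIX.get(driver)
    if expected is None:
        problems.append(f"unknown driver: {driver!r}")
    elif not url.startswith(expected):
        problems.append(f"URL {url!r} does not start with {expected!r}")
    if not props.get("dataconnect.database.username"):
        problems.append("empty database username")
    return problems
```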


Hi Luigi

Is this a new ThingWorx Analytics Server VM that you just built, or one that used to work before?

From the error you get when selecting DATA in Builder, there seems to be an issue connecting to the database.

The first thing to check is whether the PostgreSQL database has been successfully installed on this VM. Running ps -ef | grep post should show whether any PostgreSQL processes are running.
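If you would rather script this check, the sketch below filters ps -ef output for PostgreSQL processes. It is a little stricter than grep post, which also matches postfix mail processes; the function name is illustrative.

```python
from typing import List


def postgres_processes(ps_output: str) -> List[str]:
    """Return the lines of `ps -ef` output that belong to PostgreSQL.

    Unlike `grep post`, this ignores postfix processes: a line is kept only
    if its UID is 'postgres' or its command mentions 'postgres'."""
    matches: List[str] = []
    for line in ps_output.splitlines():
        # ps -ef columns: UID PID PPID C STIME TTY TIME CMD (CMD may contain spaces)
        fields = line.split(None, 7)
        if len(fields) < 8 or fields[0] == "UID":
            continue  # skip the header and malformed lines
        uid, cmd = fields[0], fields[7]
        if uid == "postgres" or "postgres" in cmd:
            matches.append(line)
    return matches
```

On the VM you could pass it live output, for example subprocess.run(["ps", "-ef"], capture_output=True, text=True).stdout (Python 3.7 or later). An empty result would point to the missing-PostgreSQL problem described next.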

There is currently an issue with the VM Packer script: it attempts to install an obsolete PostgreSQL version, so the PostgreSQL database ends up not being installed.

The workaround is simply to change the download link and recreate the ThingWorx Analytics Server VM. See https://support.ptc.com/appserver/cs/view/solution.jsp?n=CS256240 for details on that.

Hope this helps

Kind regards

Christophe


Hi Christophe,

I installed this ThingWorx Analytics Server VM in December 2016, and it worked until yesterday, when I installed and configured DataConnect.

This is the output of the ps -ef command:


[root@itfioxtdb04 /]# ps -ef

UID PID PPID C STIME TTY TIME CMD

root 1 0 0 11:59 ? 00:00:02 /usr/lib/systemd/systemd --switc

root 2 0 0 11:59 ? 00:00:00 [kthreadd]

root 3 2 0 11:59 ? 00:00:00 [ksoftirqd/0]

root 5 2 0 11:59 ? 00:00:00 [kworker/0:0H]

root 7 2 0 11:59 ? 00:00:00 [migration/0]

root 8 2 0 11:59 ? 00:00:00 [rcu_bh]

root 9 2 0 11:59 ? 00:00:00 [rcuob/0]

root 10 2 0 11:59 ? 00:00:00 [rcuob/1]

root 11 2 0 11:59 ? 00:00:00 [rcu_sched]

root 12 2 0 11:59 ? 00:00:00 [rcuos/0]

root 13 2 0 11:59 ? 00:00:00 [rcuos/1]

root 14 2 0 11:59 ? 00:00:00 [watchdog/0]

root 15 2 0 11:59 ? 00:00:00 [watchdog/1]

root 16 2 0 11:59 ? 00:00:00 [migration/1]

root 17 2 0 11:59 ? 00:00:00 [ksoftirqd/1]

root 19 2 0 11:59 ? 00:00:00 [kworker/1:0H]

root 20 2 0 11:59 ? 00:00:00 [khelper]

root 21 2 0 11:59 ? 00:00:00 [kdevtmpfs]

root 22 2 0 11:59 ? 00:00:00 [netns]

root 23 2 0 11:59 ? 00:00:00 [perf]

root 24 2 0 11:59 ? 00:00:00 [writeback]

root 25 2 0 11:59 ? 00:00:00 [kintegrityd]

root 26 2 0 11:59 ? 00:00:00 [bioset]

root 27 2 0 11:59 ? 00:00:00 [kblockd]

root 28 2 0 11:59 ? 00:00:00 [md]

root 33 2 0 11:59 ? 00:00:00 [khungtaskd]

root 34 2 0 11:59 ? 00:00:06 [kswapd0]

root 35 2 0 11:59 ? 00:00:00 [ksmd]

root 36 2 0 11:59 ? 00:00:00 [khugepaged]

root 37 2 0 11:59 ? 00:00:00 [fsnotify_mark]

root 38 2 0 11:59 ? 00:00:00 [crypto]

root 46 2 0 11:59 ? 00:00:00 [kthrotld]

root 48 2 0 11:59 ? 00:00:00 [kmpath_rdacd]

root 50 2 0 11:59 ? 00:00:00 [kpsmoused]

root 51 2 0 11:59 ? 00:00:00 [ipv6_addrconf]

root 52 2 0 11:59 ? 00:00:00 [kworker/1:2]

root 71 2 0 11:59 ? 00:00:00 [deferwq]

root 102 2 0 11:59 ? 00:00:00 [kauditd]

root 279 2 0 11:59 ? 00:00:00 [ata_sff]

root 280 2 0 11:59 ? 00:00:00 [scsi_eh_0]

root 281 2 0 11:59 ? 00:00:00 [scsi_tmf_0]

root 282 2 0 11:59 ? 00:00:00 [scsi_eh_1]

root 283 2 0 11:59 ? 00:00:00 [scsi_tmf_1]

root 286 2 0 11:59 ? 00:00:00 [events_power_ef]

root 287 2 0 11:59 ? 00:00:00 [mpt_poll_0]

root 288 2 0 11:59 ? 00:00:00 [mpt/0]

root 291 2 0 11:59 ? 00:00:00 [scsi_eh_2]

root 292 2 0 11:59 ? 00:00:00 [scsi_tmf_2]

root 298 2 0 11:59 ? 00:00:00 [ttm_swap]

root 374 2 0 11:59 ? 00:00:00 [kworker/0:1H]

root 415 2 0 11:59 ? 00:00:00 [kdmflush]

root 416 2 0 11:59 ? 00:00:00 [bioset]

root 427 2 0 11:59 ? 00:00:00 [kdmflush]

root 428 2 0 11:59 ? 00:00:00 [bioset]

root 441 2 0 11:59 ? 00:00:00 [xfsalloc]

root 442 2 0 11:59 ? 00:00:00 [xfs_mru_cache]

root 443 2 0 11:59 ? 00:00:00 [xfs-buf/dm-0]

root 444 2 0 11:59 ? 00:00:00 [xfs-data/dm-0]

root 445 2 0 11:59 ? 00:00:00 [xfs-conv/dm-0]

root 446 2 0 11:59 ? 00:00:00 [xfs-cil/dm-0]

root 447 2 0 11:59 ? 00:00:00 [xfs-reclaim/dm-]

root 448 2 0 11:59 ? 00:00:00 [xfs-log/dm-0]

root 449 2 0 11:59 ? 00:00:00 [xfs-eofblocks/d]

root 450 2 0 11:59 ? 00:00:00 [xfsaild/dm-0]

root 529 1 0 11:59 ? 00:00:01 /usr/lib/systemd/systemd-journal

root 551 1 0 11:59 ? 00:00:00 /usr/sbin/lvmetad -f

root 552 2 0 11:59 ? 00:00:00 [rpciod]

root 566 1 0 11:59 ? 00:00:00 /usr/lib/systemd/systemd-udevd

root 607 2 0 11:59 ? 00:00:00 [xfs-buf/sda1]

root 608 2 0 11:59 ? 00:00:00 [xfs-data/sda1]

root 609 2 0 11:59 ? 00:00:00 [xfs-conv/sda1]

root 610 2 0 11:59 ? 00:00:00 [xfs-cil/sda1]

root 611 2 0 11:59 ? 00:00:00 [xfs-reclaim/sda]

root 612 2 0 11:59 ? 00:00:00 [xfs-log/sda1]

root 613 2 0 11:59 ? 00:00:00 [xfs-eofblocks/s]

root 614 2 0 11:59 ? 00:00:00 [xfsaild/sda1]

root 654 2 0 11:59 ? 00:00:00 [kdmflush]

root 655 2 0 11:59 ? 00:00:00 [bioset]

root 665 2 0 11:59 ? 00:00:00 [xfs-buf/dm-2]

root 666 2 0 11:59 ? 00:00:00 [xfs-data/dm-2]

root 667 2 0 11:59 ? 00:00:00 [xfs-conv/dm-2]

root 668 2 0 11:59 ? 00:00:00 [xfs-cil/dm-2]

root 669 2 0 11:59 ? 00:00:00 [xfs-reclaim/dm-]

root 670 2 0 11:59 ? 00:00:00 [xfs-log/dm-2]

root 671 2 0 11:59 ? 00:00:00 [xfs-eofblocks/d]

root 672 2 0 11:59 ? 00:00:00 [xfsaild/dm-2]

root 696 1 0 11:59 ? 00:00:00 /sbin/auditd -n

root 712 696 0 11:59 ? 00:00:00 /sbin/audispd

root 717 712 0 11:59 ? 00:00:00 /usr/sbin/sedispatch

root 720 1 0 11:59 ? 00:00:00 /usr/sbin/alsactl -s -n 19 -c -E

dbus 722 1 0 11:59 ? 00:00:00 /bin/dbus-daemon --system --addr

root 725 1 0 11:59 ? 00:00:00 /usr/sbin/ModemManager

rtkit 726 1 0 11:59 ? 00:00:00 /usr/libexec/rtkit-daemon

root 727 1 0 11:59 ? 00:00:00 /usr/sbin/rsyslogd -n

libstor+ 729 1 0 11:59 ? 00:00:00 /usr/bin/lsmd -d

root 730 1 0 11:59 ? 00:00:00 /usr/libexec/accounts-daemon

root 732 1 0 11:59 ? 00:00:00 /usr/sbin/abrtd -d -s

root 736 1 0 11:59 ? 00:00:00 /usr/sbin/smartd -n -q never

root 737 1 0 11:59 ? 00:00:00 /usr/bin/abrt-watch-log -F Backt

root 739 1 0 11:59 ? 00:00:00 /usr/sbin/irqbalance --foregroun

chrony 742 1 0 11:59 ? 00:00:00 /usr/sbin/chronyd

root 743 1 0 11:59 ? 00:00:01 /usr/bin/vmtoolsd

root 744 1 0 11:59 ? 00:00:02 /sbin/rngd -f

avahi 745 1 0 11:59 ? 00:00:00 avahi-daemon: running [itfioxtdb

root 748 1 0 11:59 ? 00:00:00 /usr/lib/systemd/systemd-logind

root 749 1 0 11:59 ? 00:00:00 /usr/bin/abrt-watch-log -F BUG:

root 757 1 0 11:59 ? 00:00:00 /usr/sbin/NetworkManager --no-da

avahi 762 745 0 11:59 ? 00:00:00 avahi-daemon: chroot helper

root 764 1 0 11:59 ? 00:00:00 /bin/bash /usr/sbin/ksmtuned

root 783 1 0 11:59 ? 00:00:00 /usr/sbin/gssproxy -D

polkitd 789 1 0 11:59 ? 00:00:02 /usr/lib/polkit-1/polkitd --no-d

root 815 1 0 11:59 ? 00:00:00 /usr/sbin/wpa_supplicant -u -f /

zookeep+ 1079 1 0 11:59 ? 00:00:01 /usr/bin/java -cp /opt/zookeeper

tomcat 1085 1 99 11:59 ? 00:21:42 /usr/lib/jvm/jre/bin/java -Xmx10

root 1086 1 0 11:59 ? 00:00:00 /usr/bin/python -Es /usr/sbin/tu

root 1093 1 0 11:59 ? 00:00:00 /usr/sbin/sshd -D

root 1102 1 0 11:59 ? 00:00:00 /usr/sbin/cupsd -f

root 1105 1 0 11:59 ? 00:00:00 /usr/sbin/xrdp-sesman --nodaemon

root 1106 1 0 11:59 ? 00:00:00 /usr/sbin/xrdp --nodaemon

root 1112 1 0 11:59 ? 00:00:00 /usr/sbin/libvirtd

root 1122 1 0 11:59 ? 00:00:00 /usr/sbin/atd -f

root 1123 1 0 11:59 ? 00:00:00 /usr/sbin/crond -n

root 1125 1 0 11:59 ? 00:00:00 /usr/sbin/gdm

postgres 1200 1 0 11:59 ? 00:00:00 /usr/pgsql-9.4/bin/postgres -D /

root 1256 1125 0 11:59 tty1 00:00:00 /usr/bin/Xorg :0 -background non

postgres 1441 1200 0 11:59 ? 00:00:00 postgres: logger process

postgres 1487 1200 0 11:59 ? 00:00:00 postgres: checkpointer process

postgres 1488 1200 0 11:59 ? 00:00:00 postgres: writer process

postgres 1489 1200 0 11:59 ? 00:00:00 postgres: wal writer process

postgres 1490 1200 0 11:59 ? 00:00:00 postgres: autovacuum launcher pr

postgres 1491 1200 0 11:59 ? 00:00:00 postgres: stats collector proces

root 1764 1 0 11:59 ? 00:00:00 /usr/libexec/postfix/master -w

postfix 1782 1764 0 11:59 ? 00:00:00 qmgr -l -t unix -u

root 2435 1125 0 11:59 ? 00:00:00 gdm-session-worker [pam/gdm-laun

nobody 2481 1 0 11:59 ? 00:00:00 /sbin/dnsmasq --conf-file=/var/l

root 2483 2481 0 11:59 ? 00:00:00 /sbin/dnsmasq --conf-file=/var/l

gdm 2512 2435 0 11:59 ? 00:00:00 /usr/bin/gnome-session --autosta

gdm 2515 1 0 11:59 ? 00:00:00 /usr/bin/dbus-launch --exit-with

gdm 2520 1 0 11:59 ? 00:00:00 /bin/dbus-daemon --fork --print-

gdm 2529 1 0 11:59 ? 00:00:00 /usr/libexec/at-spi-bus-launcher

root 2530 1 0 11:59 ? 00:00:05 /bin/java -Dwrapper.pidfile=/run

gdm 2538 2529 0 11:59 ? 00:00:00 /bin/dbus-daemon --config-file=/

gdm 2544 1 0 11:59 ? 00:00:00 /usr/libexec/at-spi2-registryd -

gdm 2561 2512 0 11:59 ? 00:00:00 /usr/libexec/gnome-settings-daem

root 2568 1 0 11:59 ? 00:00:00 /usr/libexec/upowerd

gdm 2572 1 0 11:59 ? 00:00:00 /usr/libexec/gvfsd

gdm 2576 1 0 11:59 ? 00:00:00 /usr/libexec/gvfsd-fuse /run/use

root 2597 2530 0 11:59 ? 00:00:06 /bin/java -classpath /opt/yajsw/

gdm 2624 2512 0 11:59 ? 00:00:03 gnome-shell --mode=gdm

colord 2628 1 0 11:59 ? 00:00:00 /usr/libexec/colord

gdm 2641 1 0 11:59 ? 00:00:00 /usr/bin/pulseaudio --start --lo

gdm 2659 1 0 11:59 ? 00:00:00 /usr/libexec/dconf-service

gdm 2668 2624 0 11:59 ? 00:00:00 ibus-daemon --xim --panel disabl

gdm 2673 2668 0 11:59 ? 00:00:00 /usr/libexec/ibus-dconf

gdm 2675 1 0 11:59 ? 00:00:00 /usr/libexec/ibus-x11 --kill-dae

gdm 2682 1 0 11:59 ? 00:00:00 /usr/libexec/mission-control-5

gdm 2684 1 0 11:59 ? 00:00:00 /usr/libexec/caribou

root 2687 1 0 11:59 ? 00:00:00 /usr/libexec/packagekitd

gdm 2698 1 0 11:59 ? 00:00:00 /usr/libexec/goa-daemon

gdm 2712 1 0 11:59 ? 00:00:00 /usr/libexec/gvfs-udisks2-volume

root 2724 1 0 11:59 ? 00:00:00 /usr/lib/udisks2/udisksd --no-de

gdm 2730 1 0 11:59 ? 00:00:00 /usr/libexec/goa-identity-servic

gdm 2750 1 0 11:59 ? 00:00:00 /usr/libexec/gvfs-gphoto2-volume

gdm 2754 1 0 11:59 ? 00:00:00 /usr/libexec/gvfs-mtp-volume-mon

gdm 2758 1 0 11:59 ? 00:00:00 /usr/libexec/gvfs-afc-volume-mon

gdm 2763 1 0 11:59 ? 00:00:00 /usr/libexec/gvfs-goa-volume-mon

gdm 2788 2668 0 11:59 ? 00:00:00 /usr/libexec/ibus-engine-simple

postgres 2848 1200 0 12:00 ? 00:00:00 postgres: neuronuser neuronuser

postgres 2849 1200 0 12:00 ? 00:00:00 postgres: neuronuser neuronuser

postgres 2850 1200 0 12:00 ? 00:00:00 postgres: neuronuser neuronuser

postgres 2851 1200 0 12:00 ? 00:00:00 postgres: neuronuser neuronuser

postgres 2852 1200 0 12:00 ? 00:00:00 postgres: neuronuser neuronuser

postgres 2853 1200 0 12:00 ? 00:00:00 postgres: neuronuser neuronuser

postgres 2854 1200 0 12:00 ? 00:00:00 postgres: neuronuser neuronuser

postgres 2855 1200 0 12:00 ? 00:00:00 postgres: neuronuser neuronuser

postgres 2856 1200 0 12:00 ? 00:00:00 postgres: neuronuser neuronuser

postgres 2857 1200 0 12:00 ? 00:00:00 postgres: neuronuser neuronuser

postfix 3597 1764 0 12:00 ? 00:00:00 pickup -l -t unix -u

root 3604 1106 0 12:00 ? 00:00:08 /usr/sbin/xrdp --nodaemon

root 3605 2 0 12:00 ? 00:00:00 [kworker/1:2H]

root 3690 1105 0 12:01 ? 00:00:00 /usr/sbin/xrdp-sessvc 3692 3691

root 3691 3690 0 12:01 ? 00:00:00 /usr/sbin/xrdp-sesman --nodaemon

root 3692 3690 7 12:01 ? 00:01:17 Xvnc :10 -geometry 1280x773 -dep

root 3695 3691 0 12:01 ? 00:00:00 /bin/gnome-session --session=gno

root 3710 3690 0 12:01 ? 00:00:00 xrdp-chansrv

root 3799 1 0 12:01 ? 00:00:00 dbus-launch --sh-syntax --exit-w

root 3800 1 0 12:01 ? 00:00:00 /bin/dbus-daemon --fork --print-

root 3867 1 0 12:01 ? 00:00:00 /usr/libexec/imsettings-daemon

root 3870 1 0 12:01 ? 00:00:00 /usr/libexec/gvfsd

root 3874 1 0 12:01 ? 00:00:00 /usr/libexec/gvfsd-fuse /run/use

root 3926 3695 0 12:01 ? 00:00:00 /usr/bin/ssh-agent /etc/X11/xini

root 3931 1 0 12:01 ? 00:00:00 /usr/libexec/at-spi-bus-launcher

root 3935 3931 0 12:01 ? 00:00:01 /bin/dbus-daemon --config-file=/

root 3939 1 0 12:01 ? 00:00:00 /usr/libexec/at-spi2-registryd -

root 3954 3695 0 12:01 ? 00:00:00 /usr/libexec/gnome-settings-daem

root 3962 1 0 12:01 ? 00:00:00 /usr/bin/gnome-keyring-daemon --

root 3973 1 0 12:01 ? 00:00:00 /usr/bin/pulseaudio --start

root 3983 3695 27 12:01 ? 00:04:45 /usr/bin/gnome-shell

root 3999 1 0 12:01 ? 00:00:00 /usr/libexec/gsd-printer

root 4007 3983 0 12:01 ? 00:00:00 ibus-daemon --xim --panel disabl

root 4012 4007 0 12:01 ? 00:00:00 /usr/libexec/ibus-dconf

root 4014 1 0 12:01 ? 00:00:00 /usr/libexec/ibus-x11 --kill-dae

root 4016 1 0 12:01 ? 00:00:00 /usr/libexec/gnome-shell-calenda

root 4025 1 0 12:01 ? 00:00:00 /usr/libexec/mission-control-5

root 4029 1 0 12:01 ? 00:00:01 /usr/libexec/caribou

root 4032 1 0 12:01 ? 00:00:00 /usr/libexec/evolution-source-re

root 4034 1 0 12:01 ? 00:00:00 /usr/libexec/dconf-service

root 4043 1 0 12:01 ? 00:00:00 /usr/libexec/goa-daemon

root 4061 1 0 12:01 ? 00:00:00 /usr/libexec/gvfs-udisks2-volume

root 4076 1 0 12:01 ? 00:00:00 /usr/libexec/gvfs-gphoto2-volume

root 4090 1 0 12:01 ? 00:00:00 /usr/libexec/gvfs-mtp-volume-mon

root 4104 1 0 12:01 ? 00:00:00 /usr/libexec/goa-identity-servic

root 4106 1 0 12:01 ? 00:00:00 /usr/libexec/gvfs-afc-volume-mon

root 4121 1 0 12:01 ? 00:00:00 /usr/libexec/gvfs-goa-volume-mon

root 4133 3695 1 12:01 ? 00:00:12 nautilus --no-default-window --f

root 4138 3695 0 12:01 ? 00:00:00 abrt-applet

root 4139 3695 0 12:01 ? 00:00:00 /usr/bin/gnome-software --gappli

root 4140 3695 0 12:01 ? 00:00:00 /usr/bin/seapplet

root 4142 1 0 12:01 ? 00:00:01 /usr/bin/vmtoolsd -n vmusr

root 4148 3695 0 12:01 ? 00:00:00 /usr/libexec/tracker-extract

root 4150 3695 0 12:01 ? 00:00:00 /usr/libexec/tracker-miner-apps

root 4153 3695 0 12:01 ? 00:00:00 /usr/libexec/tracker-miner-fs

root 4157 3695 0 12:01 ? 00:00:00 /usr/libexec/tracker-miner-user-

root 4177 1 0 12:01 ? 00:00:00 /usr/libexec/tracker-store

root 4242 1 0 12:01 ? 00:00:00 /usr/libexec/gconfd-2

root 4247 1 0 12:01 ? 00:00:00 /usr/libexec/gvfsd-trash --spawn

root 4259 1 0 12:01 ? 00:00:00 /usr/libexec/evolution-calendar-

root 4267 4007 0 12:01 ? 00:00:00 /usr/libexec/ibus-engine-simple

root 4270 1 0 12:01 ? 00:00:00 /usr/libexec/gvfsd-metadata

root 4425 1 0 12:02 ? 00:00:00 /usr/libexec/gnome-terminal-serv

root 4428 4425 0 12:02 ? 00:00:00 gnome-pty-helper

root 4429 4425 0 12:02 pts/0 00:00:00 /bin/bash

root 4561 2 0 12:02 ? 00:00:00 [kworker/0:3]

root 4686 3983 5 12:05 ? 00:00:41 /usr/lib64/firefox/firefox

root 4794 2 0 12:06 ? 00:00:00 [kworker/u4:2]

root 4842 2 0 12:07 ? 00:00:00 [kworker/1:1]

root 5064 1 0 12:10 ? 00:00:00 /usr/bin/gedit --gapplication-se

root 5079 2 0 12:11 ? 00:00:00 [kworker/u4:1]

root 5114 2 0 12:12 ? 00:00:00 [kworker/0:0]

root 5209 4425 0 12:17 pts/1 00:00:00 /bin/bash

root 5221 1 0 12:17 ? 00:00:00 /usr/sbin/abrt-dbus -t133

root 5255 2 0 12:17 ? 00:00:00 [kworker/0:1]

root 5264 764 0 12:18 ? 00:00:00 sleep 60

root 5266 5209 0 12:18 pts/1 00:00:00 ps -ef

Do you notice any issues?

In March I successfully configured DataConnect (Re: Thingworx Analytics: Send Data From Dataconnect To Analytics Server), but now I don't know what is happening.

I look forward to your feedback.

Thanks

Luigi


Hi Christophe,

I ran "sudo reboot -h now" and Analytics Builder now runs without the exception popup!

The problem is now with DataConnect; I have these log entries:

- Received error from DataConnect for job status: DataSetName: [Tractor_DAD] - [500 - Internal Server Error: Unexpected Error. Please contact support.].

- No File Repository found in Configuration for [Tractor_DAD]. Falling back to default - [SystemRepository]

- Failed to submit to DataConnect: {"headers":{"Content-type":"application/json","Accept":"application/json","neuron-application-key":"1","neuron-application-id":"neuronuser"},"errorMessage":"Unexpected Error. Please contact support.","errorId":"6f4cad3e-fdb6-4106-9294-600aa3365062","responseStatus":{"reasonPhrase":"Internal Server Error","protocolVersion":{"protocol":"HTTP","major":1,"minor":1},"statusCode":500}}

- Error while processing task : Failed to submit to DataConnect: DataConnect was unable to process request.

- Task-1495533576377 Data Analysis Task Failed. Please check the logs for errors

- Task-1495533576377 Data Analysis Task In Error.
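When comparing entries like these across the ThingWorx and DataConnect logs, it can help to pull the fields out of the JSON payload in the "Failed to submit to DataConnect" line. A minimal sketch, assuming the payload is a single JSON object starting at the first "{" on the line; whether the errorId also appears in DataConnect's own log (under /opt/dataconnect/logs) is an assumption worth checking. The function name is illustrative.

```python
import json
from typing import Any, Dict


def summarize_dataconnect_error(log_line: str) -> Dict[str, Any]:
    """Extract status code, reason, errorId and message from a
    'Failed to submit to DataConnect: {...}' log line."""
    start = log_line.index("{")  # the JSON payload begins at the first '{'
    # raw_decode tolerates any trailing text after the JSON object.
    payload, _end = json.JSONDecoder().raw_decode(log_line[start:])
    status = payload.get("responseStatus", {})
    return {
        "statusCode": status.get("statusCode"),
        "reason": status.get("reasonPhrase"),
        "errorId": payload.get("errorId"),
        "message": payload.get("errorMessage"),
    }
```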

Do you know what the problem is now?

Waiting for your feedback,

Thanks

Luigi


Hi Luigi

Did you install DataConnect on the same VM as ThingWorx Analytics Server ?

As you mentioned, back in March you configured a system with DataConnect. Is this a different system or the same one?

Could you send the following logs:

- /opt/dataconnect/logs

- <tomcat>/logs

Thank you

Kind regards

Christophe


Hi Christophe,

As in March (though that was a different project), I installed ThingWorx Analytics Server and DataConnect on the same VM.

The configuration is the same as in the March project (dataconnect.conf, for example).

I've attached the logs; I hope you can find the mistake.

Thanks

Luigi


Hi Luigi

The error message we get is

Caused by: org.h2.jdbc.JdbcSQLException: Wrong user name or password [28000-190]

so the username and password are rejected when DataConnect attempts to connect to its H2 database.

This database is created at runtime, but it is possible that during a previous start the DataConnect database was created with different credentials, which are no longer accepted.

Could you try to modify /opt/dataconnect/config/dataconnect.conf and change

dataconnect.database.url=jdbc:h2:/opt/dataconnect/db/dataconnect

to

dataconnect.database.url=jdbc:h2:/opt/dataconnect/db/dataconnect2

then restart Tomcat and try again?
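The edit suggested above can also be made with a small script that leaves commented lines alone. A minimal sketch; set_property is a hypothetical helper, and reading and writing /opt/dataconnect/config/dataconnect.conf is left out. Pointing the URL at a new file name should make H2 create a fresh database there on the next connection, using the credentials from the conf file; this relies on H2's default behaviour of creating a missing database.

```python
import re


def set_property(conf_text: str, key: str, value: str) -> str:
    """Return conf_text with the first uncommented 'key=...' line set to 'key=value'.

    Lines starting with '#' never match, so comments are left untouched."""
    pattern = re.compile(rf"^([ \t]*){re.escape(key)}[ \t]*=.*$", re.MULTILINE)
    new_text, count = pattern.subn(
        lambda m: f"{m.group(1)}{key}={value}", conf_text, count=1
    )
    if count == 0:
        raise KeyError(f"{key} not found")
    return new_text
```

The old database files stay on disk untouched, so keeping a copy of the original conf before overwriting it makes it easy to switch back.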

If you still have issue, please send the dataconnect.conf in addition to the dataconnect.log and Tomcat log files.

Note, though, that it is recommended to run ThingWorx Analytics and DataConnect on two different machines (see the DataConnect Guide, page 8, Requirements).

Kind regards

Christophe


Hi Christophe,

Now, after changing

dataconnect.database.url=jdbc:h2:/opt/dataconnect/db/dataconnect

to

dataconnect.database.url=jdbc:h2:/opt/dataconnect/db/dataconnect2

the DataConnect status for Tractor_DAD has changed from "not found" to "Queue".

I've attached the required files.

Now I suspect that this dataconnect.conf isn't the latest version from the previous project. Could you check it?

I know it is recommended to run ThingWorx Analytics and DataConnect on two different machines (see the DataConnect Guide, page 8, Requirements), but we had no issues with the March project.

I look forward to your feedback.

Thanks

Luigi


Hi Luigi

The DataConnect log files you attached are the same as before, so I do not have the entries from when the job was queued.

I do notice something incorrect in your dataconnect.conf file, though.

You have:

neuron.base.url=http://localhost:8080/twxml-connect/1.0

It should be:

neuron.base.url=http://localhost:8080/1.0

neuron.base.url is the URI of the ThingWorx Analytics Server, whereas twxml-connect is the DataConnect webapp.
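Since this mix-up is easy to make when both webapps run in the same Tomcat, a tiny check can catch it. A minimal sketch; the function name is illustrative, and it only encodes the rule stated here (the first path segment must not be twxml-connect), not a full validation of the URL.

```python
from urllib.parse import urlparse


def points_at_dataconnect(neuron_base_url: str) -> bool:
    """True if the first path segment of neuron.base.url is the DataConnect
    webapp (twxml-connect) instead of the ThingWorx Analytics Server root."""
    segments = urlparse(neuron_base_url).path.strip("/").split("/")
    return segments[0] == "twxml-connect"
```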

So please change this, then restart Tomcat.

If the issue persists, please send all the latest log and conf files again.

Thank you

Christophe


Hi Christophe,

I've corrected dataconnect.conf; things have improved, but there are still errors.

I've attached the latest DataConnect and Tomcat logs, and dataconnect.conf.

This is the scenario: I've created a DAD for our analysis, and DataConnect creates a zip file containing all the skinny CSV files that Analytics needs.

But after many hours in the "running" state (approximately 6 hours), I got an error that I don't understand: "Unexpected exception for job [10]: com.coldlight.dataconnect.transformer.exception.TransformationException: ExecutorLostFailure (executor driver lost)]".

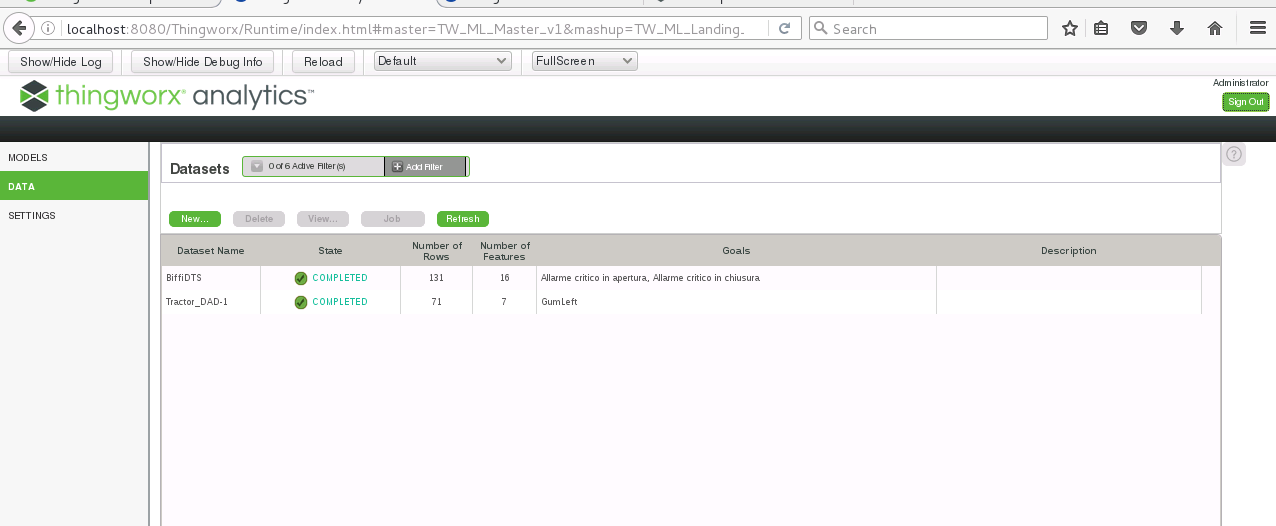

For completeness, I've attached a screenshot of the DAD configuration.

Could you help me again?

Thanks

Luigi


Hi Luigi

A few points here:

- I see that you changed the DAD. Did you confirm that everything works with Tractor_DAD?

Before moving to your own DAD, I would rather stick with this Tractor one (I assume it is the one from "How to use DataConnect when sending property values from the ThingWorx platform to ThingWorx Analytics"), as I know it works.

Once we confirm it works, we will be sure the DataConnect configuration is correct and can then move on to your own DAD.

From the log, I don't see a successful Tractor_DAD run. DataConnect complains about an empty CSV file. It is possible that the properties were not populated, so no data were available. The blog post above provides a small simulator for populating data, or you can populate some manually.

- I also see some errors about a null timeSamplingInterval, so make sure the time sampling interval is set in the DAD General tab.

- I also see some errors about an empty CSV file for your DAD. Here again, this might be due to missing property values, or it could be something else, which is why confirming that Tractor works will help.

- You wrote that the zip is created with skinny CSV files in it. Do you see any data in those CSV files? Could you attach the zip file?
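To answer the last question without opening every file by hand, the data-row count of each CSV file in the zip can be listed. A minimal sketch, assuming each CSV has exactly one header row (the actual skinny file layout is not shown in this thread); a count of 0 would correspond to the "empty csv file" errors in the log. The function name is illustrative.

```python
import csv
import io
import zipfile
from typing import BinaryIO, Dict, Union


def csv_row_counts(archive: Union[str, BinaryIO]) -> Dict[str, int]:
    """Map each .csv member of a zip to its number of non-blank data rows,
    assuming one header row per file."""
    counts: Dict[str, int] = {}
    with zipfile.ZipFile(archive) as zf:
        for name in zf.namelist():
            if not name.lower().endswith(".csv"):
                continue  # ignore anything that is not a CSV file
            with zf.open(name) as raw:
                text = io.TextIOWrapper(raw, encoding="utf-8", newline="")
                rows = [row for row in csv.reader(text) if row]
            counts[name] = max(len(rows) - 1, 0)  # exclude the header row
    return counts
```

Usage: csv_row_counts("/path/to/dataset.zip"), where the path is a placeholder for wherever DataConnect wrote the zip.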

Thank you

Christophe


Hi Christophe,

I've tested the Tractor_DAD example and it works fine.

Now I don't know why the other DAD doesn't work.

I've attached a zip of the skinny files.

I look forward to your feedback.

Regards,

Luigi


Hi Luigi

Could you please also send the payload.json file that is in the same directory as the CSV zip file?

I'd like to run a few tests to confirm the issue I can see with the data in the CSV files.
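The offline investigation (summarized in the accepted solution) found string features that never changed over time. A check for that pattern could look like the sketch below. The column names are assumptions, since the skinny CSV layout is not shown in this thread, and the function name is hypothetical. Features it returns are candidates to mark as static in the DAD, or to remove, as the solution suggests.

```python
import csv
import io
from collections import defaultdict
from typing import Dict, List, Set, Tuple


def static_feature_candidates(csv_text: str) -> List[str]:
    """Return features whose value never changes over time for any entity.

    Assumes a hypothetical skinny layout with a header row and the columns
    'entity', 'timestamp', 'feature' and 'value'; adjust to the real files."""
    distinct: Dict[Tuple[str, str], Set[str]] = defaultdict(set)
    for row in csv.DictReader(io.StringIO(csv_text)):
        distinct[(row["entity"], row["feature"])].add(row["value"])

    constant_per_entity: Dict[str, List[bool]] = defaultdict(list)
    for (_entity, feature), values in distinct.items():
        constant_per_entity[feature].append(len(values) == 1)

    # A feature is a static candidate only if it is constant for every entity.
    return sorted(f for f, flags in constant_per_entity.items() if all(flags))
```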

Thank you

Kind regards

Christophe


Here is the payload.json created by DataConnect.

Regards,

Luigi


Hi, do you have any news on this?


Hi Marco

I have taken this offline with Luigi.

I will update the thread when we get to the bottom of it.

Kind regards

Christophe
