Arrow Navigator

I have a relatively large model, and I have found that users simply overlook the important places (e.g. 3D images) or cannot find them.

My idea is a 3D model in the form of an arrow. The arrow should be fixed in front of the camera and always turn toward the "important place (3D img)".

I have already seen in other articles that binding widgets in front of the camera is no problem (e.g. https://community.ptc.com/t5/Vuforia-Studio/Finding-distance-from-the-model/m-p/647022), but how can I make this widget rotate toward the "important place (3D img)"?

How can I implement such functionality? I am sure other users would also benefit from something like this. Thanks a lot in advance.

Hello @Lucas,

I think this is possible using the eye tracking event of the device. The post "https://community.ptc.com/t5/Vuforia-Studio/Get-surface-coordinates-of-a-model/m-p/646408#M7459" demonstrates how to receive the current position of your device. The relevant code is this:

```javascript
////////////////////////////////////
$rootScope.$on('modelLoaded', function() {
  $scope.check_if_works = false;
  //=====================
  // set some properties of the 3D Container
  // the next property is required so that the tracking event calls the callback
  $scope.setWidgetProp('3DContainer-1', 'enabletrackingevents', true);
  $scope.setWidgetProp('3DContainer-1', 'dropshadow', true);
  $scope.setWidgetProp('3DContainer-1', 'extendedtracking', true);
  $scope.setWidgetProp('3DContainer-1', 'persistmap', true);
  //====================
  // pass the function itself (not its result) so it runs after a 2.5 s delay
  $timeout($scope.setMyEYEtrack, 2500);
});
/////////////////////////////////////////////////////

////////////////////////////////////////////////////////////
$scope.setMyEYEtrack = function() {
  if (tml3dRenderer) {
    try {
      tml3dRenderer.setupTrackingEventsCommand(function(target, eyepos, eyedir, eyeup) {
        var scale = 2.0; // distance from the eye
        // move the widget relative to the new viewpoint position of the device
        $scope.setWidgetProp('3DImage-1', 'x', eyepos[0] + eyedir[0] * scale);
        $scope.setWidgetProp('3DImage-1', 'y', eyepos[1] + eyedir[1] * scale);
        $scope.setWidgetProp('3DImage-1', 'z', eyepos[2] + eyedir[2] * scale);
        $scope.$applyAsync();
      }, undefined);
    } catch (e) {
      $scope.setWidgetProp('3DLabel-1', 'text', "exception=" + e);
    }
  } else {
    $scope.setWidgetProp('3DLabel-1', 'text', "null tml3dRenderer object on HoloLens");
  }
}; ////// finish setMyEYEtrack
```

The code shows, once, which properties of the 3D Container widget have to be set (it is better to set them manually rather than via JS).

The other point is that the code moves another widget with the device, continuously updating its position as the device moves.

In the article https://community.ptc.com/t5/Vuforia-Studio/Finding-distance-from-the-model/m-p/647022 the distance was calculated as a vector, namely the difference between the eyepos vector and the model widget position. In your case, this vector (dirVec = yourWidgetPos - eyePosDevice) should point to the widget you want the arrow to follow. The location of the arrow is easy to set, something like posVec + scale * dirVec, but calculating the correct angle so that the arrow follows the direction is more difficult. An easier approach could be to use dots/balls instead, e.g. 3 balls of different sizes placed in the direction of the object:

posBall1 = posVec + scale1 * dirVec

posBall2 = posVec + scale2 * dirVec

posBall3 = posVec + scale3 * dirVec

so that the balls point in the direction of the object.
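The three formulas above can be sketched in plain JavaScript. This is a minimal sketch under assumptions: posVec is taken to be the device eye position (eyepos from the tracking callback), and `ballPositions` is a hypothetical helper, not a Studio API.

```javascript
// Minimal sketch (hypothetical helper names): place "breadcrumb" balls on the
// line from the device eye position toward a target widget.
function sub(a, b) { return [a[0] - b[0], a[1] - b[1], a[2] - b[2]]; }

function normalize(v) {
  const len = Math.hypot(v[0], v[1], v[2]);
  return len > 0 ? [v[0] / len, v[1] / len, v[2] / len] : [0, 0, 0];
}

// posVec: eye position (assumed to be eyepos), targetPos: world position of the
// "important place", scales: distances from the eye at which to place each ball.
function ballPositions(posVec, targetPos, scales) {
  const dirVec = normalize(sub(targetPos, posVec)); // unit vector eye -> target
  return scales.map(s => [
    posVec[0] + s * dirVec[0],
    posVec[1] + s * dirVec[1],
    posVec[2] + s * dirVec[2],
  ]);
}

// Example: eye at the origin, target 10 units along +x;
// the three balls land at x = 0.5, 1.0 and 1.5 on the x axis.
const balls = ballPositions([0, 0, 0], [10, 0, 0], [0.5, 1.0, 1.5]);
console.log(balls);
```

Inside the tracking callback you would then write each returned point to a ball widget, e.g. `$scope.setWidgetProp('ball-1', 'x', p[0])` (the widget names are hypothetical). Normalizing dirVec makes the scales absolute distances, so the balls stay at a fixed distance in front of the user; with the unnormalized dirVec of the formulas above, each scale is instead a fraction of the eye-to-target distance.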

Thank you very much for your answer, @RolandRaytchev. I think the approach with the balls is good. Unfortunately, I cannot picture how to implement it. Could you send me an example experience?

I will check whether such an example exists, or create one in the next couple of days, for this post and for the post https://community.ptc.com/t5/Vuforia-Studio/Distance-between-camera-and-model/m-p/748593#M10118.

Hello Roland, thank you very much!

Hello @Lucas ,

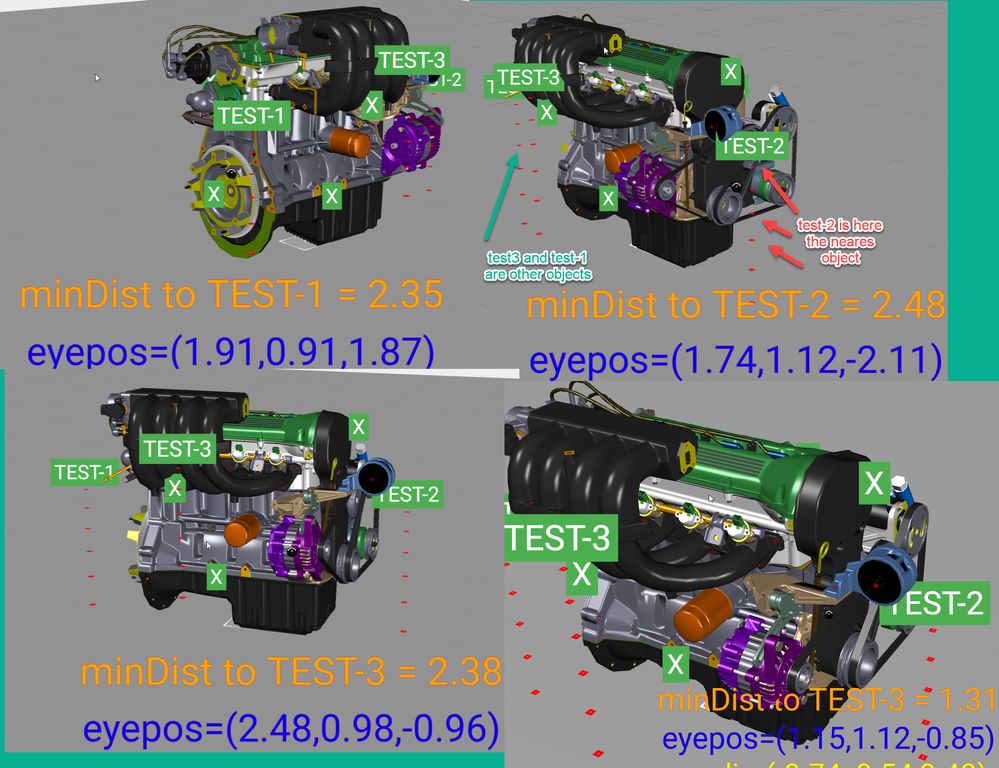

here is an example which should demonstrate the principle of such solution. It definitely not perfect. Here I used a couple of 3d widget ( any 3d like label, image etc.) and display them as path showing the direction to an object. Here the objects are 3D Text widgets with specific coordinates. Depending on the position of the device it will display the distance to the nearest object.

The disadvantage of such solution is that for the path we need to define axillary widgets. Here I used per path 6 -> 3d Widget. So another option is to define only one path which will be displayed to nearest widget.

Hello @RolandRaytchev, I have been working on this solution for quite some time. Unfortunately, I cannot report a positive result: users still do not find the object. Is there no way to put an arrow directly in front of the camera that rotates toward the "point of interest"?

Thanks for your help!

Hello @Lucas,

I agree that this is not trivial and there is no single best solution. We can try different approaches; with some thought and trial and error we may reach an appearance that is usable. We need to calculate the angle between the gaze vector and the direction to the target, and then the Euler angles for that direction vector, which requires some matrix-transform mathematics.
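The angle and Euler-angle step can be sketched as below. This is a sketch under explicit assumptions, not a Studio API: Y-up right-handed world coordinates, an arrow model that points along +Z when rx = ry = rz = 0, and the rotation order R = Ry(yaw) * Rx(pitch). Whether Studio applies rx/ry/rz in that order must be verified against your model; `gazeAngleDeg` and `arrowEulerDeg` are hypothetical names.

```javascript
// Sketch under stated assumptions (hypothetical helpers, not a Studio API):
//  - Y-up, right-handed world coordinates (eyepos/eyedir as 3-element arrays)
//  - the arrow model points along +Z when rx = ry = rz = 0
//  - rotation order R = Ry(yaw) * Rx(pitch); verify against your model
const RAD2DEG = 180 / Math.PI;

function sub(a, b) { return [a[0] - b[0], a[1] - b[1], a[2] - b[2]]; }
function dot(a, b) { return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]; }
function normalize(v) {
  const len = Math.hypot(v[0], v[1], v[2]);
  return len > 0 ? [v[0] / len, v[1] / len, v[2] / len] : [0, 0, 0];
}

// Angle in degrees between the gaze direction and the eye -> target direction.
function gazeAngleDeg(eyepos, eyedir, targetPos) {
  const c = dot(normalize(eyedir), normalize(sub(targetPos, eyepos)));
  return Math.acos(Math.min(1, Math.max(-1, c))) * RAD2DEG; // clamp rounding errors
}

// Euler angles (degrees) that rotate +Z onto direction d:
// first pitch about X, then yaw about Y.
function arrowEulerDeg(d) {
  const yaw = Math.atan2(d[0], d[2]) * RAD2DEG;
  const pitch = -Math.atan2(d[1], Math.hypot(d[0], d[2])) * RAD2DEG;
  return { rx: pitch, ry: yaw, rz: 0 };
}

// Example: a target at 45 degrees from a gaze along +Z.
console.log(gazeAngleDeg([0, 0, 0], [0, 0, 1], [1, 0, 1]));       // ≈ 45
console.log(arrowEulerDeg(normalize(sub([1, 0, 1], [0, 0, 0])))); // ry ≈ 45
```

If the arrow turns the wrong way in Studio, the most likely cause is a different rotation order or arrow rest orientation; swapping the sign of one angle or the order of the rotations is the usual thing to try.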

Here is another suggestion: a demo project that works for me. It scans the widgets whose names contain a specific string, finds the one nearest to the device, and displays an arrow item with a text (moving and rotating it) so that it can be used as a hint. I hope this is helpful.
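The scan-and-pick step described above can be sketched as follows. This is not the code of the attached project; it is a minimal sketch assuming the candidate widgets are available as a plain object mapping widget name to numeric `{x, y, z}` coordinates (how you build that map in Studio is up to you). `nearestWidget` is a hypothetical name.

```javascript
// Minimal sketch: among widgets whose name contains a marker string, find the
// one nearest to the device. `widgets` is a hypothetical plain object
// name -> {x, y, z} (numbers) that you would fill from your widget positions.
function nearestWidget(widgets, nameFilter, eyepos) {
  let best = null;
  for (const [name, w] of Object.entries(widgets)) {
    if (!name.includes(nameFilter)) continue; // skip widgets that are not targets
    const d = Math.hypot(w.x - eyepos[0], w.y - eyepos[1], w.z - eyepos[2]);
    if (best === null || d < best.distance) best = { name, distance: d };
  }
  return best; // null if no widget name matches the filter
}

const widgets = {
  'poi-pump':  { x: 0, y: 0, z: 5 },
  'poi-valve': { x: 1, y: 0, z: 1 },
  'label-1':   { x: 0, y: 0, z: 0.1 }, // closer, but filtered out by name
};
console.log(nearestWidget(widgets, 'poi', [0, 0, 0])); // poi-valve, distance ≈ 1.414
```

Calling this from the tracking callback with the current eyepos gives the target for the arrow and the distance to show in its text.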

I attached a sample project to the post.

Hello @RolandRaytchev,

I was able to finish my last project with the previous examples. Thanks a lot for your support!

Unfortunately, this does not work for my current project. This time I need off-screen target navigation. I have attached an example video:

https://www.youtube.com/watch?v=gAQpR1GN0Os

Is this possible? Does it already exist? In games this is quite common.

I am also posting this in the Arrow Navigator thread because I think it is the same topic.

Thanks a lot in advance.

Hi @Lucas,

I reviewed the YouTube video. As to whether this already exists: as far as I know, the answer is no (at least not as a standard PTC implementation).

Regarding the Unity game, there is one very significant difference. In Unity, your first-person controller can move with a specific velocity (velocity vector), or 3D objects can move in space. In augmented reality, your first-person controller is your device, which gives you the eye vectors. The 3D objects are fixed in space, so the only movement is the movement of your device.

In the video I see that they use the bounding box information in the code. By default, we do not get this information in the metadata JSON file, but you can check these posts:

https://community.ptc.com/t5/Vuforia-Studio/Obtaining-Bounding-Boxes-for-3D-Models/m-p/779434#M10634

https://community.ptc.com/t5/Vuforia-Studio/metadata-vuforia-experience-service/m-p/779433#M10633

So we can obtain the bounding box information when we extract the JSON metadata with the extension mentioned there.

In the video you mentioned, the algorithm that selects the moved objects uses the bounding box information and the coordinates of the target objects.

So what we can do in Studio is load the bounding boxes of the different components as an array, ray-trace the device eye vector, and check which bounding box the eye vector strikes. As an additional filter, we can use the distance to the object, so that only objects within some minimum and maximum distance are selected and, e.g., highlighted.
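The ray-trace-plus-distance-filter idea can be sketched with the standard "slab" test for a ray against axis-aligned bounding boxes. This is a sketch, not the extension's format or a Studio API: it assumes each box is available as `{min: [x, y, z], max: [x, y, z]}` in world coordinates, and `rayHitsBox`/`pickBox` are hypothetical names.

```javascript
// Sketch: "slab" test of the gaze ray against axis-aligned bounding boxes,
// keeping only hits between minDist and maxDist. Box format
// {min: [x, y, z], max: [x, y, z]} in world coordinates is an assumption.
function rayHitsBox(origin, dir, box) {
  let tNear = -Infinity;
  let tFar = Infinity;
  for (let i = 0; i < 3; i++) {
    if (Math.abs(dir[i]) < 1e-12) {
      // Ray parallel to this pair of planes: miss if origin lies outside them
      if (origin[i] < box.min[i] || origin[i] > box.max[i]) return null;
    } else {
      let t1 = (box.min[i] - origin[i]) / dir[i];
      let t2 = (box.max[i] - origin[i]) / dir[i];
      if (t1 > t2) [t1, t2] = [t2, t1];
      tNear = Math.max(tNear, t1);
      tFar = Math.min(tFar, t2);
      if (tNear > tFar) return null; // slab intervals do not overlap
    }
  }
  if (tFar < 0) return null; // box lies entirely behind the eye
  return Math.max(tNear, 0); // distance to entry point (0 if the eye is inside)
}

// Returns the nearest box hit by the gaze within [minDist, maxDist], or null.
function pickBox(eyepos, eyedir, boxes, minDist, maxDist) {
  const len = Math.hypot(eyedir[0], eyedir[1], eyedir[2]);
  if (len === 0) return null;
  const dir = [eyedir[0] / len, eyedir[1] / len, eyedir[2] / len]; // unit, so t is a distance
  let best = null;
  for (const [name, box] of Object.entries(boxes)) {
    const t = rayHitsBox(eyepos, dir, box);
    if (t === null || t < minDist || t > maxDist) continue;
    if (best === null || t < best.distance) best = { name, distance: t };
  }
  return best;
}

const boxes = {
  near: { min: [-1, -1, 4], max: [1, 1, 6] },
  far:  { min: [-1, -1, 10], max: [1, 1, 12] },
  side: { min: [5, 5, 1], max: [6, 6, 2] },
};
console.log(pickBox([0, 0, 0], [0, 0, 1], boxes, 0, 100)); // near, distance 4
console.log(pickBox([0, 0, 0], [0, 0, 1], boxes, 5, 100)); // far, distance 10
```

Boxes that are rotated with their component would need to be transformed into world space (or the ray into box space) first; this sketch only handles axis-aligned boxes.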

So in the end, considering the differences between AR (Studio) and Unity (VR), we could implement similar selection algorithms based on the imported bounding boxes and on checking the device eye vector. The Unity code used in the video is not simple, so I expect similar complexity with Studio JavaScript code.

Hello @RolandRaytchev,

thank you very much for your quick reply.

Why don't we do without the bounding boxes and take the xyz coordinates of the widgets? Around these widgets we simply assume a sphere with radius x.

If the eye vectors do NOT hit this sphere, we insert a 2D image (of an arrow) on the UI and this must then rotate around the center of the UI and of course point to the corresponding widget.

If the eye vectors hit the sphere, we remove the 2D image (arrow).

Would this be easier, or is it possible at all?

Hi @Lucas,

I think this could work using a sphere, but consider the bounding box: it consists of 2 points, (x, y, z) min and (x, y, z) max, and the complete part lies inside this box.

In Studio, we have only x, y, z and rx, ry, rz. How well a sphere works depends on the assembly and on the conventions used when all the parts and assemblies were created. If each part/subassembly/component has a coordinate system near the center of its bounding box, a sphere should work fine. But if there are large offsets between the modelItem x, y, z coordinates and the real part geometry, the sphere could lie outside the part geometry.
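When a bounding box is available (see the metadata posts linked earlier in the thread), one way to avoid the offset problem is to center the hit sphere on the box rather than on the modelItem coordinates. A minimal sketch, assuming the box comes as `{min: [x, y, z], max: [x, y, z]}`; `sphereFromBox` is a hypothetical name.

```javascript
// Hypothetical helper: if a bounding box {min: [x, y, z], max: [x, y, z]} is
// available, center the hit sphere on the box instead of on the modelItem x, y, z.
function sphereFromBox(box) {
  const center = [0, 1, 2].map(i => (box.min[i] + box.max[i]) / 2);
  // Half the box diagonal: the smallest sphere around the center that encloses the box
  const radius = Math.hypot(
    box.max[0] - box.min[0],
    box.max[1] - box.min[1],
    box.max[2] - box.min[2]
  ) / 2;
  return { center, radius };
}

console.log(sphereFromBox({ min: [0, 0, 0], max: [2, 2, 1] })); // center [1, 1, 0.5], radius 1.5
```

The enclosing sphere is conservative: for long, thin parts it also reports hits near the box corners where there is no geometry, so a smaller radius may feel better in practice.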

Regarding the calculation: inside the tracking event

```javascript
tml3dRenderer.setupTrackingEventsCommand(function(target, eyepos, eyedir, eyeup) {
  // do the calculation here with eyepos and eyedir
});
```

we need to consider the following vector relations:

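$$\cos\alpha = \frac{\text{eyedir}}{\lVert\text{eyedir}\rVert} \cdot \frac{\text{partWorldXYZ} - \text{eyepos}}{\lVert\text{partWorldXYZ} - \text{eyepos}\rVert}$$

$$\tan\alpha = \frac{\sin\alpha}{\cos\alpha} = \frac{\sqrt{1 - \cos^2\alpha}}{\cos\alpha}$$

$$\text{radius\_hit} = \lVert\text{partWorldXYZ} - \text{eyepos}\rVert \cdot \tan\alpha$$

If cos(alpha) > 0 (the part is in front of the device) and radius_hit < radius_part, this component was hit;

otherwise the component was not hit.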
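The hit test above can be sketched directly in JavaScript. This follows the tan-based relation as written, with the extra cos(alpha) > 0 guard (without it, a part behind the device gives a negative tan and would always count as hit). `isPartHit` is a hypothetical name; eyepos and eyedir are assumed to be the arrays from the tracking callback.

```javascript
// Sketch of the hit test described above (hypothetical helper name).
// Treats a part as a sphere (partWorldXYZ, radiusPart) and compares
// radius_hit = |partWorldXYZ - eyepos| * tan(alpha) with radiusPart.
function isPartHit(eyepos, eyedir, partWorldXYZ, radiusPart) {
  const v = [
    partWorldXYZ[0] - eyepos[0],
    partWorldXYZ[1] - eyepos[1],
    partWorldXYZ[2] - eyepos[2],
  ];
  const dist = Math.hypot(v[0], v[1], v[2]);
  const dirLen = Math.hypot(eyedir[0], eyedir[1], eyedir[2]);
  if (dist === 0) return true;    // eye sits at the part's reference point
  if (dirLen === 0) return false; // no valid gaze direction
  const cosA = (eyedir[0] * v[0] + eyedir[1] * v[1] + eyedir[2] * v[2]) / (dirLen * dist);
  if (cosA <= 0) return false;    // part is beside or behind the device
  const c = Math.min(1, cosA);    // guard against rounding above 1
  const tanA = Math.sqrt(1 - c * c) / c;
  const radiusHit = dist * tanA;
  return radiusHit < radiusPart;
}

console.log(isPartHit([0, 0, 0], [0, 0, -1], [0.1, 0, -5], 0.5)); // true
console.log(isPartHit([0, 0, 0], [0, 0, -1], [3, 0, -5], 0.5));   // false
console.log(isPartHit([0, 0, 0], [0, 0, -1], [0, 0, 5], 0.5));    // false (behind)
```

For small angles tan(alpha) and sin(alpha) are nearly equal; replacing tan with sin gives the exact perpendicular distance from the sphere center to the gaze ray, which is slightly more permissive for parts near the edge of the view.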

...

Later, when the component is not hit but you want to move your device toward it, you need to find the direction between the eye vector and the middle of the object/part. To do so, project two points, the part's middle point (partXYZ) and the eye position, onto the display plane (the plane where you want to display the arrow, which should be normal to the eyedir vector). The arrow should then go from the projection of the eye position to the projection of partXYZ.
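The projection step can be sketched as follows. This is a sketch under assumptions, not a Studio API: right-handed world coordinates, world-space eyepos/eyedir/eyeup arrays as delivered by the tracking callback, and screen "right" taken as cross(eyedir, eyeup). `offscreenArrow` is a hypothetical name, and planeDist is a free parameter for where the display plane sits in front of the eye.

```javascript
// Sketch (hypothetical helper, not a Studio API): project the eye position and a
// target point onto a display plane normal to eyedir, and return the arrow
// direction on that plane in screen terms (x = right, y = up).
// Assumes right-handed world coordinates and world-space eyepos/eyedir/eyeup arrays.
function add(a, b) { return [a[0] + b[0], a[1] + b[1], a[2] + b[2]]; }
function sub(a, b) { return [a[0] - b[0], a[1] - b[1], a[2] - b[2]]; }
function scale(v, s) { return [v[0] * s, v[1] * s, v[2] * s]; }
function dot(a, b) { return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]; }
function cross(a, b) {
  return [a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0]];
}
function normalize(v) {
  const len = Math.hypot(v[0], v[1], v[2]);
  return len > 0 ? scale(v, 1 / len) : [0, 0, 0];
}

function offscreenArrow(eyepos, eyedir, eyeup, target, planeDist) {
  const f = normalize(eyedir);
  const right = normalize(cross(f, eyeup));        // screen "right"
  const up = cross(right, f);                      // up re-orthogonalized against f
  const start = add(eyepos, scale(f, planeDist));  // eye position projected onto the plane
  const rel = sub(target, start);
  const onPlane = sub(rel, scale(f, dot(rel, f))); // drop the component along the gaze
  const x = dot(onPlane, right);
  const y = dot(onPlane, up);
  if (Math.hypot(x, y) < 1e-9) return null;        // target straight ahead or straight behind
  return {
    start,                                         // arrow tail (3D)
    end: add(start, onPlane),                      // target projected onto the plane (3D)
    angleDeg: Math.atan2(y, x) * 180 / Math.PI,    // counterclockwise from screen right
  };
}

// Gaze along -Z with +Y up; the target is above the line of sight.
const arrow = offscreenArrow([0, 0, 0], [0, 0, -1], [0, 1, 0], [0, 3, -10], 1);
console.log(arrow.angleDeg); // ≈ 90 (arrow points straight up on the screen)
```

The screen angle does not depend on planeDist; planeDist only decides where `start` and `end` sit in 3D if you place a 3D arrow. For a 2D arrow image rotated with CSS `transform: rotate()`, which treats positive angles as clockwise, you would likely use the negated angle; if the arrow appears mirrored, the handedness assumption for "right" is the first thing to check.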

Similar calculations are used in the technique suggested in the post https://community.ptc.com/t5/Vuforia-Studio-and-Chalk-Tech/How-can-we-make-a-3D-Widget-on-HoloLens-visible-in-front-of-me/ta-p/658541