global fit multiple data sets

hi all,

title (hopefully) says it all. I have several data sets. Each one will be fit to the same fit function, and the sets will share some, but not all, of the fit variables.

I have a clumsy attempt at this in the attached sheet (Prime 4.0, along with Excel data). I basically use "minimize" to minimize a standard error expression that has several fit expressions in it (one for each data set). The problem is that the resulting fit values are nearly identical to the initial guesses! I've tried reducing TOL, but that doesn't help.

cheers,

blake

I guess you have a typo in the definition of sse: you wrote A.4 instead of A.1.

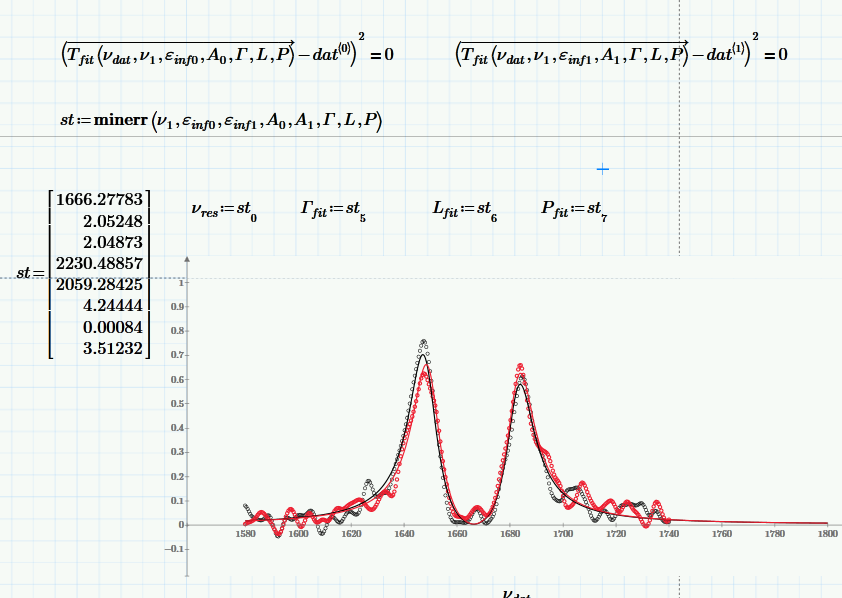

Here is an approach without your sse function, using minerr and letting Prime do the sse minimization that way. With this approach it's better to set the vectors themselves to 0 rather than the sum of their elements.

Are the results more suitable?
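For readers without Prime, the idea of setting the whole residual vector to zero can be sketched in Python. This is only an illustration under stated assumptions: the worksheet's fit function is not shown in the thread, so `model` (an exponential decay with a shared `rate` and one amplitude per data set) is hypothetical, the data are synthetic, and the small damped least-squares loop is hand-written, not Prime's minerr algorithm.

```python
import math

def model(x, amp, rate):
    # Hypothetical fit function; the thread's real one is in the attachment.
    return amp * math.exp(-rate * x)

def residuals(params, datasets):
    """Stack the residuals of all data sets into one long vector.

    params = [rate, amp_1, ..., amp_n]: rate is shared, amp_k is per set.
    """
    rate = params[0]
    res = []
    for k, (xs, ys) in enumerate(datasets):
        amp = params[1 + k]
        res.extend(model(x, amp, rate) - y for x, y in zip(xs, ys))
    return res

def solve_linear(a, b):
    """Solve a*x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        s = m[i][n] - sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = s / m[i][i]
    return x

def fit(params, datasets, iterations=100, damping=1e-3):
    """Damped Gauss-Newton (Levenberg-style) loop on the stacked residuals."""
    params = list(params)
    res = residuals(params, datasets)
    cost = sum(r * r for r in res)
    n = len(params)
    for _ in range(iterations):
        # Forward-difference Jacobian, stored column by column.
        cols = []
        for j in range(n):
            h = 1e-7 * max(1.0, abs(params[j]))
            shifted = params[:]
            shifted[j] += h
            rs = residuals(shifted, datasets)
            cols.append([(a - b) / h for a, b in zip(rs, res)])
        jtj = [[sum(ci * cj for ci, cj in zip(cols[i], cols[j]))
                for j in range(n)] for i in range(n)]
        jtr = [sum(c * r for c, r in zip(cols[i], res)) for i in range(n)]
        for i in range(n):
            jtj[i][i] += damping
        step = solve_linear(jtj, [-g for g in jtr])
        trial = [p + s for p, s in zip(params, step)]
        try:
            trial_res = residuals(trial, datasets)
            trial_cost = sum(r * r for r in trial_res)
        except OverflowError:
            trial_cost = math.inf  # treat a wild step as rejected
        if trial_cost < cost:
            params, res, cost = trial, trial_res, trial_cost
            damping = max(damping / 10.0, 1e-12)
        else:
            damping *= 10.0
    return params, cost

# Synthetic, noise-free example: two sets share rate = 0.5.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
datasets = [
    (xs, [model(x, 2.0, 0.5) for x in xs]),
    (xs, [model(x, 5.0, 0.5) for x in xs]),
]
best, cost = fit([0.2, 1.0, 1.0], datasets)
print(best, cost)
```

The point of the sketch is the `residuals` function: every data point contributes its own entry, so the solver sees each deviation separately instead of one pre-summed number.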

thanks. the "minerr" approach is better. it gives results that are not locked to original guesses (and is much faster!). however, the results are quite sensitive to initial guesses. i'll dig into a different data set.

Just noticed that you had the very same typo (4 instead of 1) in the variable epsilon.inf, too.

also a bit confused. don't i still need the summation symbol before the ()^2 expressions?

@bsimpkins wrote:

also a bit confused. don't i still need the summation symbol before the ()^2 expressions?

No, you don't even need the square. The expression results in a vector and we demand it to be the zero vector by writing =0.

Prime's minerr will try to minimize the error in ALL conditions. This means that every condition in a solve block with minerr is treated as a soft constraint. If you write 3<L<15, this is a soft constraint as well. Failing to find a suitable value for L would just add to the overall error Prime tries to minimize.

To make it a harder constraint you may use something like <negation>(3<L<15)*10^3 = 0.

3<L<15 can only take the values 1 (true) or 0 (false). We want it to be true, so we want the negation to be false -> 0. Multiplying the result by a large number like 1000 means that if the constraint is not fulfilled we get 1000, and this is a much bigger error (it should be 0) than if it's just 1. So Prime will try harder to satisfy this constraint.
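The arithmetic of that weighted condition can be written out in a few lines of Python. This is just a sketch of the idea, not Prime syntax; the function name `range_penalty` and its parameters are made up for the illustration.

```python
def range_penalty(value, low, high, weight=1000.0):
    """Return 0 if low < value < high holds, otherwise the weight.

    Mirrors the expression not(low < value < high) * weight, which the
    solver is then asked to drive to 0.
    """
    inside = low < value < high      # True (1) or False (0)
    return weight * (0.0 if inside else 1.0)

# L inside the range contributes no error, L outside contributes 1000.
print(range_penalty(5.0, 3.0, 15.0))
print(range_penalty(20.0, 3.0, 15.0))
```

With `weight=1.0` a violated range costs the same as a residual of 1, which is easily outweighed by the fit errors; the larger weight is what makes the constraint "harder".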

I guess there is not much you can do about the sensitivity to the guesses. Maybe you can manage to calculate a suitable guess individually for every set of data, depending on some characteristics like the position of peak values, etc.
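As a rough illustration of deriving guesses from the data itself, here is a Python sketch that reads peak position, peak height and width at half maximum from one data set. It assumes a single positive peak on a baseline near zero, which may not match the real data; `guess_from_peak` and the sample Lorentzian-shaped data are hypothetical.

```python
def guess_from_peak(xs, ys):
    """Estimate starting values from the largest peak in one data set."""
    i_peak = max(range(len(ys)), key=lambda i: ys[i])
    height = ys[i_peak]
    half = height / 2.0
    # Walk outwards from the peak until the curve drops to half height.
    left = i_peak
    while left > 0 and ys[left] > half:
        left -= 1
    right = i_peak
    while right < len(ys) - 1 and ys[right] > half:
        right += 1
    return {"center": xs[i_peak], "height": height,
            "width": xs[right] - xs[left]}

# Made-up sample: a peak of height 3 centred at x = 10.
sample_x = [i * 0.5 for i in range(41)]
sample_y = [3.0 / (1.0 + ((x - 10.0) / 2.0) ** 2) for x in sample_x]
guess = guess_from_peak(sample_x, sample_y)
print(guess)
```

Running this once per data set gives each set its own starting values for the non-shared variables, while a shared variable could start from, say, the average of the per-set estimates.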

good eyes! thanks.

but i still have the same problem. the solutions end up being nearly identical to starting guesses.