Azure IoT Hub Connector issue with AzureIotSendMessage service!

In our projects we often use the AzureIotSendMessage service to send commands (C2D messages) to the machines.

Since 5 January 2019 we have been having the problem described below.

Restarting the connector resolves it briefly, but the problem returns after a short time.

I have both an 8.1 production infrastructure and an 8.3 development infrastructure.

The problem is the following:

When I call the AzureIotSendMessage service, it does not work and returns the error below:

Execution error in service script [THGF_LALLAVAT1 IoTRestService] : Wrapped java.util.concurrent.TimeoutException: Timed out APIRequestMessage [requestId: 13762, endpointId: -1, sessionId: -1, method: POST, entityName: AzureIot-cxserver-3d164c0f-f22a-4a8c-80e4-48b576cd6c53, characteristic: Services, target: AzureIotSendMessage] Cause: Timed out APIRequestMessage [requestId: 13762, endpointId: -1, sessionId: -1, method: POST, entityName: AzureIot-cxserver-3d164c0f-f22a-4a8c-80e4-48b576cd6c53, characteristic: Services, target: AzureIotSendMessage]

My configuration:

PRODUCTION:

- Front-end virtual machine (Windows Server 2016, OS build 14393.2670): Azure virtual machine B4m

- ThingWorx 8.1

- Java JDK 1.8.0_92

- Connector virtual machine (Linux Ubuntu 18.04)

- Java JDK 1.8.0_191

- Azure IoT Connector 2.0.0

DEV:

- Front-end virtual machine (Windows Server 2012 R2): Azure virtual machine B4m

- ThingWorx 8.3.0

- Java JDK 1.8.0_92

- Connector virtual machine (Linux Ubuntu 18.04)

- Java JDK 1.8.0_191

- Azure IoT Connector 2.0.0

This configuration worked until 5 January.

I think the problem is in the Azure IoT connector 2.0.0.

Probably something was updated on the Azure side and the connector is no longer compatible.

Can you help me, please?

Have you seen the same issue? Can you try the service?

Thanks

PS: I attached the application log.

Labels: Cloud, Connectivity, Troubleshooting

Accepted Solutions

Problem description:

When I started the ThingWorx connector (which had worked well for over a year), it kept opening new threads, and the count grew into the thousands.

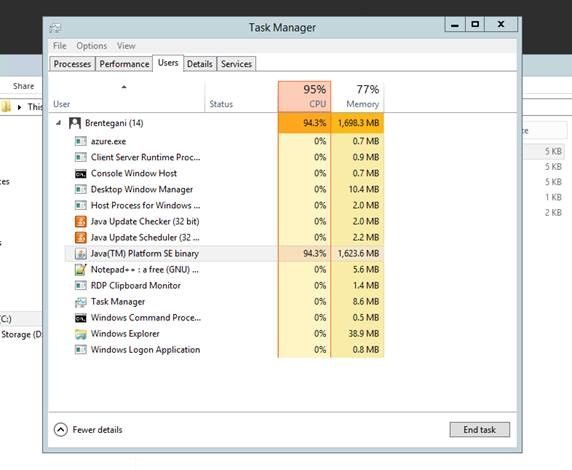

This saturated the RAM and CPU, and when I tried to send C2D messages or read D2C messages, the ThingWorx log showed the following:

Wrapped java.util.concurrent.TimeoutException: Timed out APIRequestMessage [requestId: 179343, endpointId: -1, sessionId: -1, method: POST, entityName: AzureIot-cxserver-f7f78e8f-7ed1-4ee5-be25-3e13b7498724, characteristic: Services, target: AzureIotSendMessage] Cause: Timed out APIRequestMessage [requestId: 179343, endpointId: -1, sessionId: -1, method: POST, entityName: AzureIot-cxserver-f7f78e8f-7ed1-4ee5-be25-3e13b7498724, characteristic: Services, target: AzureIotSendMessage]

The connector became unusable after a reboot.

As a temporary workaround, I set up a crontab job on Ubuntu that restarts the connector service every 20 minutes to avoid saturating resources.
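A restart triggered by the thread count, rather than by a fixed timer, could be sketched as follows. This is only an illustration, assuming a Linux host where the `Threads:` field of `/proc/<pid>/status` is available; the limit of 1000 and the function names are hypothetical, chosen between the normal range (50-65) and the failure range (over 5000) reported in this thread.

```python
import re

# Hypothetical limit: normal operation was 50-65 threads and the incident
# exceeded 5000, so this value is an assumption somewhere in between.
THREAD_LIMIT = 1000


def parse_thread_count(status_text: str) -> int:
    """Return the 'Threads:' value from the text of /proc/<pid>/status."""
    match = re.search(r"^Threads:\s+(\d+)\s*$", status_text, re.MULTILINE)
    if match is None:
        raise ValueError("no Threads: field found")
    return int(match.group(1))


def needs_restart(status_text: str, limit: int = THREAD_LIMIT) -> bool:
    """True when the process reports more threads than the chosen limit."""
    return parse_thread_count(status_text) > limit


# Sample text in the /proc status format; on a real host it would be read
# from /proc/<connector pid>/status.
sample = "Name:\tjava\nState:\tS (sleeping)\nThreads:\t5123\n"
print(needs_restart(sample))
```

A scheduled job could call `needs_restart` and restart the connector only when it returns true, which avoids interrupting a healthy connector every 20 minutes.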

The following warning was repeated in the connector log:

08:30:30.093 [Thread-42] DEBUG servicebus.trace - entityName[iothubproduction/ConsumerGroups/westeurope/Partitions/0], condition[Error{condition=null, description='null', info=null}]

08:30:30.093 [pool-5-thread-3] WARN eventprocessorhost.trace - host AzureIot-6a15a0c4-1040-4898-9312-cd9dff0977c1: partition 0: Failure creating client or receiver, retrying

08:30:30.093 [pool-5-thread-3] WARN eventprocessorhost.trace - host AzureIot-6a15a0c4-1040-4898-9312-cd9dff0977c1: partition 0: Caught java.util.concurrent.ExecutionException: com.microsoft.azure.servicebus.ServiceBusException: The supplied offset '137459006072' is invalid. The last offset in the system is '4604792' TrackingId:54e3a5a5-f7ec-4dcc-b3fc-84554378d7d2_B10, SystemTracker:iothub-ns-iothubprod-1202394-e704e8b244:eventhub:iothubproduction~8191, Timestamp:2019-01-21T08:30:29 Reference:d8a82a0e-7b13-45ff-8a00-15977a8e67bd, TrackingId:74d4a55a-7b08-4051-b45f-ee5ff7b90afd_B10, SystemTracker:iothub-ns-iothubprod-1202394-e704e8b244:eventhub:iothubproduction~8191|westeurope, Timestamp:2019-01-21T08:30:29 TrackingId:f0f7fb9ed7974ca4a1201437327ad188_G7, SystemTracker:gateway5, Timestamp:2019-01-21T08:30:29, errorContext[NS: iothub-ns-iothubprod-1202394-e704e8b244.servicebus.windows.net, PATH: iothubproduction/ConsumerGroups/westeurope/Partitions/0, REFERENCE_ID: 26e7ba_d188_G7_1548059429671]

08:30:30.093 [pool-5-thread-3] WARN eventprocessorhost.trace - host AzureIot-6a15a0c4-1040-4898-9312-cd9dff0977c1: partition 0: java.util.concurrent.CompletableFuture.reportGet(CompletableFuture.java:357)

08:30:30.093 [pool-5-thread-3] WARN eventprocessorhost.trace - host AzureIot-6a15a0c4-1040-4898-9312-cd9dff0977c1: partition 0: java.util.concurrent.CompletableFuture.get(CompletableFuture.java:1895)

08:30:30.093 [pool-5-thread-3] WARN eventprocessorhost.trace - host AzureIot-6a15a0c4-1040-4898-9312-cd9dff0977c1: partition 0: com.microsoft.azure.eventprocessorhost.EventHubPartitionPump.openClients(EventHubPartitionPump.java:126)

08:30:30.093 [pool-5-thread-3] WARN eventprocessorhost.trace - host AzureIot-6a15a0c4-1040-4898-9312-cd9dff0977c1: partition 0: com.microsoft.azure.eventprocessorhost.EventHubPartitionPump.specializedStartPump(EventHubPartitionPump.java:46)

08:30:30.093 [pool-5-thread-3] WARN eventprocessorhost.trace - host AzureIot-6a15a0c4-1040-4898-9312-cd9dff0977c1: partition 0: com.microsoft.azure.eventprocessorhost.PartitionPump.startPump(PartitionPump.java:70)

08:30:30.093 [pool-5-thread-3] WARN eventprocessorhost.trace - host AzureIot-6a15a0c4-1040-4898-9312-cd9dff0977c1: partition 0: com.microsoft.azure.eventprocessorhost.Pump.lambda$createNewPump$0(Pump.java:61)

08:30:30.093 [pool-5-thread-3] WARN eventprocessorhost.trace - host AzureIot-6a15a0c4-1040-4898-9312-cd9dff0977c1: partition 0: java.util.concurrent.FutureTask.run(FutureTask.java:266)
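The important detail in this log is the offset message: the offset the connector supplies (137459006072) is far beyond the last offset the hub reports (4604792), which suggests a stored checkpoint that no longer matches the stream. A minimal sketch for spotting this condition in a log line, assuming the message wording shown above; the function name is hypothetical:

```python
import re

# Matches the wording of the ServiceBusException seen in the connector log.
OFFSET_ERROR = re.compile(
    r"The supplied offset '(\d+)' is invalid\. "
    r"The last offset in the system is '(\d+)'"
)


def stale_checkpoint(log_line: str):
    """Return (supplied, last) offsets if the line has the error, else None."""
    match = OFFSET_ERROR.search(log_line)
    if match is None:
        return None
    return int(match.group(1)), int(match.group(2))


line = (
    "com.microsoft.azure.servicebus.ServiceBusException: "
    "The supplied offset '137459006072' is invalid. "
    "The last offset in the system is '4604792'"
)
supplied, last = stale_checkpoint(line)
print(supplied > last)  # the stored offset is ahead of the stream
```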

Problem Resolution:

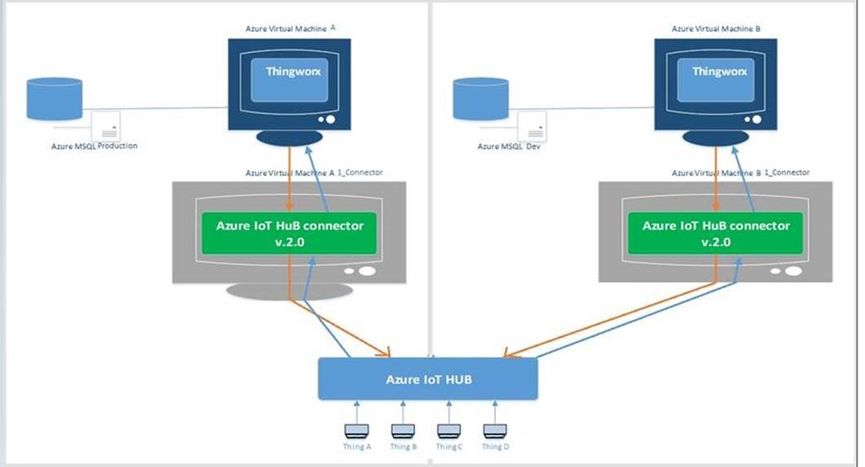

The architecture used until now is as follows:

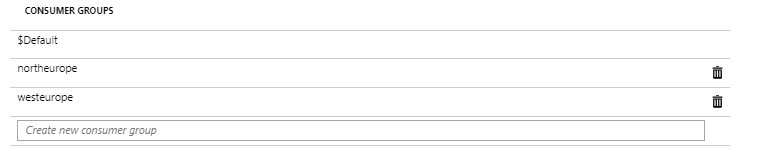

On the IoT Hub there are two different consumer groups for each connector:

PTC Support (thanks to 'Mainente, Stephane'), after investigating with the connector developer, advised me to do the following:

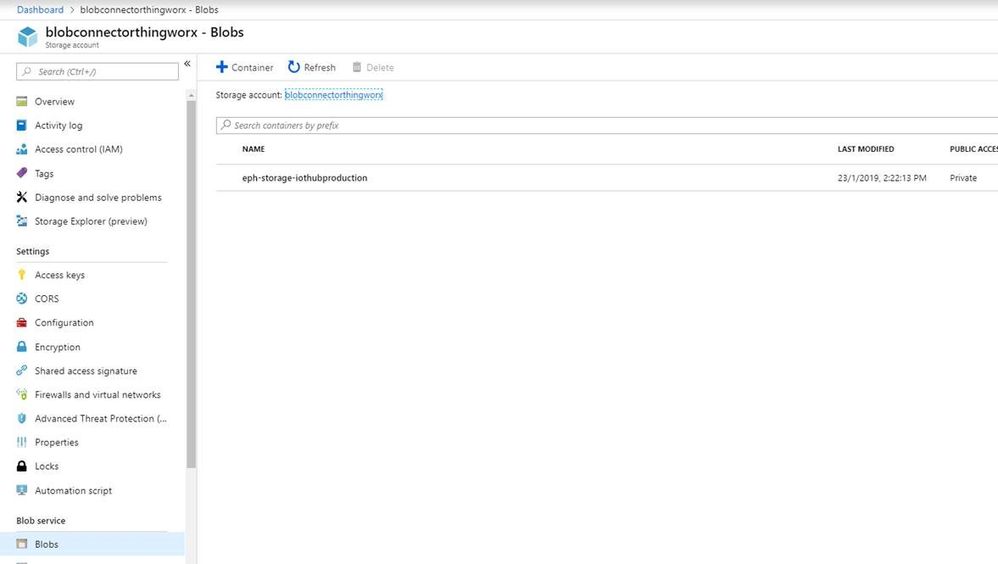

The connector uses an Azure storage account.

1) At Stephane's suggestion, I deleted all the blobs created by the connector:

2) He sent me some lines to add to the connector configuration:

    blob-storage {
      accounts {
        default {
          // <blob-store> > Settings > Access Keys > Storage account name
          account-name = "blobconnec******"
          // <blob-store> > Settings > Access Keys > key1 or key2
          account-key = "KUvEgXZKehDzFA6Q1EFMyVqdVokeR6DMdH8IfMlzcFQdZoxt/9pwvGzQ******"
        }
        event-processor-host {
          container-name = "eph-storage-"${cx-server.protocol.eventHubName}
          account = default
        }
      }
    }

3) I restarted the connector.
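The `container-name` setting in step 2 concatenates a fixed prefix with the event hub name. The sketch below mimics that concatenation and checks the result against the Azure Blob container naming rules (3 to 63 characters, lowercase letters, digits and single hyphens, starting and ending with a letter or digit). The function names are hypothetical, and the hub name `iothubproduction` is only assumed from the entity path in the log above.

```python
import re

# Azure Blob container naming rules: 3-63 characters, lowercase letters,
# digits and hyphens; each hyphen must sit between letters or digits.
CONTAINER_NAME = re.compile(r"(?=.{3,63}\Z)[a-z0-9]+(?:-[a-z0-9]+)*")


def eph_container_name(event_hub_name: str) -> str:
    """Mimic the concatenation "eph-storage-"${...eventHubName} from step 2."""
    return "eph-storage-" + event_hub_name


def is_valid_container_name(name: str) -> bool:
    """Check a candidate name against the container naming rules."""
    return CONTAINER_NAME.fullmatch(name) is not None


# Assumed hub name, taken from the entity path in the connector log.
name = eph_container_name("iothubproduction")
print(name, is_valid_container_name(name))
```

If the event hub name contained uppercase letters or other characters not allowed in container names, the derived name would fail this check, which may be worth verifying before restarting the connector.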

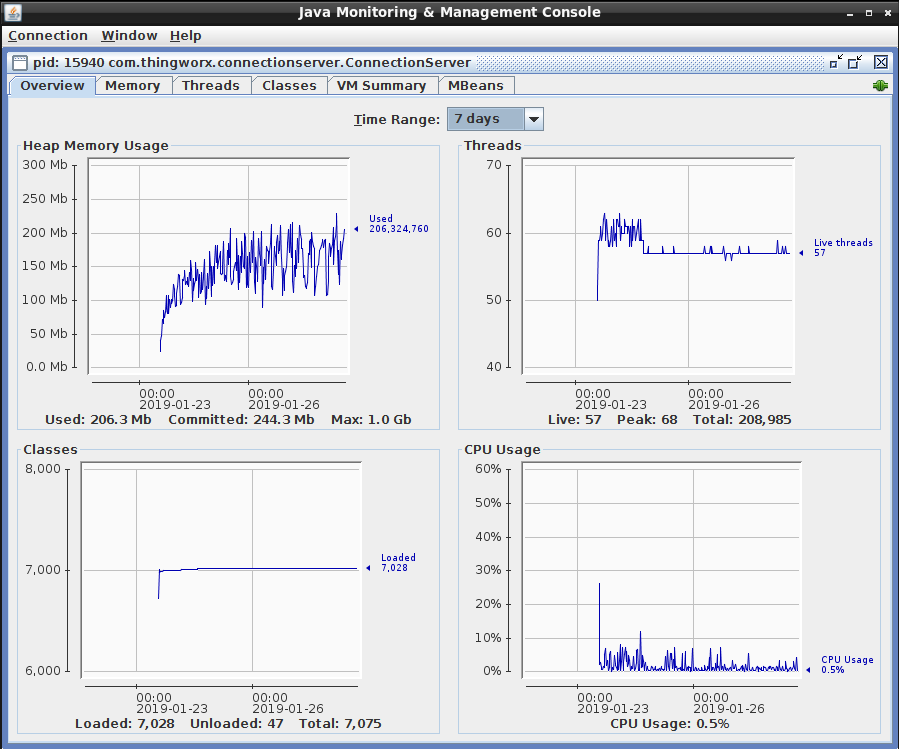

The thread count is now back to a normal value (50-65); during the problem it grew to over 5000.

Below is the proof that the ThingWorx connector is working normally.

Bye

Hi @Gabriele1986.

We tested under ThingWorx 8.3.3 using the 2.0.0 version of the connector but were unable to recreate the issue. We don't believe this is the result of an upgrade/incompatibility since it works following a restart.

How many messages are being sent? On the Azure side, do the logs report anything about the attempts to deliver messages?

Regards.

--Sharon

Hi Sharon,

To recreate the problem, try this:

Create a Mashup that calls the AzureIotSendMessage service with a 5-second auto-refresh.

For me, after roughly 30-40 messages, it returns the timeout error.

On the Azure side I cannot see anything, because the messages do not reach Azure.

I attached the entity for testing. In the Thing's service [LocalRestApiCall], make sure to replace the Thing name with the name of your Thing that implements the Azure IoT connector.

Then change or create (with the name iotaas) the Thing with the Azure template.

Thank you

I want to emphasize that last night I ran a test with a new IoT hub: iohubNorthEurope

As before, I called the AzureIotSendMessage service in ThingWorx (through the connector), which sends a C2D message. After about 40-50 messages it returned the error "Timed out APIRequestMessage", and the device stopped receiving messages from this service.

Then I tested with another IoT hub, iothubtestWestEurope, and there was no problem.

This is interesting: same environment, different IoT hub region. West Europe works; North Europe does not.

I also tested the IoT hub directly, without ThingWorx, using a program written in Node.js (https://docs.microsoft.com/en-us/azure/iot-hub/iot-hub-node-node-c2d )

With this program I have no problems.

It seems that something has changed in the North Europe IoT hub, so that the ThingWorx Azure connector no longer works with it.

I also tested on my local infrastructure with the same result: after 40-60 messages it returns the error below:

Error executing service LocalRestApiCall. Message :: Timed out APIRequestMessage [requestId: 6266, endpointId: -1, sessionId: -1, method: POST, entityName: AzureIot-cxserver-66dec122-0451-4d29-a37f-b2aba841023e, characteristic: Services, target: AzureIotSendMessage] -

Hello @Gabriele1986,

At the thresholds you are reporting for when you notice this issue, it could be possible that you are running into a queue limit for your devices. Note that there is a per-device C2D queue max of 50 messages within Azure. Errors would result for any messages sent to the same device beyond this threshold. After the messages from the queue have been processed, you should be able to begin sending additional messages.

For your IoT devices in Azure, you should be able to review their C2D message counts to see where you are sitting currently. Also, a good test would be to see if the queue clears on its own with more time, as opposed to restarting the connector. Can you review/test these items and let us know what you find?
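The queue behaviour described above can be illustrated with a toy model. This is not the Azure SDK, only a sketch assuming the 50-message per-device limit quoted in this post; the class and method names are hypothetical.

```python
from collections import deque

MAX_C2D_QUEUE = 50  # per-device limit quoted in this post


class DeviceQueue:
    """Toy model of one device's cloud-to-device queue (not the Azure SDK)."""

    def __init__(self, limit: int = MAX_C2D_QUEUE):
        self.limit = limit
        self.pending = deque()

    def send(self, message: str) -> bool:
        """Enqueue a message; return False when the queue is already full."""
        if len(self.pending) >= self.limit:
            return False
        self.pending.append(message)
        return True

    def complete(self) -> str:
        """The device finishes the oldest message, which frees one slot."""
        return self.pending.popleft()


queue = DeviceQueue()
# Send 55 commands while the device processes none of them.
results = [queue.send(f"cmd-{i}") for i in range(55)]
accepted = results.count(True)

# Once the device completes a message, sending works again.
queue.complete()
sent_after_complete = queue.send("cmd-retry")
print(accepted, sent_after_complete)
```

In this model the failures start only once the device stops draining its queue, so checking the pending C2D count on the device is a quick way to tell this case apart from a connector fault.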

Regards,

Stefan

Hi @Gabriele1986.

If one of the previous responses answered your question, please mark the appropriate one as the Accepted Solution for the benefit of others with the same question.

Regards.

--Sharon

Hi all,

I used another account to mark the correct resolution.

bye