Strict enforcement of boundary conditions

Dear PTC Community

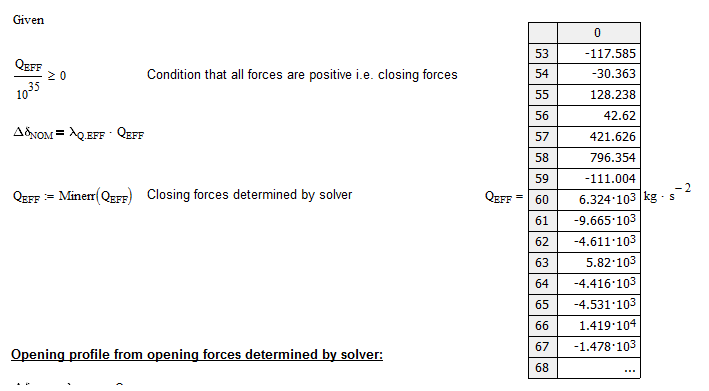

From a set of x values and their corresponding y values, I'm trying to solve a system of equations, and for this I'm of course using a set of boundary conditions. One of these is that all solution values must be equal to or above zero (i.e. non-negative), but even though I use the minerr function to solve the system, I still get negative values. They are quite small, so the results are usable, but it seems strange to me that the negative values can't be avoided. Is there a way to strictly enforce a boundary condition?

Labels: Statistics_Analysis

Constraints in minerr are soft constraints, not hard constraints. The errors in the constraints are minimized as part of the sum of the squares of all the errors, so they are not forced to zero. Another way to look at it is that everything is a constraint, either an equality constraint such as f(x[i]) = y[i] or an inequality constraint such as p > 0, and they are all treated equally. If you want a constraint to behave like a hard constraint, weight it very heavily: instead of p > 0, use p*10^15 > 0. As another example, instead of p > 2, use (p-2)*10^15 > 0.
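To make the "soft constraint" idea concrete, here is a toy Python sketch. It is not Mathcad and not minerr's actual internals. It assumes a hypothetical one-parameter model f(x) = p*x, made-up data that pulls p negative, and assumes that a violated inequality w*p >= 0 adds its squared violation to the same sum of squares as the equalities. Under those assumptions the minimizer has a closed form, which shows why heavy weighting shrinks the violation without ever making it exactly zero.

```python
def objective(p, xs, ys, w):
    """Sum of squared errors for the hypothetical model f(x) = p*x.

    Assumption: each equality f(x_i) = y_i contributes (p*x_i - y_i)**2 and
    the inequality w*p >= 0 contributes min(0, w*p)**2. Nothing is enforced
    exactly; all terms are traded off against each other.
    """
    equality_part = sum((p * x - y) ** 2 for x, y in zip(xs, ys))
    inequality_part = min(0.0, w * p) ** 2
    return equality_part + inequality_part


def best_p(xs, ys, w):
    """Closed-form minimizer of objective() for this toy problem.

    If the unconstrained least-squares slope is >= 0, the penalty is inactive.
    Otherwise the minimizer lies at p < 0, where setting the derivative of
    sum((p*x - y)**2) + w**2 * p**2 to zero gives p = sum(x*y) / (sum(x*x) + w**2).
    """
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    if sxy >= 0:
        return sxy / sxx
    return sxy / (sxx + w * w)


xs = [1.0, 2.0, 3.0]
ys = [-0.1, -0.2, -0.3]  # hypothetical data that "wants" a negative p
for w in (1.0, 1e3, 1e15):
    print(f"w = {w:g}: best p = {best_p(xs, ys, w):.3g}")
```

With w = 1 the best p is about -0.093; with w = 10^15 it is on the order of -10^-30, still negative but far inside any reasonable solver tolerance.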

I've played around with it a lot, and no matter how heavily I weight it, I still can't avoid negative results.

Without the worksheet I can't really say what is going on. How small are the negative values?

Here it is. It's quite a large worksheet.

There's something wrong with the two data files: they have the same name, and the names don't match what's in the worksheet, so the worksheet can't calculate. Regardless, you have Q.EFF divided by 10^35. That means that if Q.EFF is a small negative number, then Q.EFF/10^35 is a really small negative number that is well within the solver's tolerance for not being negative. Instead of dividing by 10^35, multiply by 10^15.
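A quick numeric sketch of why dividing by 10^35 defeats the constraint. It assumes, hypothetically, that a violated constraint scale*q >= 0 adds (scale*q)^2 to the total error, and it uses a made-up force q = -0.001 and a made-up tolerance; neither number comes from the worksheet.

```python
def penalty(q, scale):
    """Squared violation of the hypothetical constraint scale*q >= 0.

    Zero when the constraint is satisfied; otherwise (scale*q)**2.
    """
    return min(0.0, scale * q) ** 2


TOL = 1e-3  # hypothetical threshold, for illustration only
q = -1e-3   # a "small" negative force, arbitrary units

for scale in (1e-35, 1.0, 1e15):
    p = penalty(q, scale)
    print(f"scale={scale:g}  penalty={p:.3g}  negligible={p < TOL}")
```

Dividing by 10^35 turns the violation into a penalty of about 10^-76, which no solver will notice; multiplying by 10^15 makes it about 10^24, which dominates every other error term.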

I'm not sure why that is. Here are the files again.

About the constraint weight: I'm fully aware that it's the opposite of what you proposed, but for some reason it works and multiplying doesn't.

The files are still both called "attachFile.zip", and both zip files contain a file simply called "attachFile" (no extension).

I just guessed which file is which, and renamed them. Here's what I get when dividing by a large number:

Lots of negative forces, as expected. Here's what I get when multiplying by a large number:

All forces are positive, as expected. Some are very small (to within the tolerance of the solver, effectively zero), but none are negative.

The problem is that the solution is wrong. In the plots beneath the solve block, the subscript "EFF" denotes the solution and "NOM" the nominal values. The solution obtained by multiplying by 10^35 is very far from the nominal values.

Solved!

It turns out the error was caused by a bad guess. I changed the guess to the nominal values and got a close-to-perfect solution.

Much obliged!

I was a bit too quick to consider the problem solved. It's true that I get the right solution if my initial guess is the solution itself, but it turns out that the solver returns whatever guess I give it as the solution.

With an initial guess of zero (for the matrix Q), the returned solution is also zero, which is of course wrong.

The solver doesn't seem to be very interested in solving the equation at all.

The way to check whether the solution is good is the plots below the solve block, where Q_nom and sigma_nom are the correct values.

Both of these should contain only positive values, which is why it's important to enforce that Q is positive.

attachFile.xls is not an Excel spreadsheet. Please name the data files correctly, and edit the Mathcad worksheet so that the file input components point to the correct files.

Somehow the uploader renames the files and unfortunately I don't know how to avoid it.

The small one should be renamed: "S=1, d=1, M=2693.3 (surf - 20mm).txt"

And the bigger one: "M=2693.3 (surf).csv"

Sorry for the inconvenience!

You have given a unitless guess for Q. That's a problem, because as soon as Q is non-zero you have a units mismatch in your equality. Set the guess to Q = 0*kg*s^-2.

As long as the guess is zero it's not a problem. I've played around with it though and it doesn't make a difference.

Something is really unstable in your system. Change the weighting on the constraint Q > 0 to 10^15 and you get a solution. I need to look at it some more, but I'm on a business trip in Asia at the moment, so I have limited time.

I really appreciate you taking the time to help!

I think the instability is caused by the number of unknowns. It's basically 106 equations with the same number of unknowns.

It's also at least partly due to the guess values. If you set the guesses to Q := Q.NOM, you get a solution regardless of the weighting of Q > 0.

Correct, but the problem is that for guesses resembling Qnom it'll return the guess as the solution.

When I try it the values are close (which they should be if the guesses are good), but they are not the same:

The problem is that the current dataset has a known solution to evaluate against, because I'm testing with artificial data. When I switch to data from real experiments, I won't have a guess to go on. I need the Q matrix to be "free".

Well, you may have a problem. Most solvers, including minerr, start at a point on the objective surface and head downhill. They stop when there's no significant improvement in the error when the parameters are changed. That happens either at a minimum or where the surface is flat. Note, however, that the minimum may not be the global minimum, and "flat" just means flat enough that the error doesn't change significantly when the parameters are changed. If the surface is nasty, with lots of local minima and/or flat spots, then the guess values (i.e. the starting point) are very important. I would need to spend a lot more time looking at your objective surface (which is not easy, because of its very high dimensionality), but I suspect that is where your problem lies.
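A toy Python sketch of the "head downhill until nothing improves" behaviour described above. It is plain gradient descent, not minerr's actual algorithm, on a made-up one-dimensional error surface: two wells, the left one slightly deeper, wrapped in tanh so that the surface becomes numerically flat far from the wells. The surface, step size and tolerance are all hypothetical.

```python
import math


def g(x):
    # Hypothetical 1-D error surface: two wells, the left one slightly deeper
    return (x * x - 1.0) ** 2 + 0.3 * x


def f(x):
    # tanh keeps the minima in the same places but flattens the surface far out
    return math.tanh(g(x))


def df(x):
    # Chain rule: d/dx tanh(g(x)) = (1 - tanh(g)^2) * g'(x)
    return (1.0 - math.tanh(g(x)) ** 2) * (4.0 * x * (x * x - 1.0) + 0.3)


def descend(x0, lr=0.05, tol=1e-10, max_iter=10_000):
    """Plain gradient descent: walk downhill, stop when the step is negligible."""
    x = x0
    for _ in range(max_iter):
        step = lr * df(x)
        if abs(step) < tol:  # no significant change: a minimum OR a flat spot
            break
        x -= step
    return x


for guess in (-0.5, 0.5, 6.0):
    x = descend(guess)
    print(f"guess={guess:5.1f} -> x={x:.4f}, error={f(x):.4f}")
```

From a guess of -0.5 the walk ends in the deeper left well; from 0.5 it stops in the shallower right well (a local minimum); and from 6.0 the computed gradient is exactly zero, so the guess comes back unchanged. That last case has the same symptom as the solver returning the guess as the solution.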

Is this something CTOL could alleviate? I'm also considering weighting certain points more heavily.
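Weighting a data point works the same way as weighting a constraint: the weight scales that point's squared residual inside the sum of squares. A minimal Python sketch for a hypothetical one-parameter model y = p*x, with made-up data, shows how down-weighting one point changes the fit.

```python
def weighted_slope(xs, ys, ws):
    """Weighted least-squares fit of the hypothetical model y = p*x.

    Minimizes sum(w_i * (p*x_i - y_i)**2); setting the derivative to zero
    gives p = sum(w*x*y) / sum(w*x*x). A larger w_i pulls the fit toward point i.
    """
    num = sum(w * x * y for x, y, w in zip(xs, ys, ws))
    den = sum(w * x * x for x, w in zip(xs, ws))
    return num / den


xs = [1.0, 2.0, 3.0]
ys = [1.0, 2.0, 6.0]  # hypothetical data; the last point is off the line y = x
print(weighted_slope(xs, ys, [1.0, 1.0, 1.0]))   # all points equal
print(weighted_slope(xs, ys, [1.0, 1.0, 1e-6]))  # last point nearly ignored
```

With equal weights the slope is 23/14 (about 1.64); with the third point almost ignored it is essentially 1.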

CTOL may help with flat spots (if they are not truly flat), but not with local minima. A weighted fit may help. What would help most, though, is some way of generating better guess values. In some cases a grid search over possible guess values is useful, but that's not going to work in your case because of the number of parameters: with n trial values per parameter, the number of grid points is n raised to the number of parameters, so it grows exponentially. There are other optimization algorithms that may work better, such as simulated annealing, but they are not implemented as built-in Mathcad functions. With such a large number of parameters they would probably also be very slow.
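For reference, a minimal simulated annealing loop in Python on a made-up one-dimensional error surface with a global minimum near x = -1.04 and a local one near x = 0.96. This is a generic sketch with arbitrary, hypothetical settings (starting temperature, cooling factor, step size, seed), not anything Mathcad provides. Unlike a downhill-only search, it sometimes accepts uphill moves, which gives it a chance to climb out of a local well; with 106 parameters it would need vastly more evaluations.

```python
import math
import random


def error(x):
    # Hypothetical 1-D error surface: global minimum near -1.04, local near 0.96
    return (x * x - 1.0) ** 2 + 0.3 * x


def anneal(x0, t0=1.0, cooling=0.995, steps=5_000, step_size=0.5, seed=0):
    """Minimal simulated annealing; returns the best point visited and its error.

    Downhill moves are always accepted; uphill moves are accepted with
    probability exp(-delta / T). T shrinks geometrically each step, so
    uphill moves become rare and the walk settles down.
    """
    rng = random.Random(seed)  # seeded so runs are reproducible
    x, fx = x0, error(x0)
    best_x, best_f = x, fx
    t = t0
    for _ in range(steps):
        cand = x + rng.uniform(-step_size, step_size)
        fc = error(cand)
        delta = fc - fx
        if delta <= 0 or rng.random() < math.exp(-delta / t):
            x, fx = cand, fc
            if fx < best_f:
                best_x, best_f = x, fx
        t *= cooling
    return best_x, best_f


x, e = anneal(0.96)  # start in the shallower, local well
print(f"best x = {x:.3f}, error = {e:.4f}")
```

Because the search is random, nothing guarantees it reaches the global minimum; it only returns the best point it happened to visit.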